The collection of pre-trained, state-of-the-art AI models.

## About ailia SDK

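ailia SDK is a self-contained, cross-platform, high-speed inference SDK for AI. It provides a consistent C++ API on Windows, Mac, Linux, iOS, Android, Jetson, and Raspberry Pi. It also supports Unity (C#), Python, Rust, Flutter (Dart), and JNI for efficient AI implementation. ailia SDK makes extensive use of the GPU through Vulkan and Metal to enable accelerated computing.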

## How to use

New: ailia SDK can now be installed with `pip3 install ailia`!

ailia MODELS tutorial

ailia MODELS tutorial (Japanese version)

## Supported models

340 models as of August 9th, 2024

## Latest update

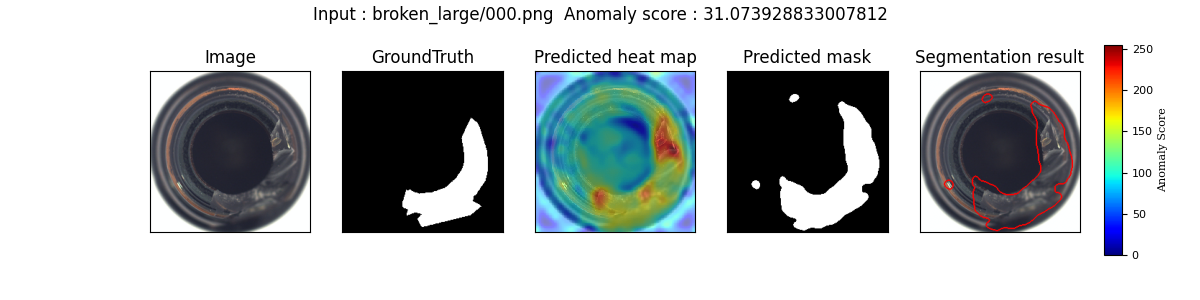

- 2024.08.09 Add mahalanobis-ad, t5_base_japanese_ner

- 2024.08.08 Add sdxl-turbo, sd-turbo

- 2024.08.05 Migrate from Transformers to ailia Tokenizer 1.3

- 2024.07.16 Add grounded_sam

- 2024.07.12 Add llava

- 2024.07.09 Add GroundingDINO

- More information in our Wiki

## Action recognition

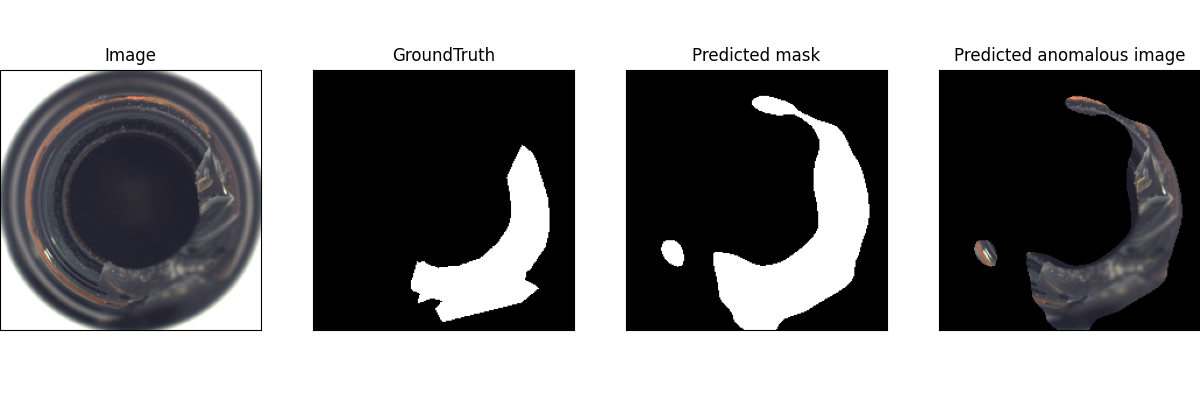

## Anomaly detection

## Audio processing

## Background removal

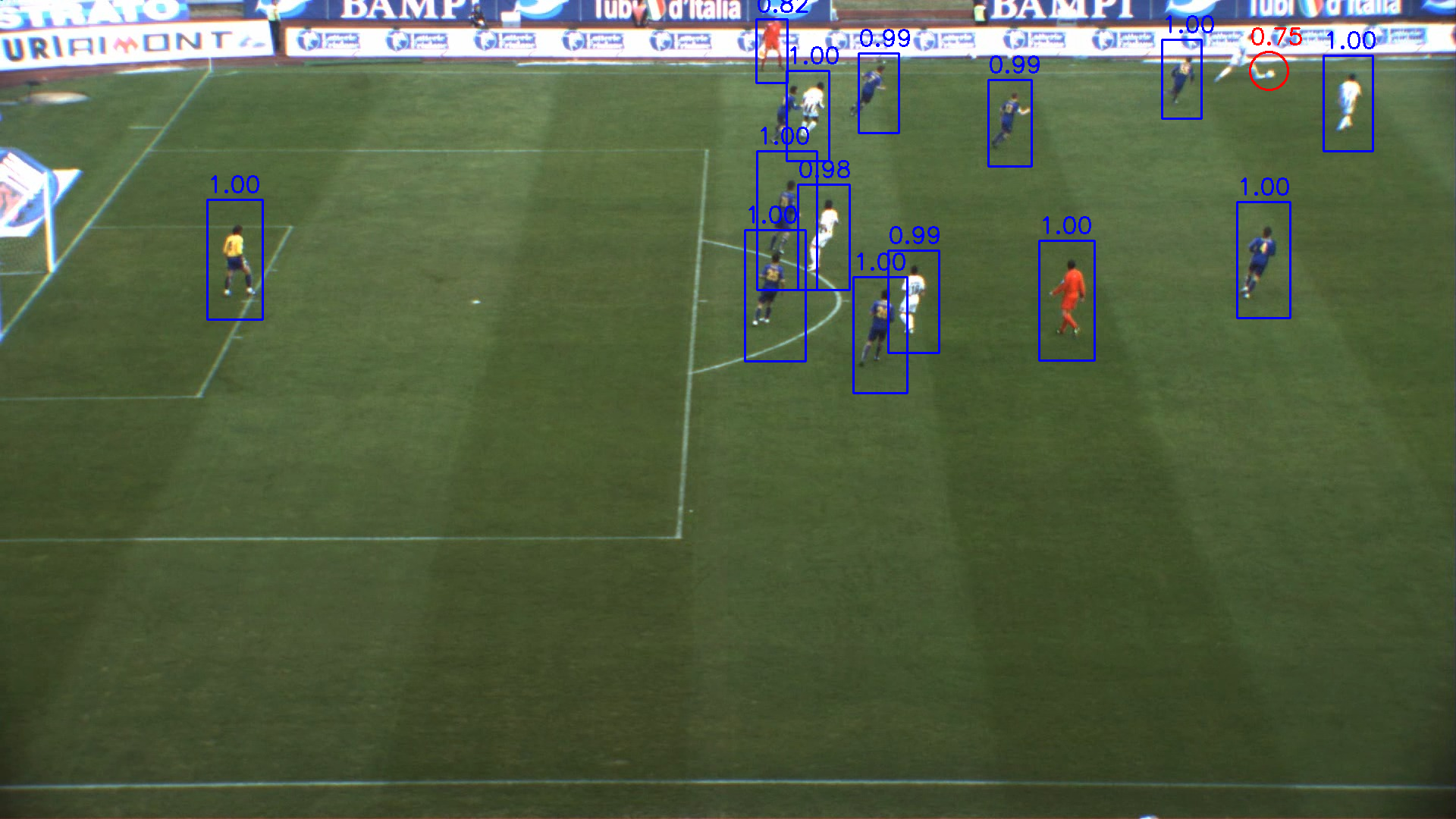

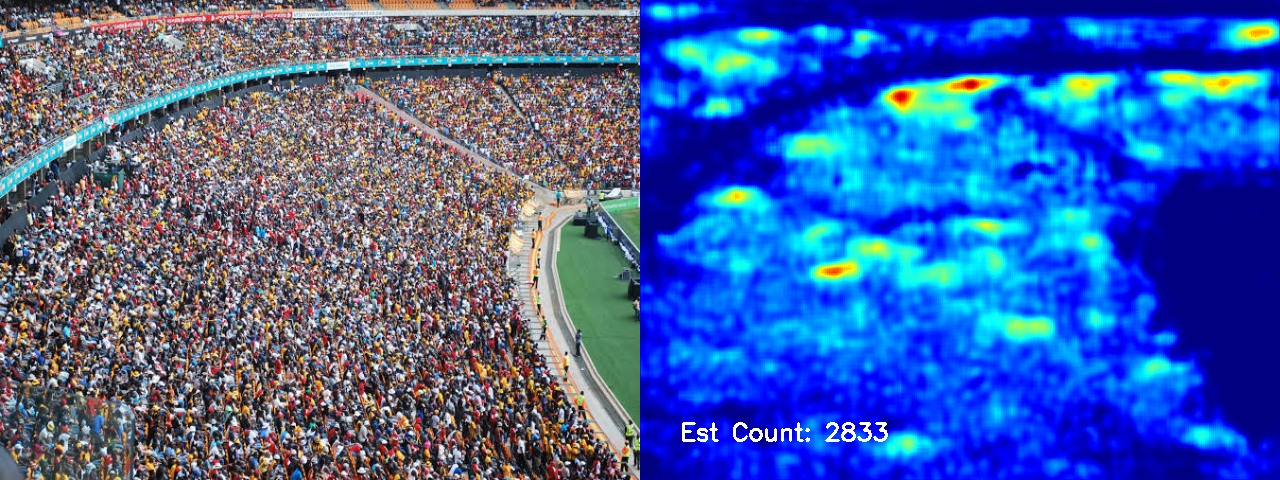

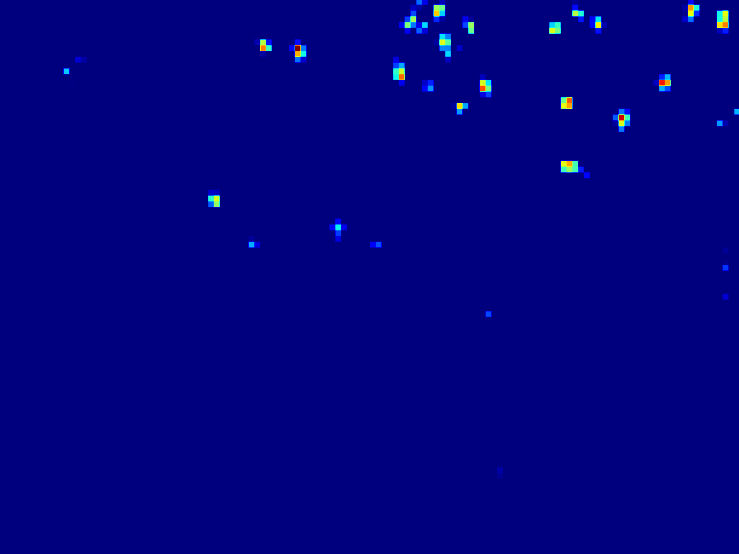

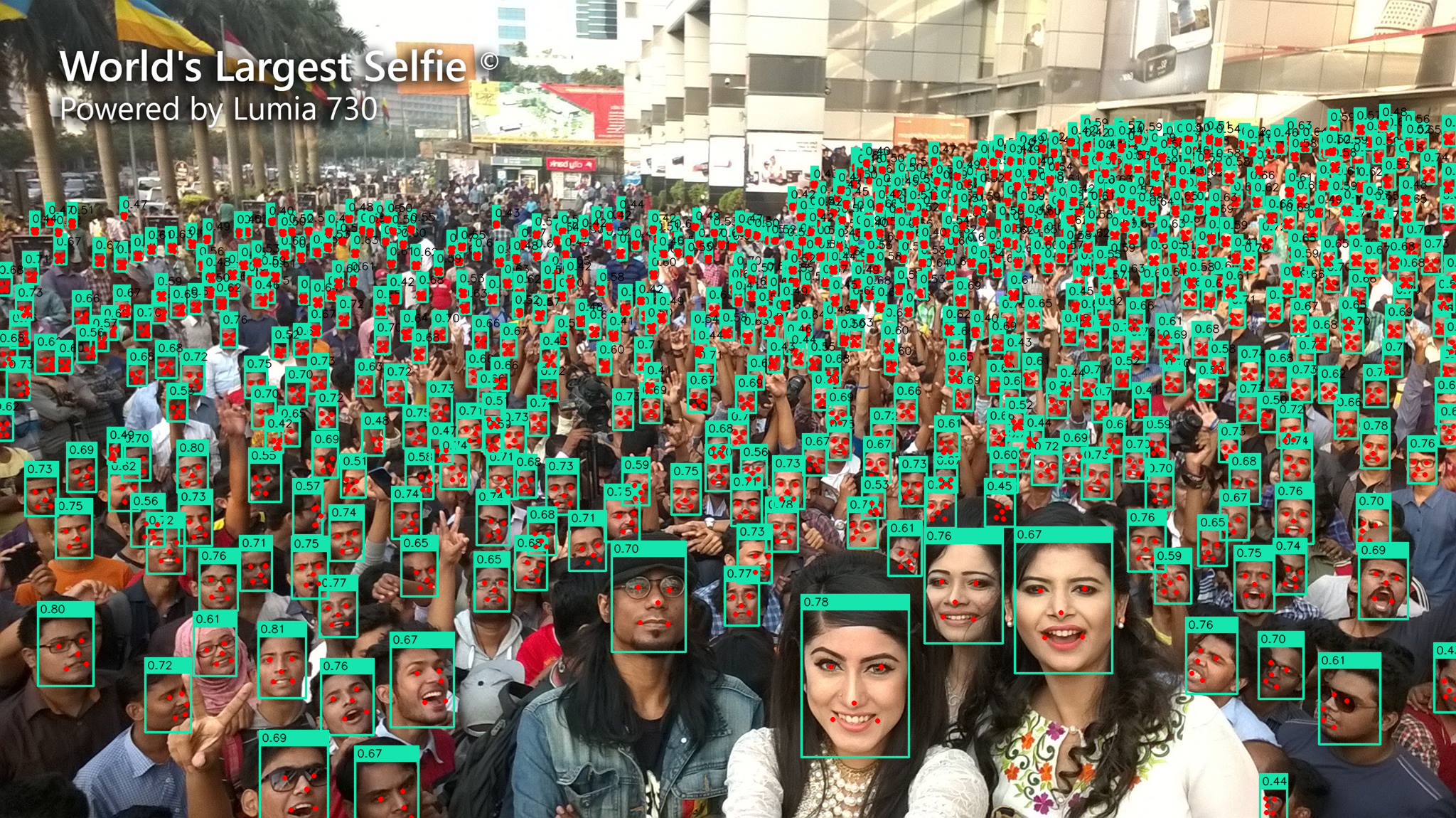

## Crowd counting

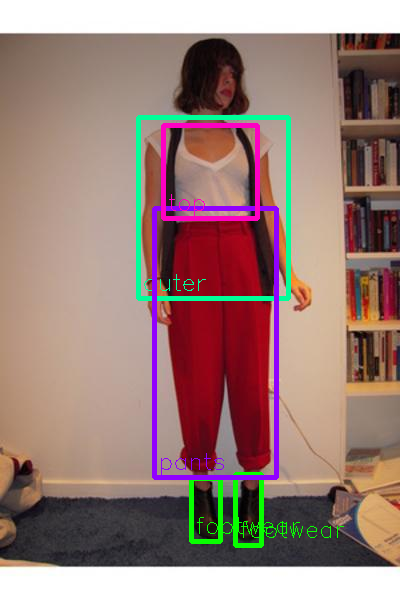

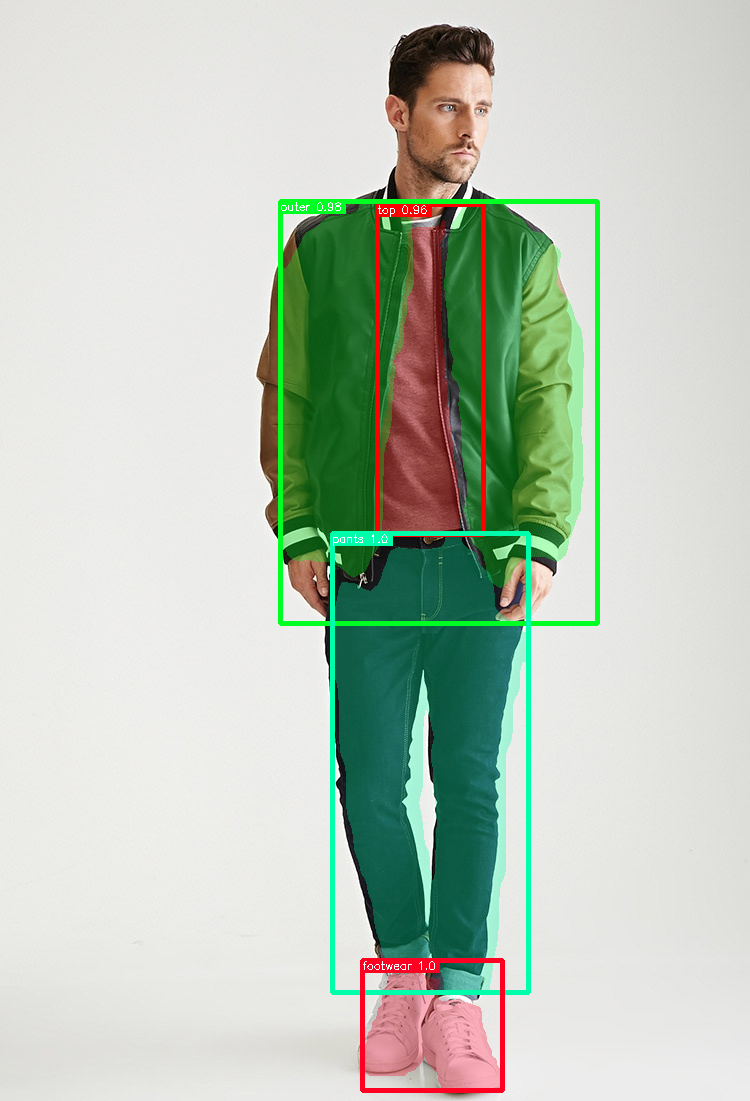

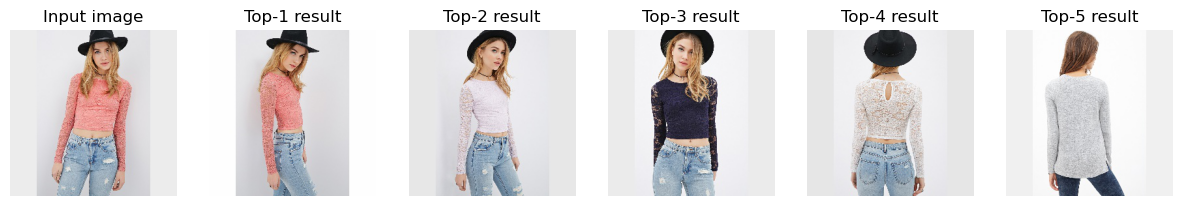

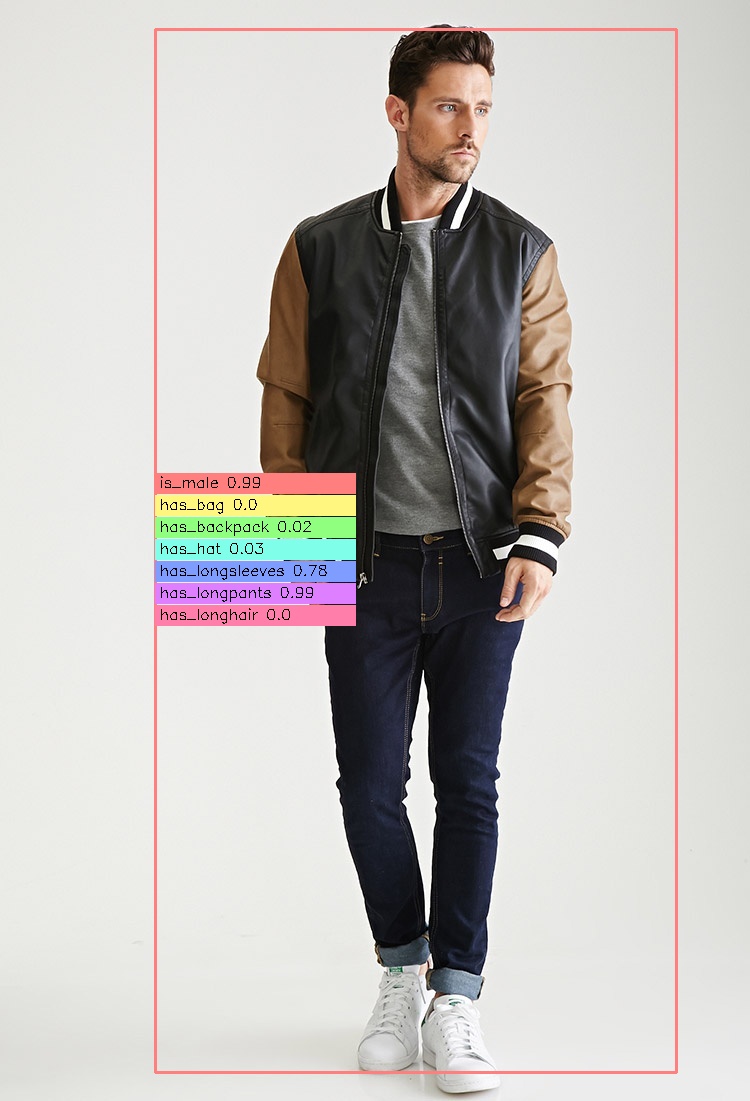

## Deep fashion

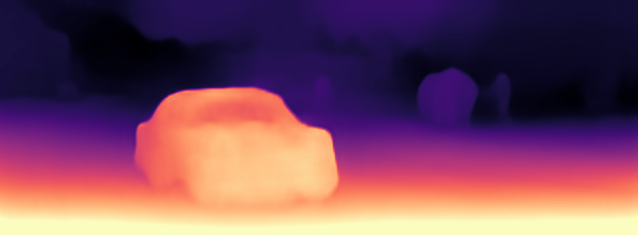

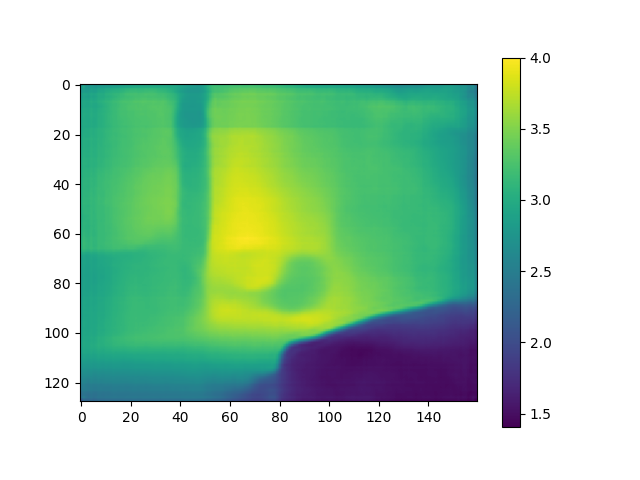

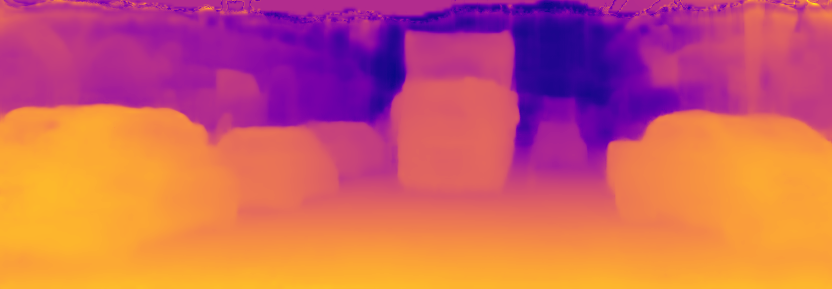

## Depth estimation

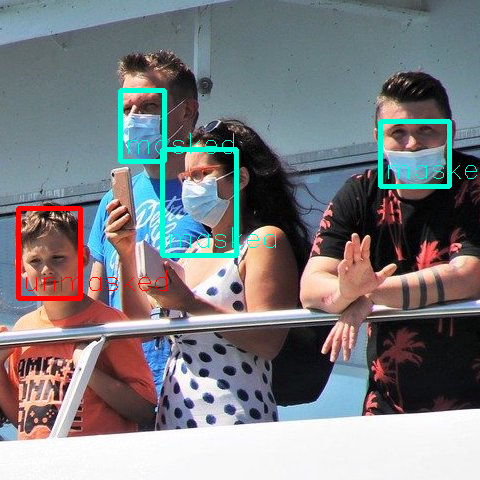

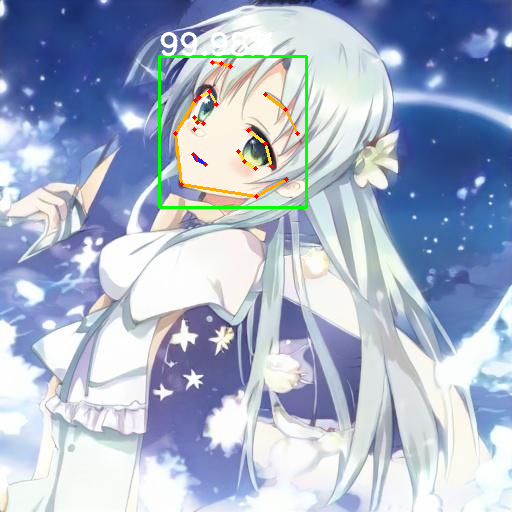

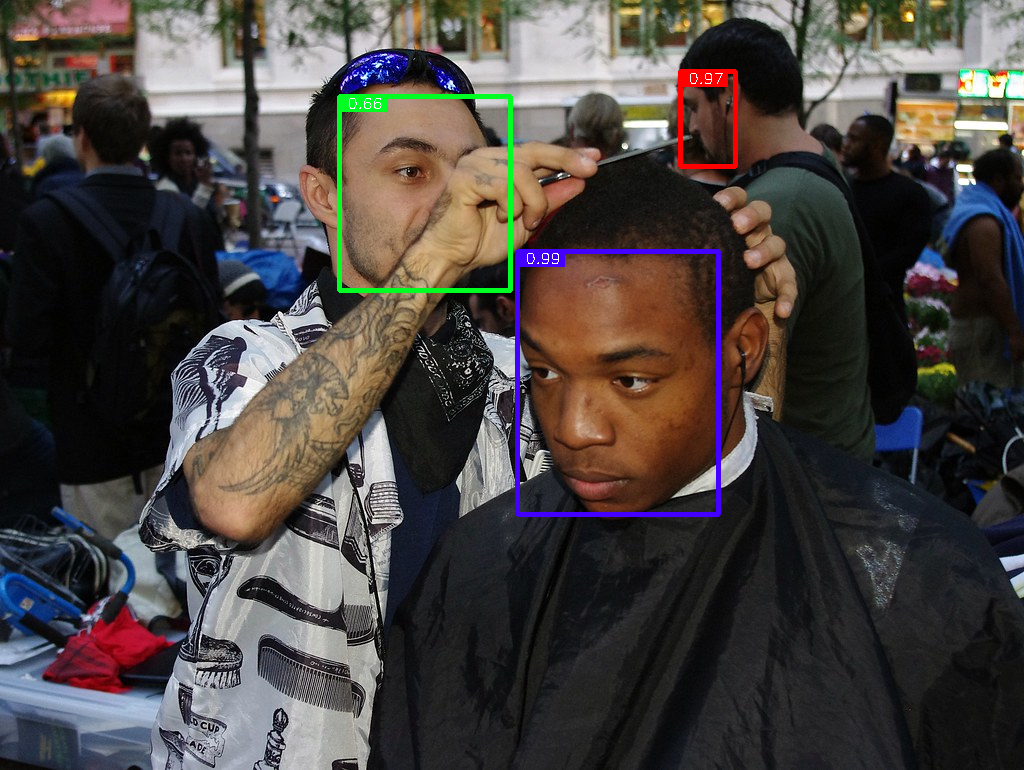

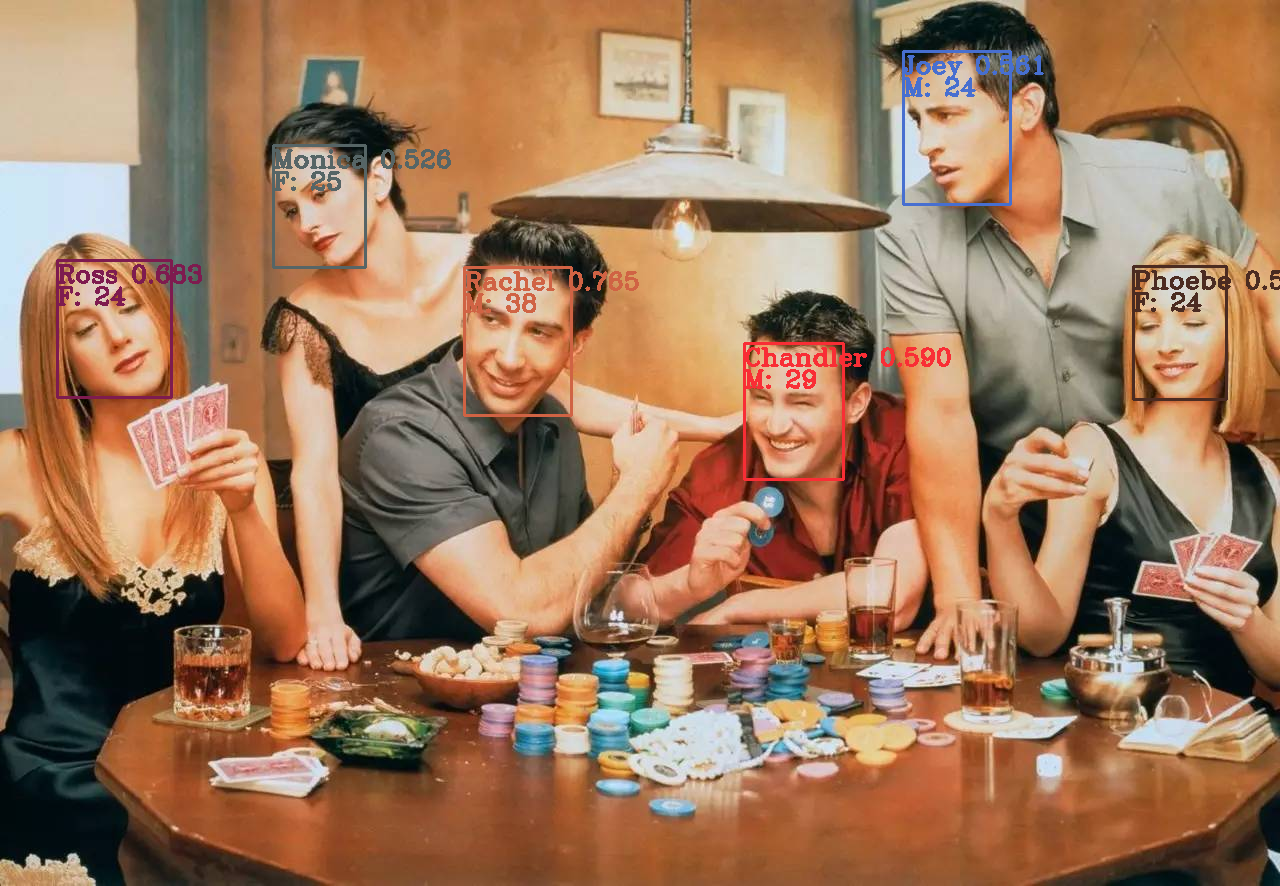

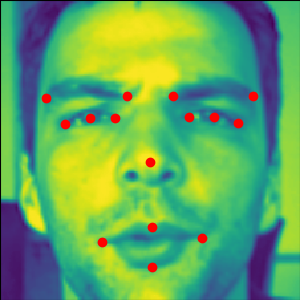

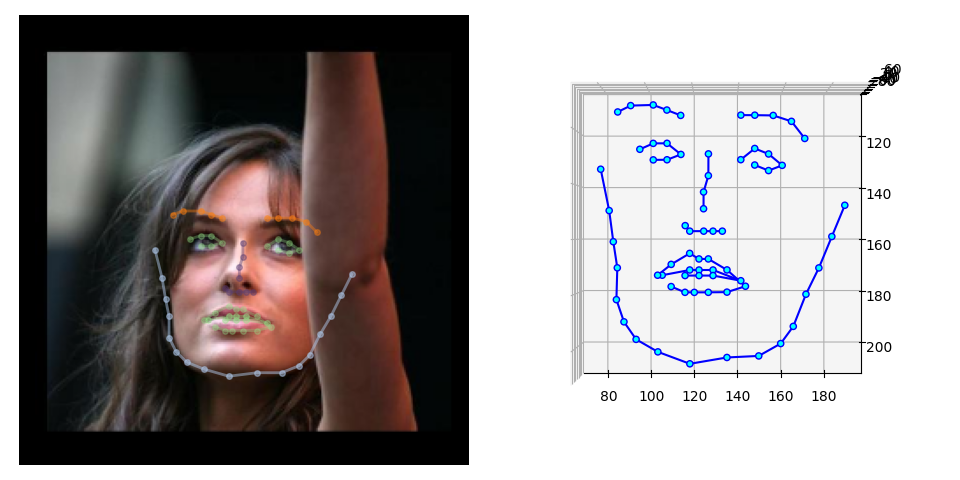

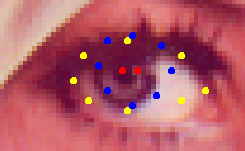

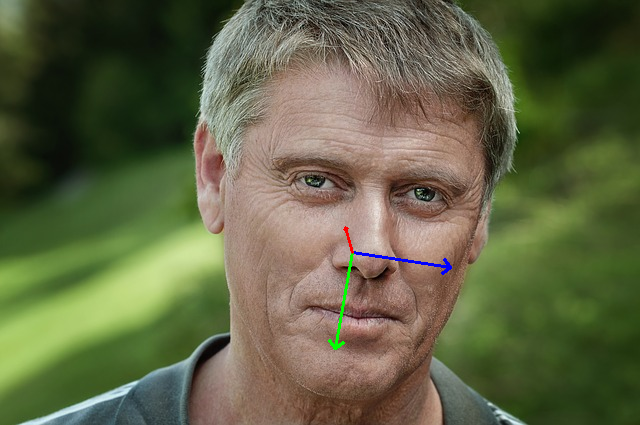

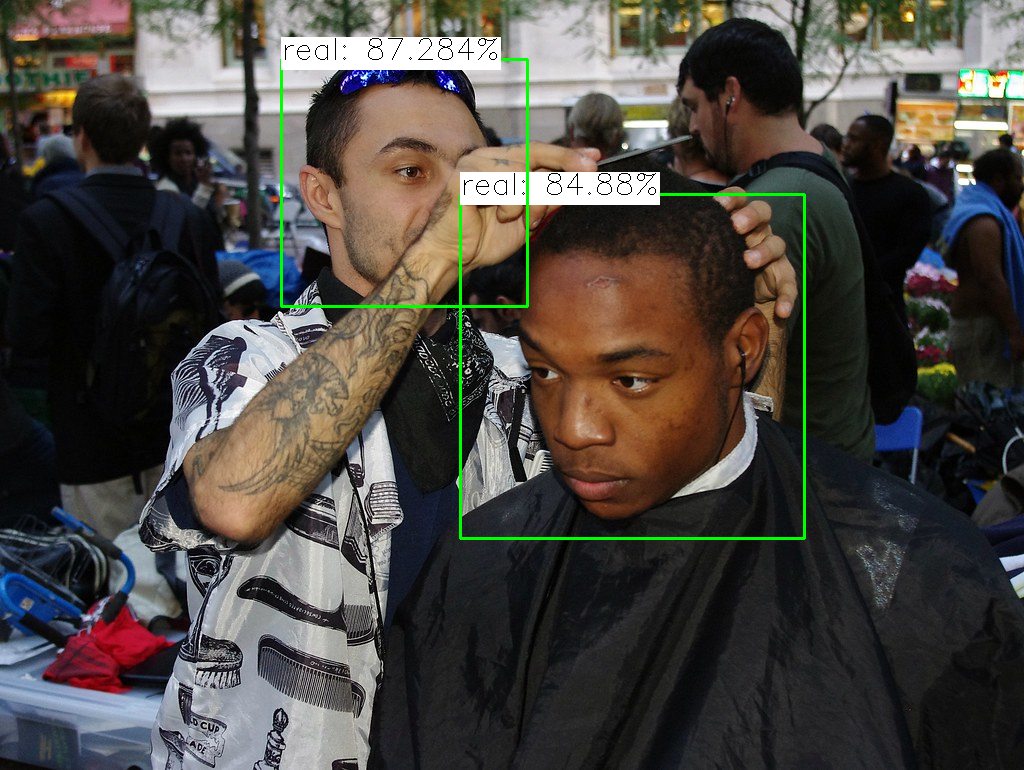

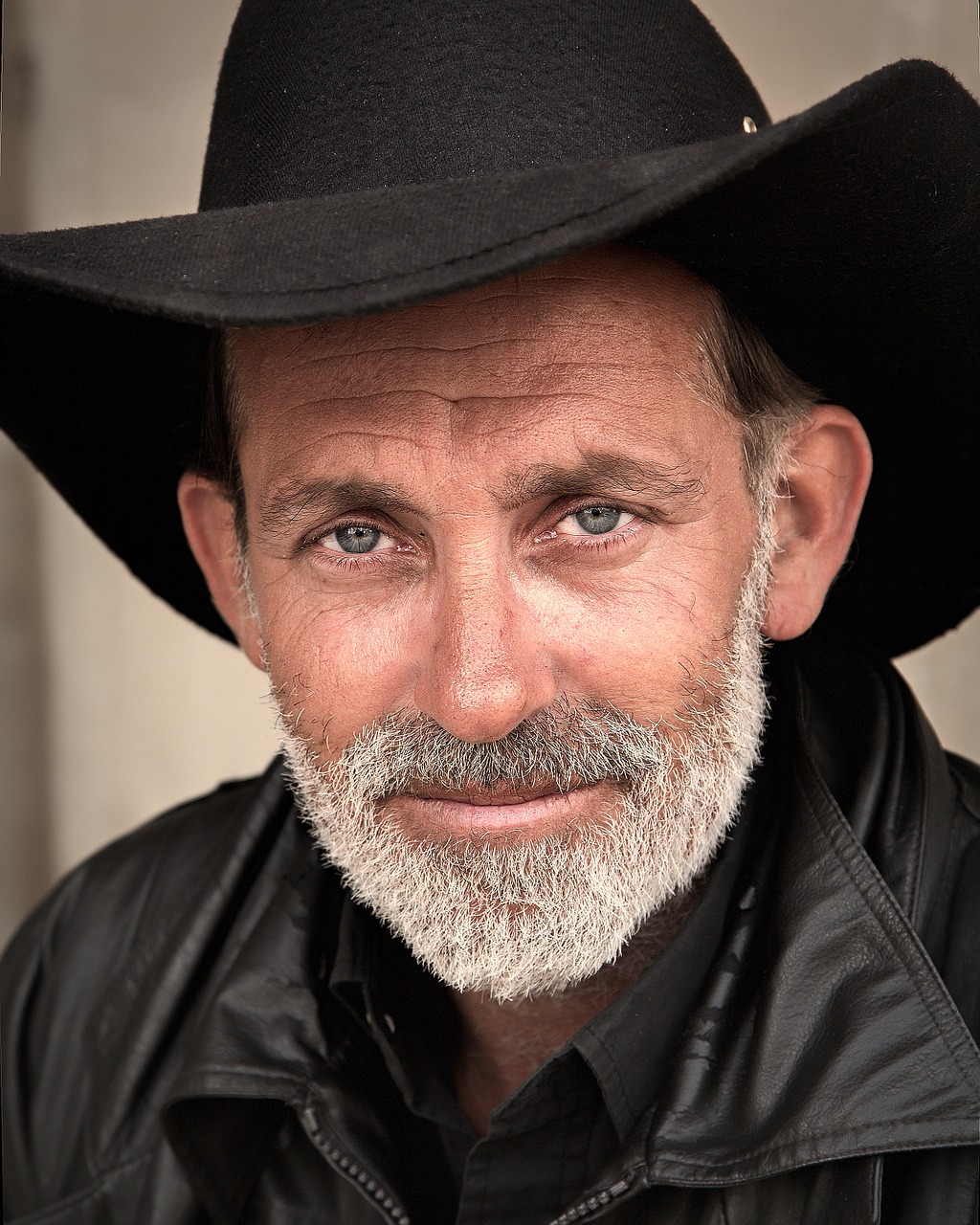

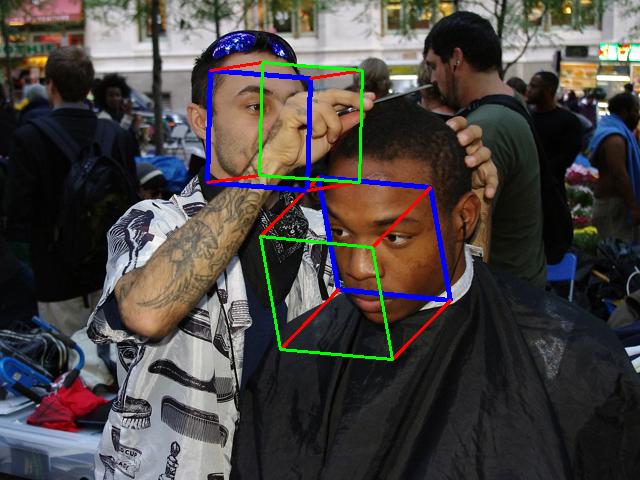

## Face detection

## Face identification

## Face swapping

## Frame interpolation

## Generative adversarial networks

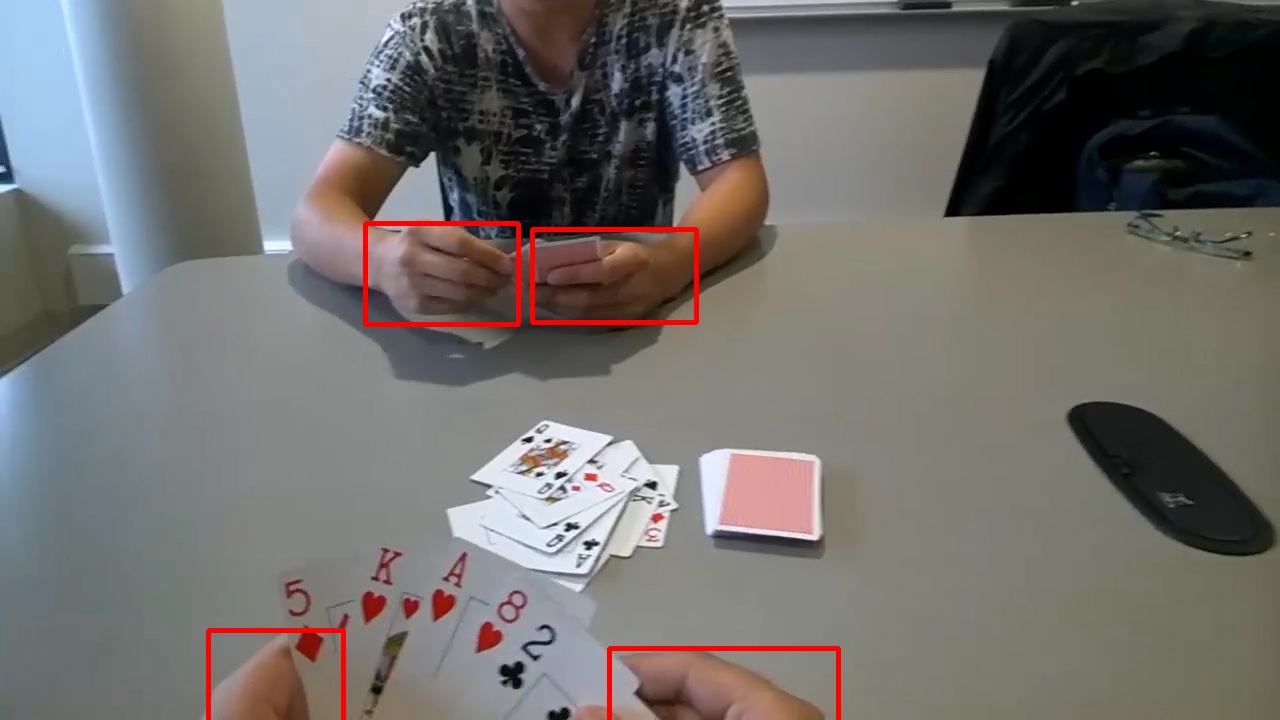

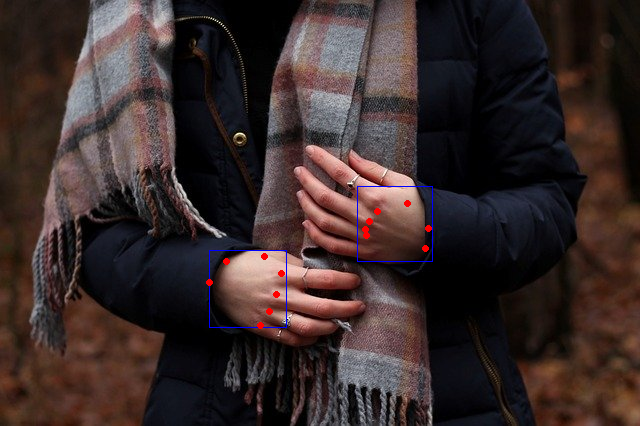

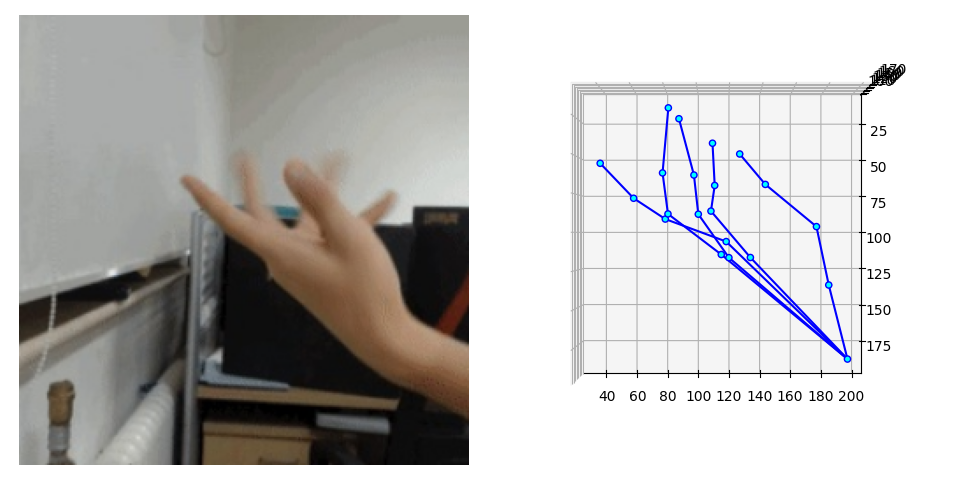

## Hand detection

## Hand recognition

## Image captioning

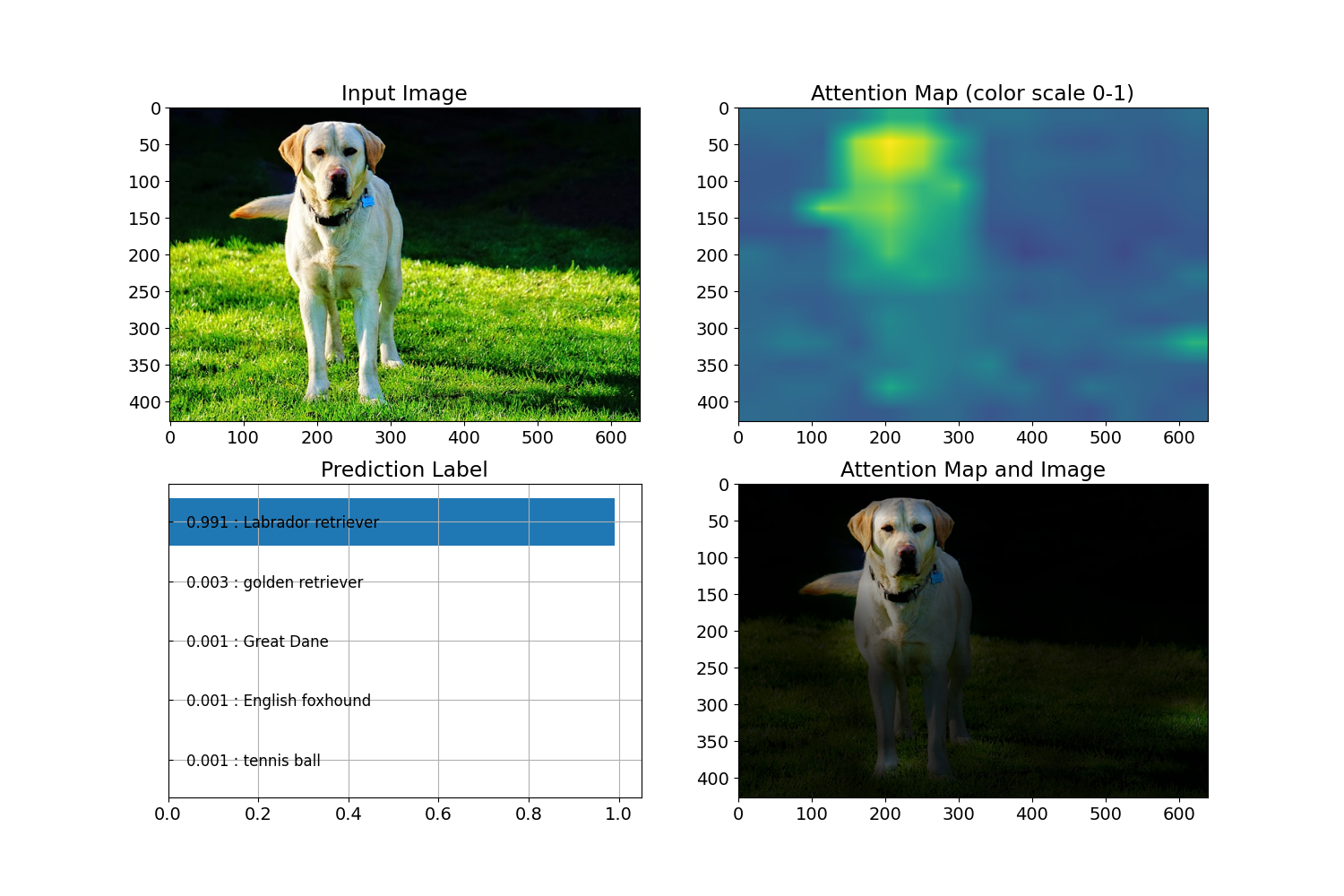

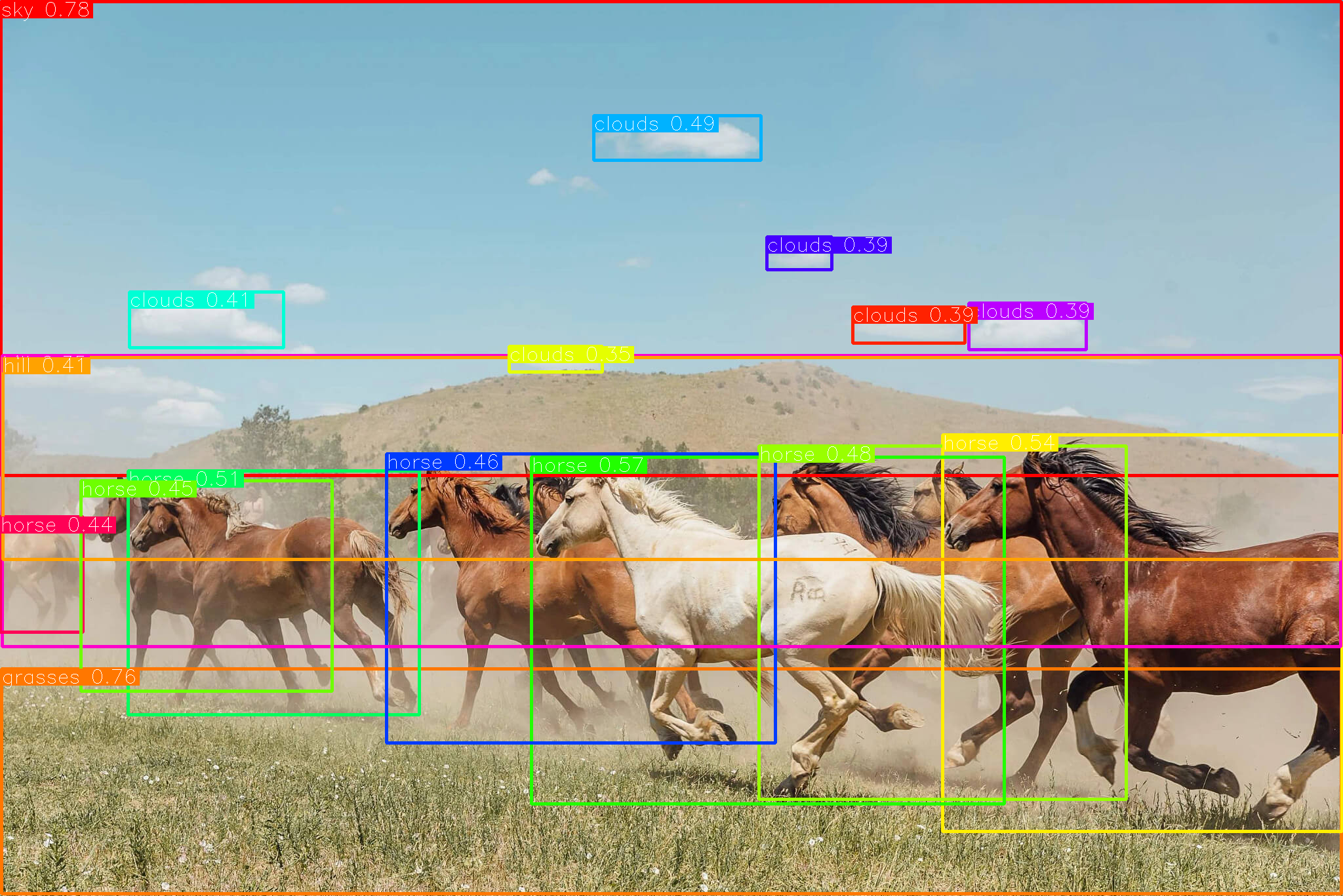

## Image classification

## Image inpainting

## Image manipulation

## Image restoration

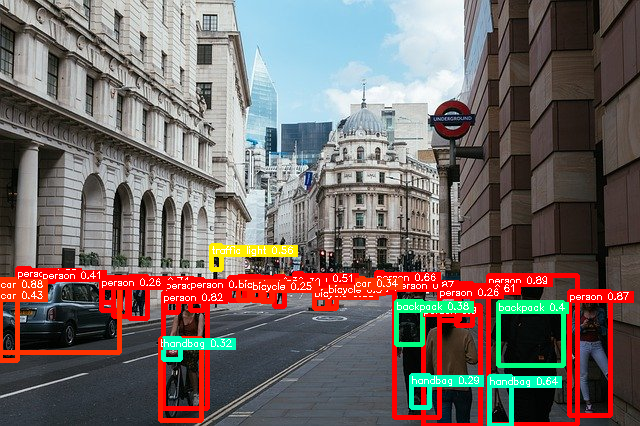

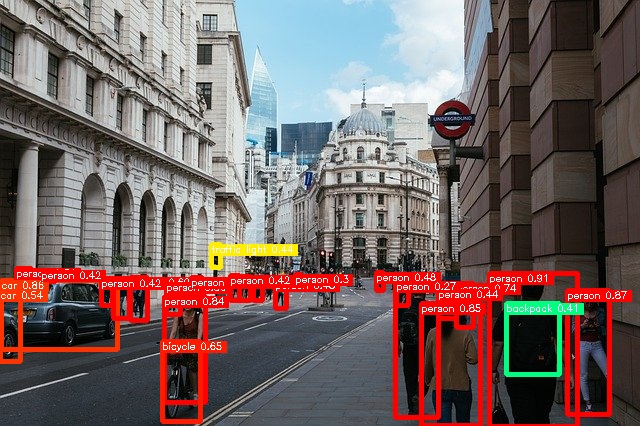

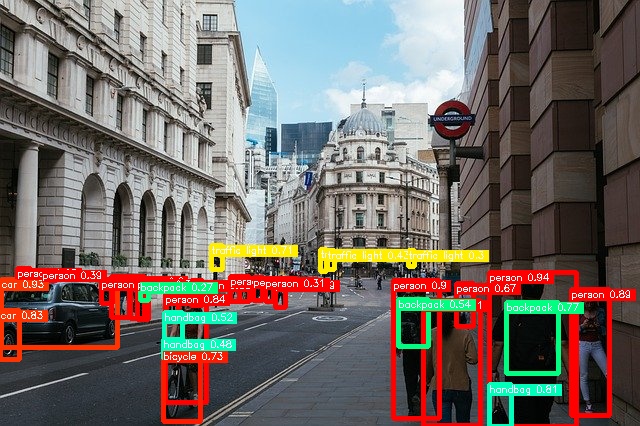

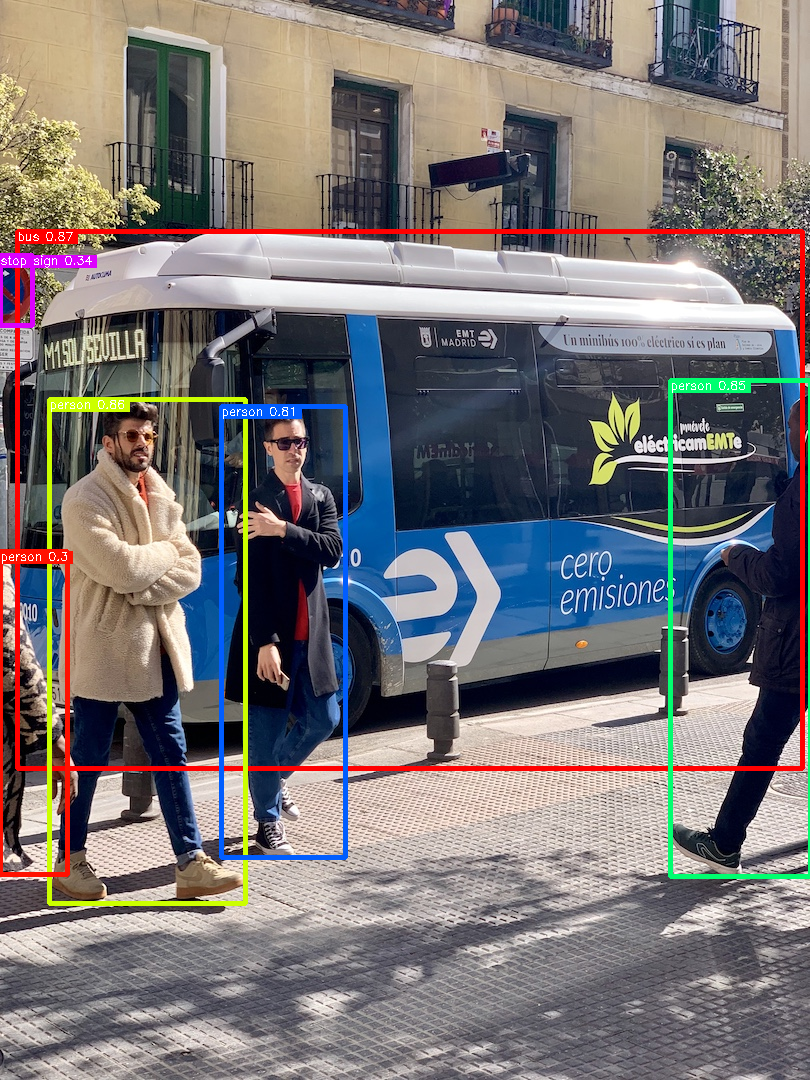

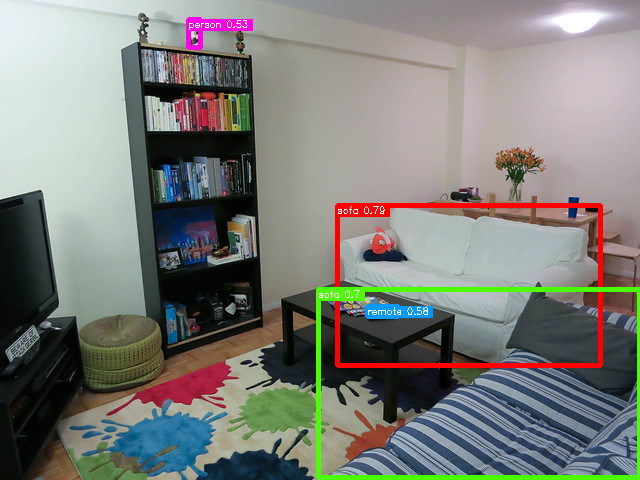

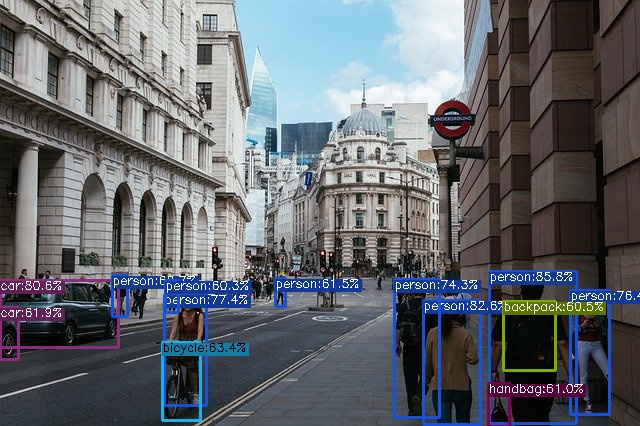

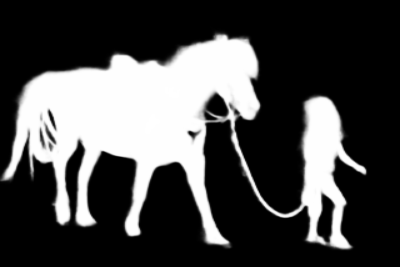

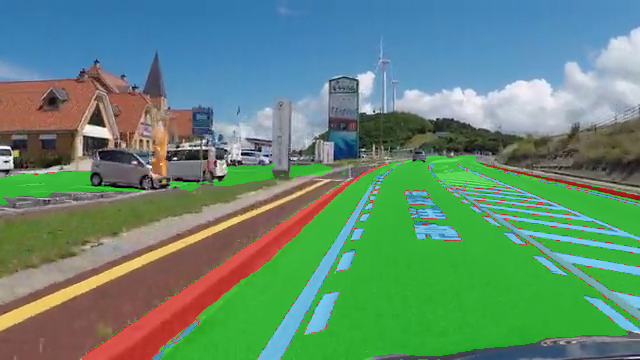

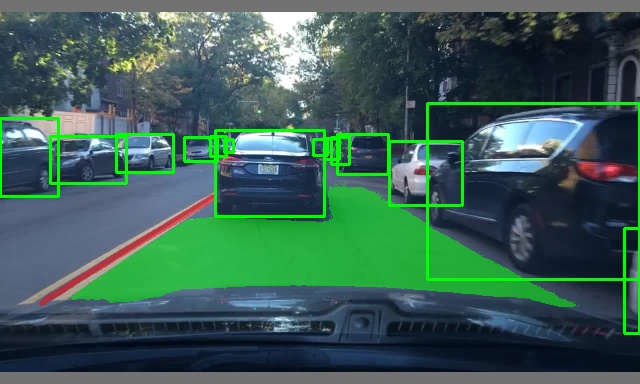

图像分割

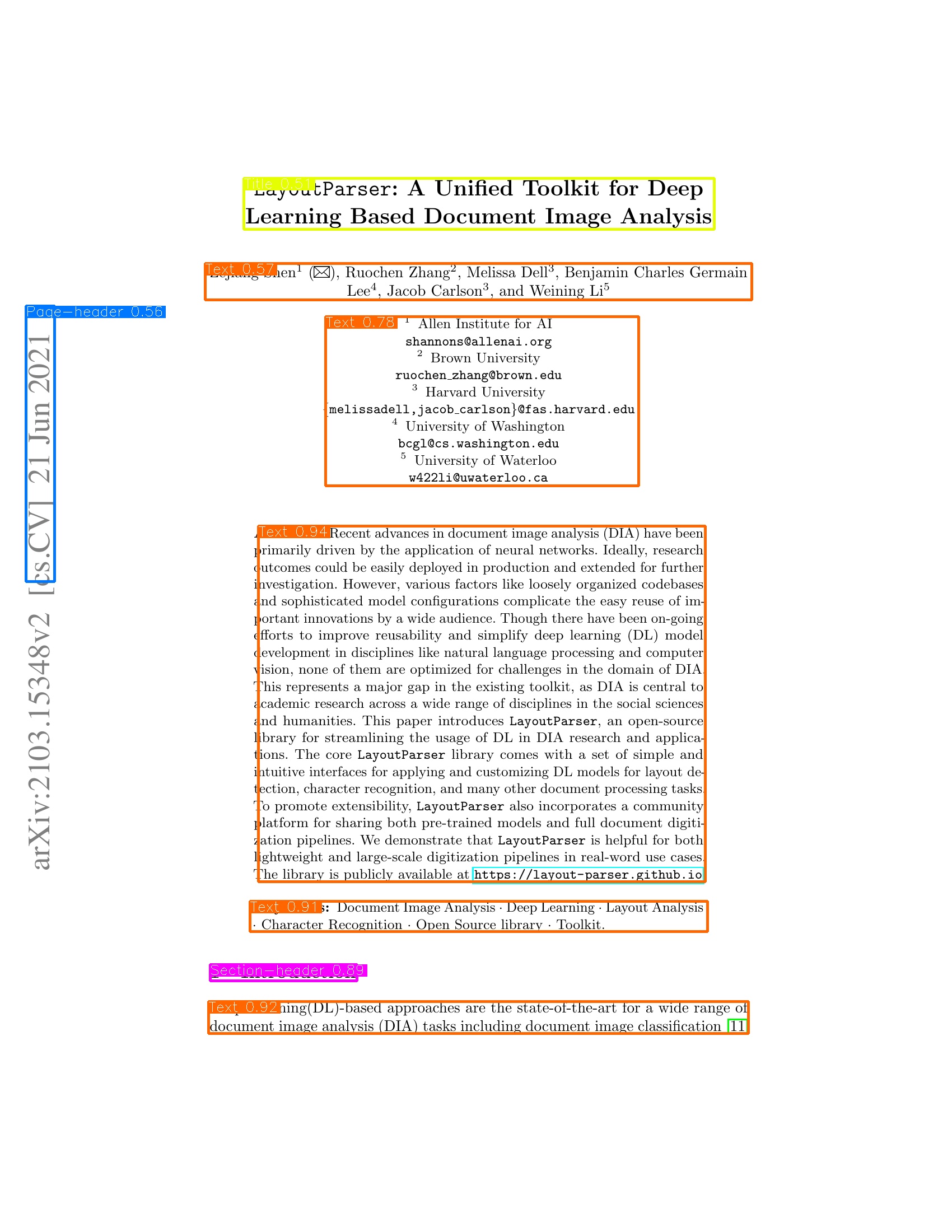

| | 模型 | 参考 | 导出自 | 支持的 Ailia 版本 | 博客 |

|:-----------|------------:|:------------:|:------------:|:------------:|:------------:|

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/86482ba2-87bc-4775-b500-084913324d00.png" width=128px>](image_segmentation/deeplabv3/) | [deeplabv3](/image_segmentation/deeplabv3/) | [DeepLab v3+的Xception65主干网络](https://github.com/tensorflow/models/tree/master/research/deeplab) | Chainer | 1.2.0及更高版本 |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/40d6b198-22e0-4a76-b050-ebc2515a97ee.png" width=128px>](image_segmentation/hrnet_segmentation/) | [hrnet_segmentation](/image_segmentation/hrnet_segmentation/) | [高分辨率网络(HRNets)用于语义分割](https://github.com/HRNet/HRNet-Semantic-Segmentation) | Pytorch | 1.2.1及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/1e320bcc-53eb-406d-aa8a-515fcd22eb59.png" width=128px>](image_segmentation/hair_segmentation/) | [hair_segmentation](/image_segmentation/hair_segmentation/) | [移动设备上的头发分割](https://github.com/thangtran480/hair-segmentation) | Keras | 1.2.1及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/04966dfb-1ece-4e10-81e3-3889bb017322.png" width=128px>](image_segmentation/pspnet-hair-segmentation/) | [pspnet-hair-segmentation](/image_segmentation/pspnet-hair-segmentation/) | [pytorch-头发分割](https://github.com/YBIGTA/pytorch-hair-segmentation) | Pytorch | 1.2.2及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/25de63d3-8862-4074-bca6-d44c10cc2650.png" width=128px>](image_segmentation/human_part_segmentation/) | [human_part_segmentation](/image_segmentation/human_part_segmentation/) | [人体解析的自我纠正](https://github.com/PeikeLi/Self-Correction-Human-Parsing) | Pytorch | 1.2.4及更高版本 | [EN](https://medium.com/axinc-ai/humanpartsegmentation-a-machine-learning-model-for-segmenting-human-parts-cd7e39480714) [JP](https://medium.com/axinc/humanpartsegmentation-%E5%8B%95%E7%94%BB%E3%81%8B%E3%82%89%E4%BD%93%E3%81%AE%E9%83%A8%E4%BD%8D%E3%82%92%E3%82%BB%E3%82%B0%E3%83%A1%E3%83%B3%E3%83%86%E3%83%BC%E3%82%B7%E3%83%A7%E3%83%B3%E3%81%99%E3%82%8B%E6%A9%9F%E6%A2%B0%E5%AD%A6%E7%BF%92%E3%83%A2%E3%83%87%E3%83%AB-e8a0e405255) |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/86449775-771e-413d-928f-49cf76ba9ffd.png" width=128px>](image_segmentation/semantic-segmentation-mobilenet-v3/) | [semantic-segmentation-mobilenet-v3](/image_segmentation/semantic-segmentation-mobilenet-v3) | [使用MobileNetV3进行语义分割](https://github.com/OniroAI/Semantic-segmentation-with-MobileNetV3) | TensorFlow | 1.2.5及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/0e4dd8bc-92a9-4125-bb4c-d30f23e1213b.jpg" width=128px>](image_segmentation/pytorch-unet/) | [pytorch-unet](/image_segmentation/pytorch-unet/) | [Pytorch-Unet](https://github.com/milesial/Pytorch-UNet) | Pytorch | 1.2.5及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/c181f4bc-2cfa-4804-aeeb-60267951122f.png" width=128px>](image_segmentation/pytorch-enet/) | [pytorch-enet](/image_segmentation/pytorch-enet/) | [PyTorch-ENet](https://github.com/davidtvs/PyTorch-ENet) | Pytorch | 1.2.8及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/2662f1e7-4ee3-4091-b743-4000ae4335c2.png" width=128px>](image_segmentation/yet-another-anime-segmenter/) | [yet-another-anime-segmenter](/image_segmentation/yet-another-anime-segmenter/) | [Yet Another Anime Segmenter](https://github.com/zymk9/Yet-Another-Anime-Segmenter) | Pytorch | 1.2.6及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/6dc9b9f1-6ac0-492d-82b7-1317637fd6b6.png" width=128px>](image_segmentation/swiftnet/) | [swiftnet](/image_segmentation/swiftnet/) | [SwiftNet](https://github.com/orsic/swiftnet) | Pytorch | 1.2.6及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/926da1b5-88a0-4188-bbd4-9043c5afc654.png" width=128px>](image_segmentation/dense_prediction_transformers/) | [dense_prediction_transformers](/image_segmentation/dense_prediction_transformers/) | [用于密集预测的视觉Transformer](https://github.com/intel-isl/DPT) | Pytorch | 1.2.7及更高版本 | [EN](https://medium.com/axinc-ai/dpt-segmentation-model-using-vision-transformer-b479f3027468) [JP](https://medium.com/axinc/dpt-vision-transformer%E3%82%92%E4%BD%BF%E7%94%A8%E3%81%97%E3%81%9F%E3%82%BB%E3%82%B0%E3%83%A1%E3%83%B3%E3%83%86%E3%83%BC%E3%82%B7%E3%83%A7%E3%83%B3%E3%83%A2%E3%83%87%E3%83%AB-88db4842b4a7) |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/5d7ebfb6-aa18-4f59-b800-60637ae534d8.png" width=128px>](image_segmentation/paddleseg/) | [paddleseg](/image_segmentation/paddleseg/) | [PaddleSeg](https://github.com/PaddlePaddle/PaddleSeg/tree/release/2.3/contrib/CityscapesSOTA) | Pytorch | 1.2.7及更高版本 | [EN](https://medium.com/axinc-ai/paddleseg-highly-accurate-segmentation-model-using-hierarchical-attention-18e69363dc2a) [JP](https://medium.com/axinc/paddleseg-%E9%9A%8E%E5%B1%A4%E7%9A%84%E3%81%AA%E3%82%A2%E3%83%86%E3%83%B3%E3%82%B7%E3%83%A7%E3%83%B3%E3%82%92%E4%BD%BF%E7%94%A8%E3%81%97%E3%81%9F%E9%AB%98%E7%B2%BE%E5%BA%A6%E3%81%AA%E3%82%BB%E3%82%B0%E3%83%A1%E3%83%B3%E3%83%86%E3%83%BC%E3%82%B7%E3%83%A7%E3%83%B3%E3%83%A2%E3%83%87%E3%83%AB-acc89bf50423) |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/49c8de46-512a-46e2-bee5-6965a9cb12ee.png" width=128px>](image_segmentation/pp_liteseg/) | [pp_liteseg](/image_segmentation/pp_liteseg/) | [PP-LiteSeg](https://github.com/PaddlePaddle/PaddleSeg/tree/develop/configs/pp_liteseg) | Pytorch | 1.2.10及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/dabc6212-5b12-46da-8f1b-a0660e55b638.jpg" width=128px>](image_segmentation/suim/) | [suim](/image_segmentation/suim/) | [SUIM](https://github.com/IRVLab/SUIM) | Keras | 1.2.6及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/724e5404-a272-4eba-afa8-eb7dbf0f3472.png" width=128px>](image_segmentation/group_vit/) | [group_vit](/image_segmentation/group_vit/) | [GroupViT](https://github.com/NVlabs/GroupViT) | Pytorch | 1.2.10及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/de9b5887-9c5a-4f46-ba5c-519b575b8409.png" width=128px>](image_segmentation/anime-segmentation/) | [anime-segmentation](/image_segmentation/anime-segmentation/) | [动漫分割](https://github.com/SkyTNT/anime-segmentation) | Pytorch | 1.2.9及更高版本 |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/00b27b55-6b7d-45f0-9401-4fa29c4d3b86.png" width=128px>](image_segmentation/segment-anything/) | [segment-anything](/image_segmentation/segment-anything/) | [Segment Anything](https://github.com/facebookresearch/segment-anything) | Pytorch | 1.2.16及更高版本 |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/e3a704dc-e733-40d5-bf3b-047adb2218e3.png" width=128px>](image_segmentation/tusimple-DUC/) | [tusimple-DUC](/image_segmentation/tusimple-DUC/) | [TuSimple-DUC](https://github.com/TuSimple/TuSimple-DUC) | Pytorch | 1.2.10及更高版本 | |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/2e9421ff-ba09-4528-a194-6c68bf5b6ac5.jpg" width=128px>](image_segmentation/pytorch-fcn/) | [pytorch-fcn](/image_segmentation/pytorch-fcn/) | [pytorch-fcn](https://github.com/wkentaro/pytorch-fcn) | Pytorch | 1.3.0及更高版本 |

| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/fc795dec-3e8f-4a08-b243-75d1b0a7a240.png" width=128px>](image_segmentation/grounded_sam/) | [grounded_sam](/image_segmentation/grounded_sam/) | [Grounded-SAM](https://github.com/IDEA-Research/Grounded-Segment-Anything/tree/main) | Pytorch | 1.2.16及更高版本 |
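
The Supported Ailia Version column in each model table gives the minimum ailia SDK version that model needs. Compare these strings numerically, not as text: under plain string comparison `"1.2.10" < "1.2.9"` is true. Below is a minimal Python sketch of that check. Its assumptions:

- `parse_version`, `meets_min_version` and `installed_ailia_version` are hypothetical helpers, not part of the ailia SDK.
- Versions are plain dotted integers.
- The installed version is read from the pip package named `ailia` through the standard-library `importlib.metadata`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple


def parse_version(text: str, width: int = 3) -> Tuple[int, ...]:
    """Hypothetical helper: turn '1.2.10' into (1, 2, 10).

    Missing trailing parts are padded with zeros so '1.3' equals '1.3.0'.
    Assumes plain dotted integers (no 'rc', 'post', or local suffixes).
    """
    parts = [int(piece) for piece in text.strip().split(".")]
    parts += [0] * (width - len(parts))  # no-op when there are already >= width parts
    return tuple(parts)


def meets_min_version(installed: str, minimum: str) -> bool:
    """Hypothetical helper: True if `installed` >= `minimum`, compared numerically."""
    return parse_version(installed) >= parse_version(minimum)


def installed_ailia_version() -> Optional[str]:
    """Version of the pip-installed `ailia` package, or None if it is not installed."""
    try:
        return version("ailia")
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Minimum versions copied from the image segmentation table above.
    requirements = {"deeplabv3": "1.2.0", "pytorch-fcn": "1.3.0", "segment-anything": "1.2.16"}
    current = installed_ailia_version()
    if current is None:
        print("ailia is not installed (try: pip3 install ailia)")
    else:
        for model, minimum in requirements.items():
            status = "ok" if meets_min_version(current, minimum) else f"needs {minimum} or later"
            print(f"{model}: {status}")
```

Comparing integer tuples keeps the check free of dependencies. If a version string carries a pre-release tag such as `rc1`, a full parser like `packaging.version.Version` would be the safer choice.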

## Large language model

| Model | Reference | Exported From | Supported Ailia Version | Blog |
|------------:|:------------:|:------------:|:------------:|:------------:|
| [llava](/large_language_model/llava) | [LLaVA](https://github.com/haotian-liu/LLaVA) | Pytorch | 1.2.16 and later | |

## Landmark classification

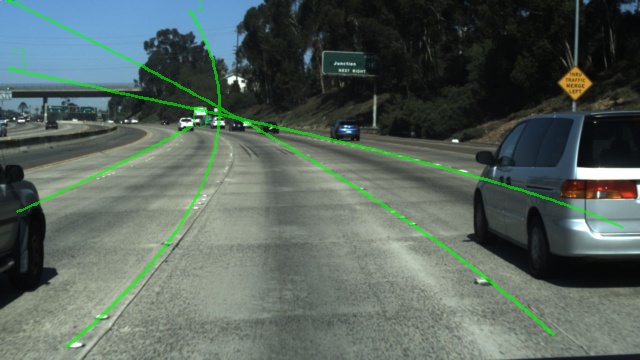

## Line segment detection

## Low-light image enhancement

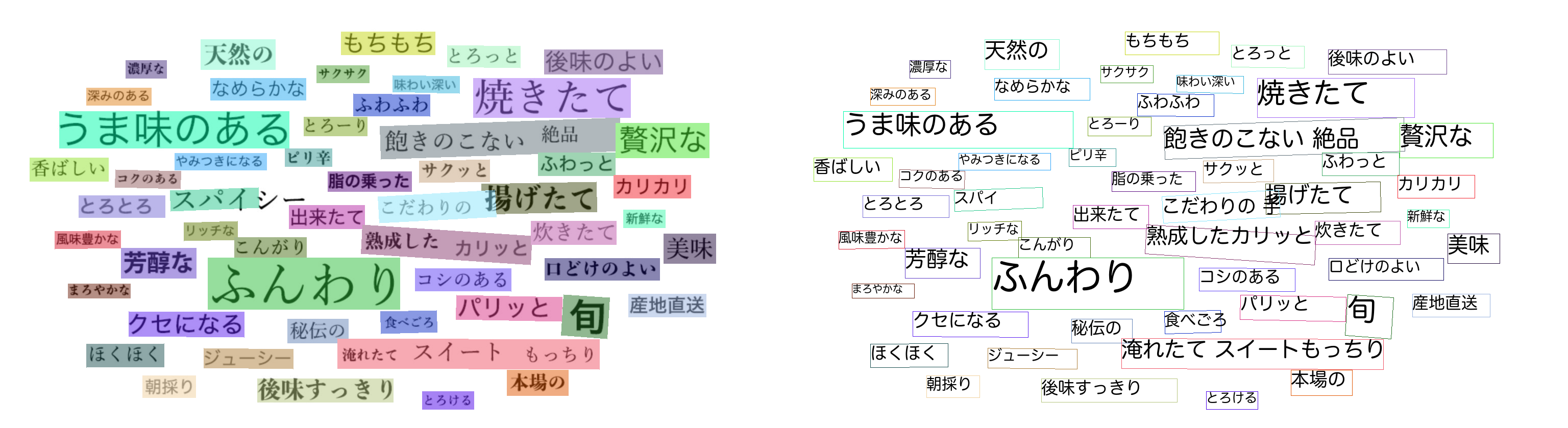

## Natural language processing

| Model | Reference | Exported From | Supported Ailia Version | Blog |
|------------:|:------------:|:------------:|:------------:|:------------:|
| [bert](/natural_language_processing/bert) | [pytorch-pretrained-bert](https://pypi.org/project/pytorch-pretrained-bert/) | Pytorch | 1.2.2 and later | [EN](https://medium.com/axinc-ai/bert-a-machine-learning-model-for-efficient-natural-language-processing-aef3081c24e8) [JP](https://medium.com/axinc/bert-%E8%87%AA%E7%84%B6%E8%A8%80%E8%AA%9E%E5%87%A6%E7%90%86%E3%82%92%E5%8A%B9%E7%8E%87%E7%9A%84%E3%81%AB%E5%AD%A6%E7%BF%92%E3%81%99%E3%82%8B%E6%A9%9F%E6%A2%B0%E5%AD%A6%E7%BF%92%E3%83%A2%E3%83%87%E3%83%AB-3a9c27d78cf8) |
| [bert_maskedlm](/natural_language_processing/bert_maskedlm) | [huggingface/transformers](https://github.com/huggingface/transformers) | Pytorch | 1.2.5 and later | |
| [bert_ner](/natural_language_processing/bert_ner) | [huggingface/transformers](https://github.com/huggingface/transformers) | Pytorch | 1.2.5 and later | |
| [bert_question_answering](/natural_language_processing/bert_question_answering) | [huggingface/transformers](https://github.com/huggingface/transformers) | Pytorch | 1.2.5 and later | |
| [bert_sentiment_analysis](/natural_language_processing/bert_sentiment_analysis) | [huggingface/transformers](https://github.com/huggingface/transformers) | Pytorch | 1.2.5 and later | |
| [bert_zero_shot_classification](/natural_language_processing/bert_zero_shot_classification) | [huggingface/transformers](https://github.com/huggingface/transformers) | Pytorch | 1.2.5 and later | |
| [bert_tweets_sentiment](/natural_language_processing/bert_tweets_sentiment) | [huggingface/transformers](https://github.com/huggingface/transformers) | Pytorch | 1.2.5 and later | |
| [gpt2](/natural_language_processing/gpt2) | [GPT-2](https://github.com/onnx/models/blob/master/text/machine_comprehension/gpt-2/README.md) | Pytorch | 1.2.7 and later | |
| [rinna_gpt2](/natural_language_processing/rinna_gpt2) | [japanese-pretrained-models](https://github.com/rinnakk/japanese-pretrained-models) | Pytorch | 1.2.7 and later | |
| [fugumt-en-ja](/natural_language_processing/fugumt-en-ja) | [Fugu-Machine Translator](https://github.com/s-taka/fugumt) | Pytorch | 1.2.9 and later | [JP](https://medium.com/axinc/fugumt-%E8%8B%B1%E8%AA%9E%E3%81%8B%E3%82%89%E6%97%A5%E6%9C%AC%E8%AA%9E%E3%81%B8%E3%81%AE%E7%BF%BB%E8%A8%B3%E3%82%92%E8%A1%8C%E3%81%86%E6%A9%9F%E6%A2%B0%E5%AD%A6%E7%BF%92%E3%83%A2%E3%83%87%E3%83%AB-46b839c1b4ae) |
| [fugumt-ja-en](/natural_language_processing/fugumt-ja-en) | [Fugu-Machine Translator](https://github.com/s-taka/fugumt) | Pytorch | 1.2.10 and later | |
| [bert_sum_ext](/natural_language_processing/bert_sum_ext) | [BERTSUMEXT](https://github.com/dmmiller612/bert-extractive-summarizer) | Pytorch | 1.2.7 and later | |
| [sentence_transformers_japanese](/natural_language_processing/sentence_transformers_japanese) | [sentence transformers](https://huggingface.co/sentence-transformers/paraphrase-multilingual-mpnet-base-v2) | Pytorch | 1.2.7 and later | [JP](https://medium.com/axinc/sentencetransformer-%E3%83%86%E3%82%AD%E3%82%B9%E3%83%88%E3%81%8B%E3%82%89embedding%E3%82%92%E5%8F%96%E5%BE%97%E3%81%99%E3%82%8B%E8%A8%80%E8%AA%9E%E5%87%A6%E7%90%86%E3%83%A2%E3%83%87%E3%83%AB-b7d2a9bb2c31) |
| [presumm](/natural_language_processing/presumm) | [PreSumm](https://github.com/nlpyang/PreSumm) | Pytorch | 1.2.8 and later | |
| [t5_base_japanese_title_generation](/natural_language_processing/t5_base_japanese_title_generation) | [t5-japanese](https://github.com/sonoisa/t5-japanese) | Pytorch | 1.2.13 and later | [JP](https://medium.com/axinc/t5-%E3%83%86%E3%82%AD%E3%82%B9%E3%83%88%E3%81%8B%E3%82%89%E3%83%86%E3%82%AD%E3%82%B9%E3%83%88%E3%82%92%E7%94%9F%E6%88%90%E3%81%99%E3%82%8B%E6%A9%9F%E6%A2%B0%E5%AD%A6%E7%BF%92%E3%83%A2%E3%83%87%E3%83%AB-602830bdc5b4) |
| [bertjsc](/natural_language_processing/bertjsc) | [bertjsc](https://github.com/er-ri/bertjsc) | Pytorch | 1.2.15 and later | |
| [multilingual-e5](/natural_language_processing/multilingual-e5) | [multilingual-e5-base](https://huggingface.co/intfloat/multilingual-e5-base) | Pytorch | 1.2.15 and later | [JP](https://medium.com/axinc/multilingual-e5-%E5%A4%9A%E8%A8%80%E8%AA%9E%E3%81%AE%E3%83%86%E3%82%AD%E3%82%B9%E3%83%88%E3%82%92embedding%E3%81%99%E3%82%8B%E6%A9%9F%E6%A2%B0%E5%AD%A6%E7%BF%92%E3%83%A2%E3%83%87%E3%83%AB-71f1dec7c4f0) |
| [bert_insert_punctuation](/natural_language_processing/bert_insert_punctuation) | [bert-japanese](https://github.com/cl-tohoku/bert-japanese) | Pytorch | 1.2.15 and later | |
| [t5_whisper_medical](/natural_language_processing/t5_whisper_medical) | Error correction of medical terms using t5 | Pytorch | 1.2.13 and later | |
| [t5_base_summarization](/natural_language_processing/t5_base_japanese_summarization) | [t5-japanese](https://github.com/sonoisa/t5-japanese) | Pytorch | 1.2.13 and later | |
| [glucose](/natural_language_processing/glucose) | [GLuCoSE (General Luke-based Contrastive Sentence Embedding)-base-Japanese](https://huggingface.co/pkshatech/GLuCoSE-base-ja) | Pytorch | 1.2.15 and later | |
| [cross_encoder_mmarco](/natural_language_processing/cross_encoder_mmarco) | [jeffwan/mmarco-mMiniLMv2-L12-H384-v](https://huggingface.co/jeffwan/mmarco-mMiniLMv2-L12-H384-v1) | Pytorch | 1.2.10 and later | [JP](https://medium.com/axinc/crossencodermmarco-%E8%B3%AA%E5%95%8F%E6%96%87%E3%81%A8%E5%9B%9E%E7%AD%94%E6%96%87%E3%81%AE%E9%A1%9E%E4%BC%BC%E5%BA%A6%E3%82%92%E8%A8%88%E7%AE%97%E3%81%99%E3%82%8B%E6%A9%9F%E6%A2%B0%E5%AD%A6%E7%BF%92%E3%83%A2%E3%83%87%E3%83%AB-c90b35e9fc09) |
| [soundchoice-g2p](/natural_language_processing/soundchoice-g2p) | [Hugging Face - speechbrain/soundchoice-g2p](https://huggingface.co/speechbrain/soundchoice-g2p) | Pytorch | 1.2.16 and later | |
| [g2p_en](/natural_language_processing/g2p_en) | [g2p_en](https://github.com/Kyubyong/g2p) | Pytorch | 1.2.14 and later | |
| [t5_base_japanese_ner](/natural_language_processing/t5_base_japanese_ner) | [t5-japanese](https://github.com/sonoisa/t5-japanese) | Pytorch | 1.2.13 and later | |
| [japanese-reranker-cross-encoder](/natural_language_processing/japanese-reranker-cross-encoder) | [hotchpotch/japanese-reranker-cross-encoder-large-v1](https://huggingface.co/hotchpotch/japanese-reranker-cross-encoder-large-v1) | Pytorch | 1.2.16 and later | |

## Network intrusion detection

| Model | Reference | Exported From | Supported Ailia Version | Blog |
|------------:|:------------:|:------------:|:------------:|:------------:|
| [bert-network-packet-flow-header-payload](/network_intrusion_detection/bert-network-packet-flow-header-payload/) | [bert-network-packet-flow-header-payload](https://huggingface.co/rdpahalavan/bert-network-packet-flow-header-payload) | Pytorch | 1.2.10 and later | |
| [falcon-adapter-network-packet](/network_intrusion_detection/falcon-adapter-network-packet/) | [falcon-adapter-network-packet](https://huggingface.co/rdpahalavan/falcon-adapter-network-packet) | Pytorch | 1.2.10 and later | |

## Neural rendering

| | Model | Reference | Exported From | Supported Ailia Version | Blog |
|:-----------|------------:|:------------:|:------------:|:------------:|:------------:|
| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/0f455f74-1f46-4bb6-a0b4-d2abb0164621.png" width=128px>](neural_rendering/nerf/) | [nerf](/neural_rendering/nerf/) | [NeRF: Neural Radiance Fields](https://github.com/bmild/nerf) | Tensorflow | 1.2.10 and later | [EN](https://medium.com/axinc-ai/nerf-machine-learning-model-to-generate-and-render-3d-models-from-multiple-viewpoint-images-599631dc2075) [JP](https://medium.com/axinc/nerf-%E8%A4%87%E6%95%B0%E3%81%AE%E8%A6%96%E7%82%B9%E3%81%AE%E7%94%BB%E5%83%8F%E3%81%8B%E3%82%893d%E3%83%A2%E3%83%87%E3%83%AB%E3%82%92%E7%94%9F%E6%88%90%E3%81%97%E3%81%A6%E3%83%AC%E3%83%B3%E3%83%80%E3%83%AA%E3%83%B3%E3%82%B0%E3%81%99%E3%82%8B%E6%A9%9F%E6%A2%B0%E5%AD%A6%E7%BF%92%E3%83%A2%E3%83%87%E3%83%AB-2d6bee7ff22f) |
| [<img src="https://yellow-cdn.veclightyear.com/35dd4d3f/e34e7237-6308-4e49-b2a9-ba04fbf94180.gif" width=128px>](neural_rendering/tripo_sr/) | [TripoSR](/neural_rendering/tripo_sr/) | [TripoSR](https://github.com/VAST-AI-Research/TripoSR) | Pytorch | 1.2.6 and later | |

## NSFW detector

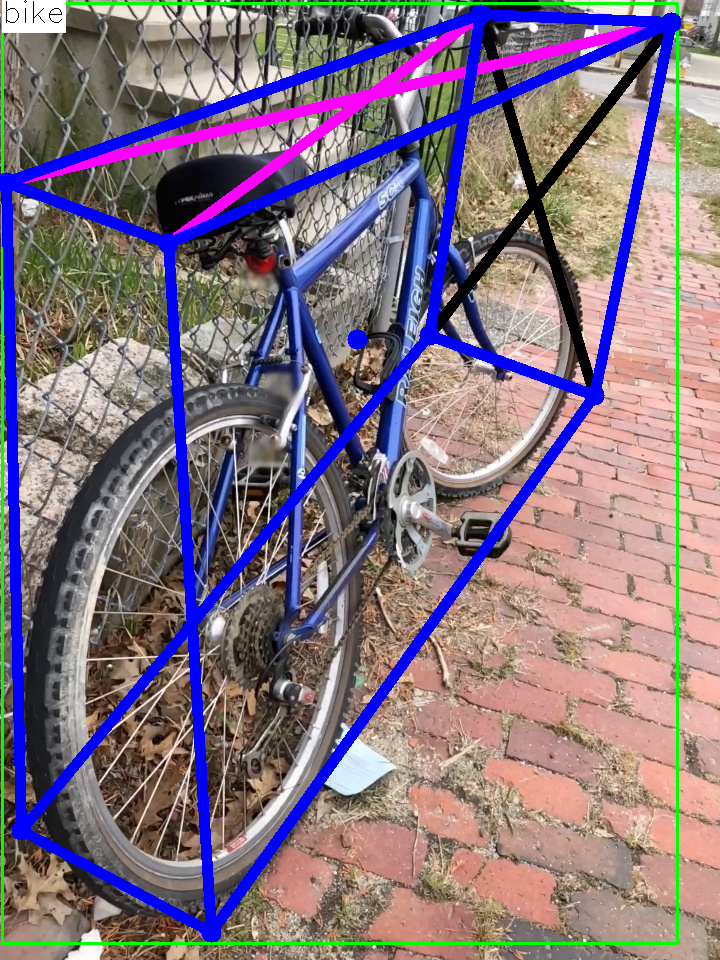

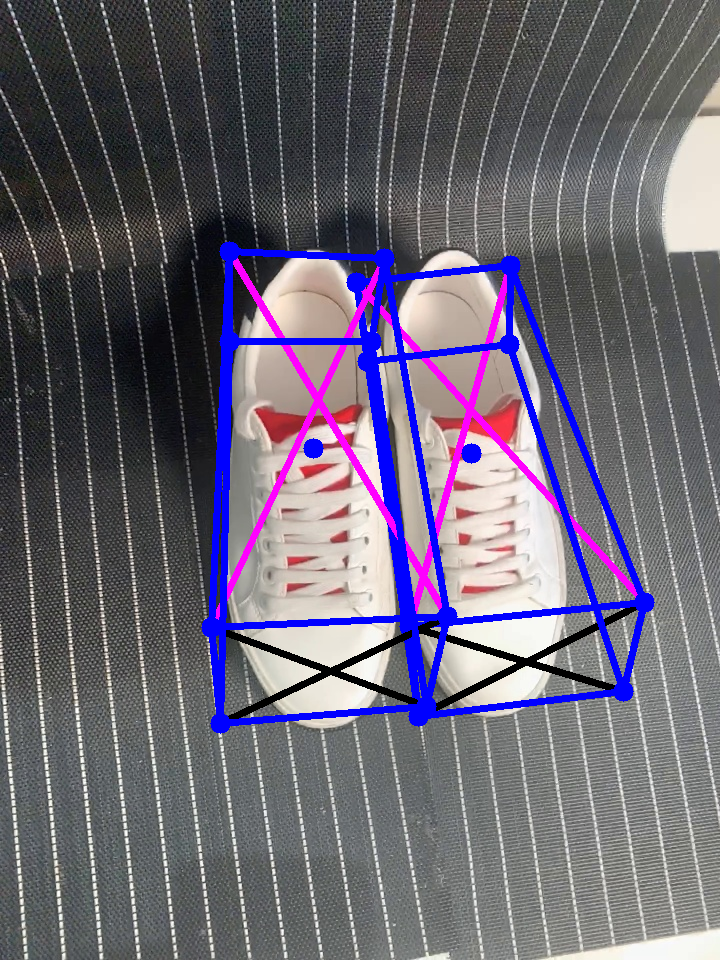

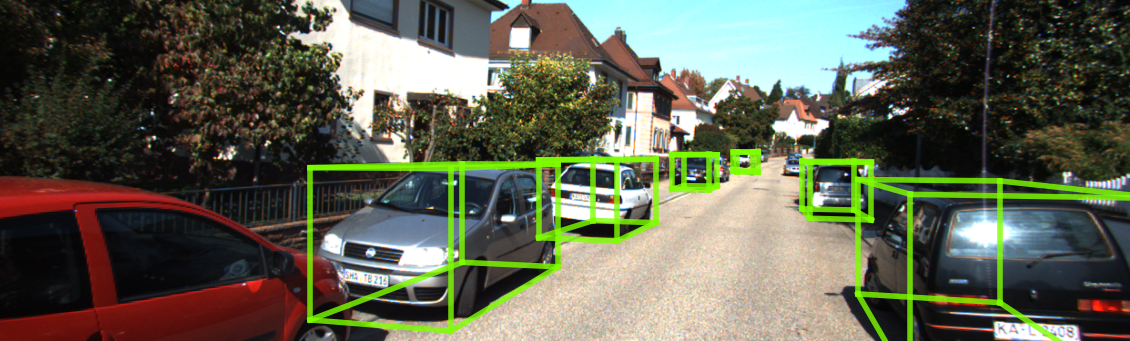

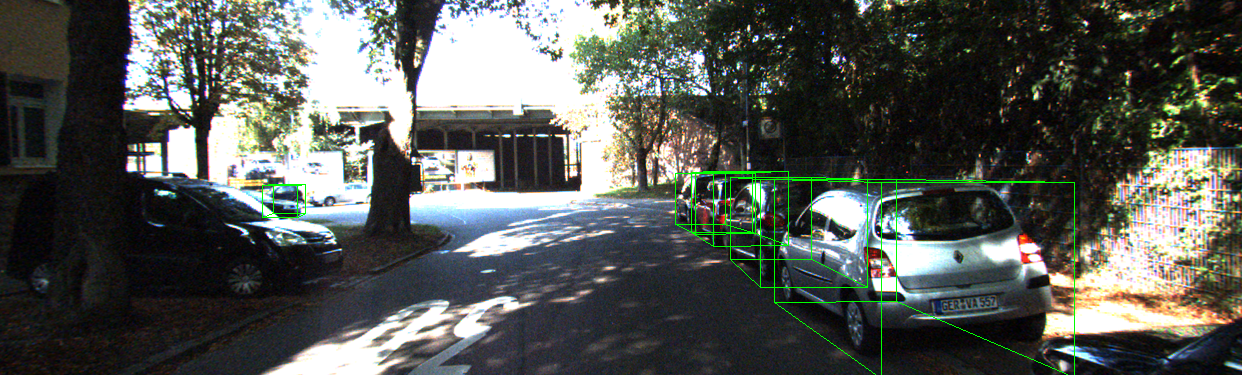

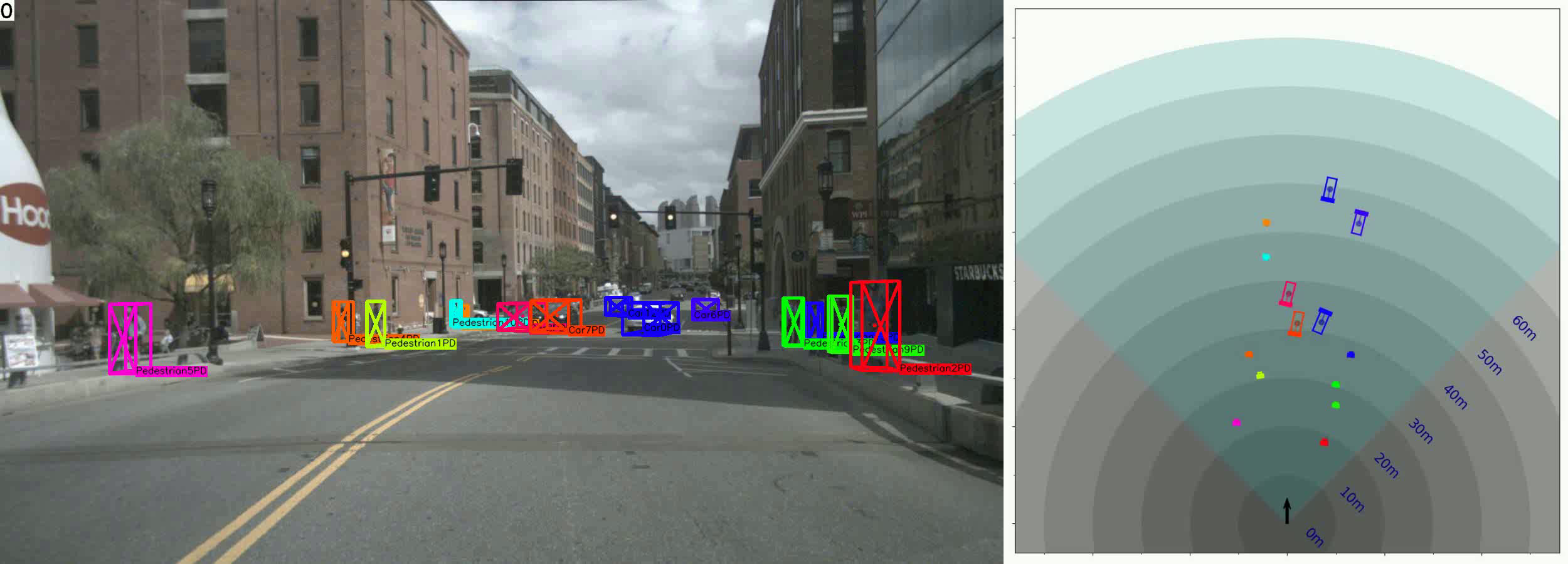

## Object detection 3D

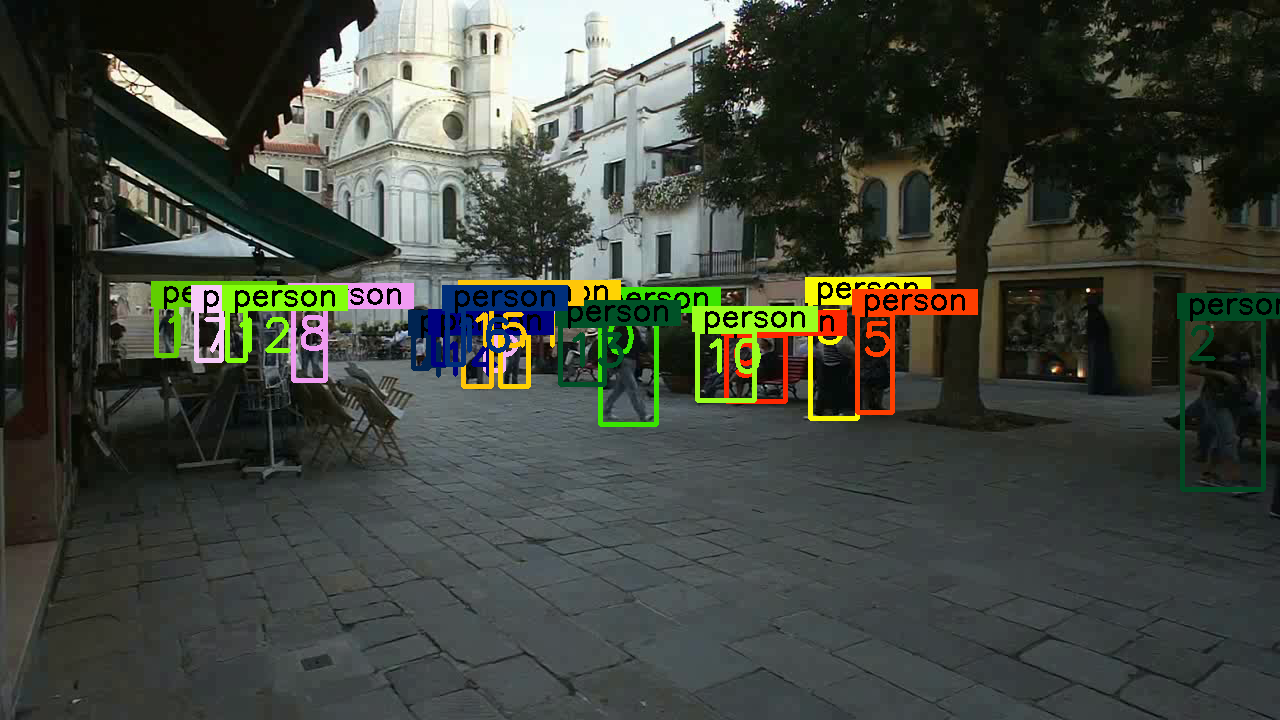

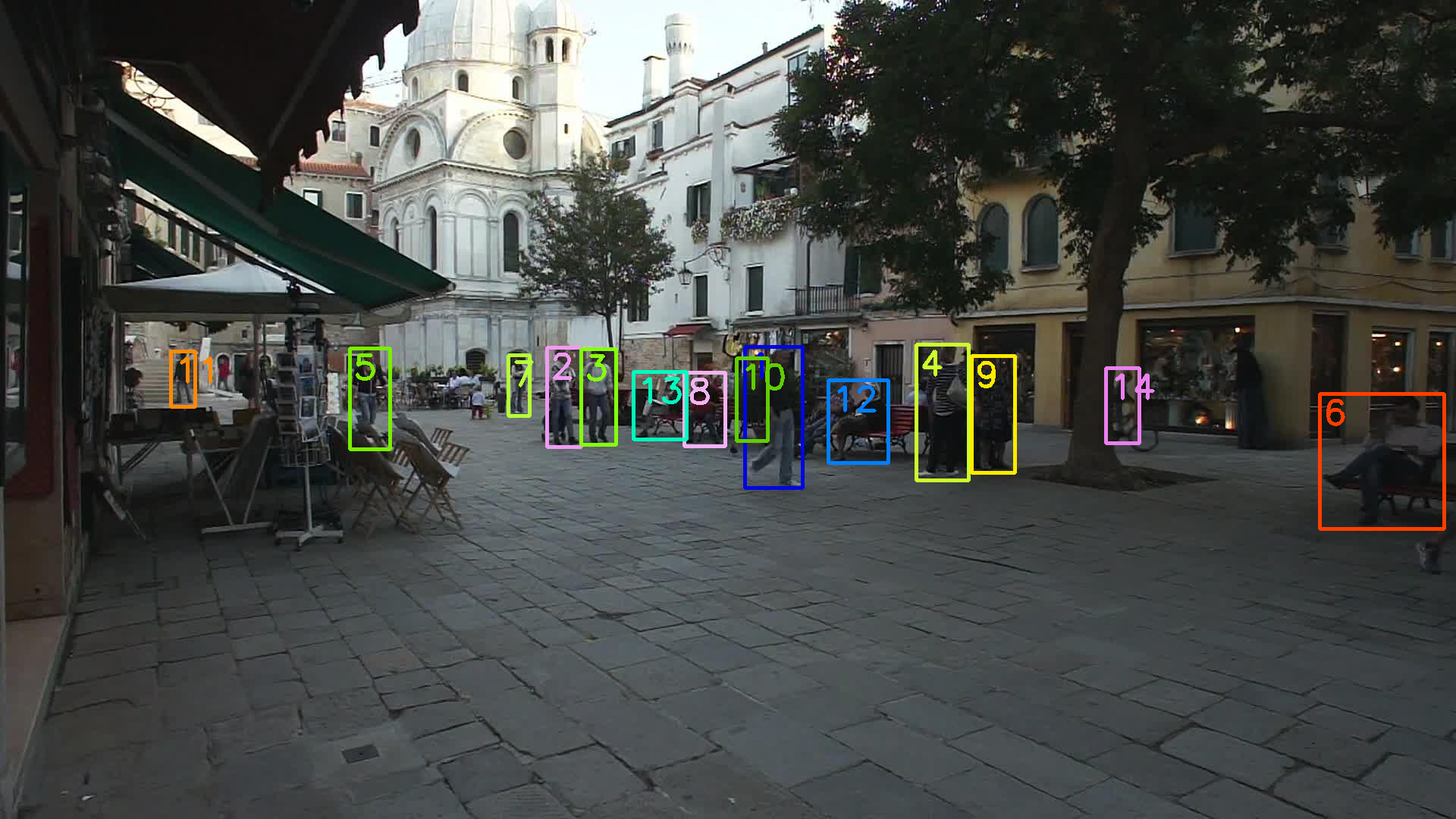

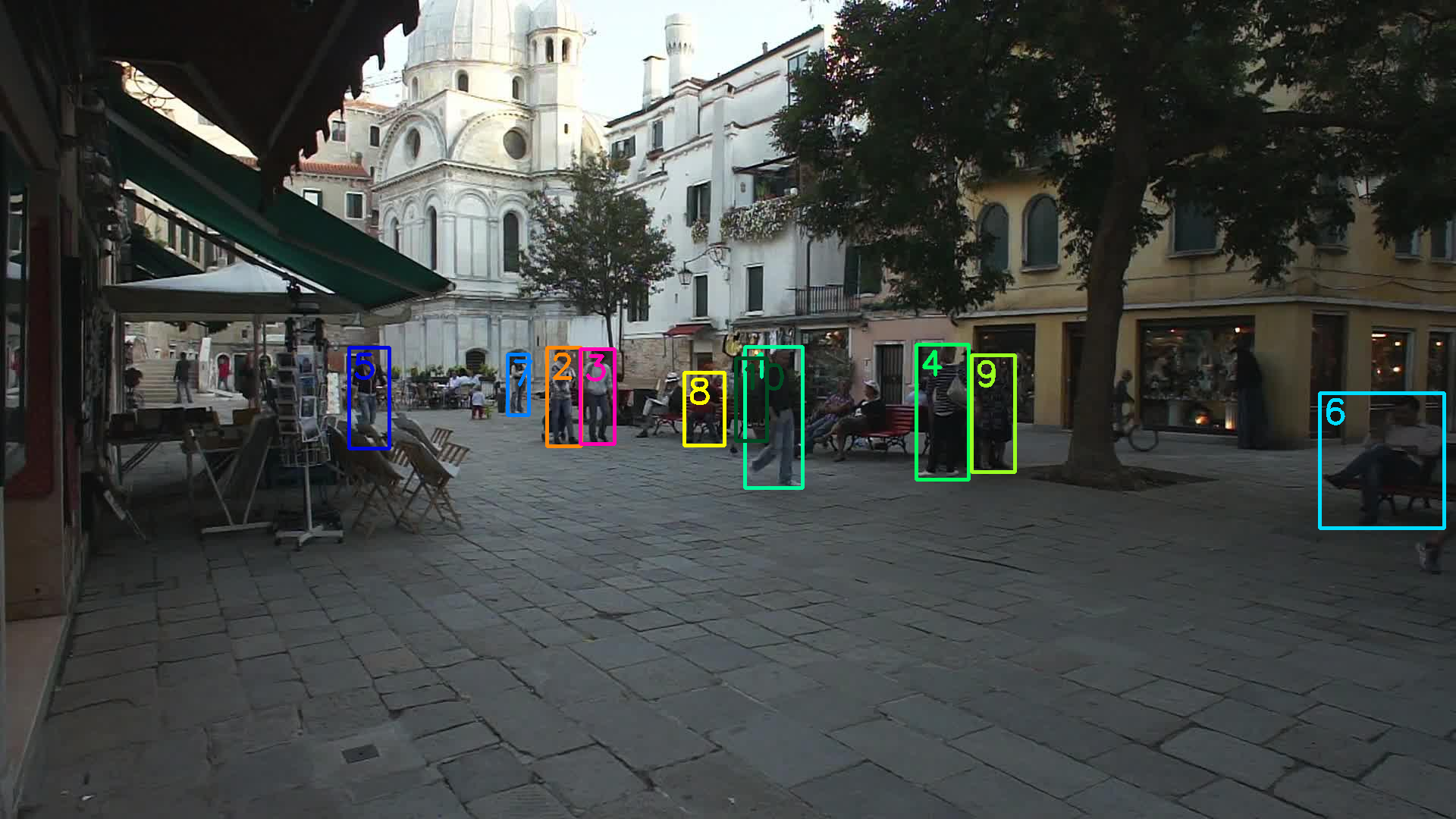

## Object tracking

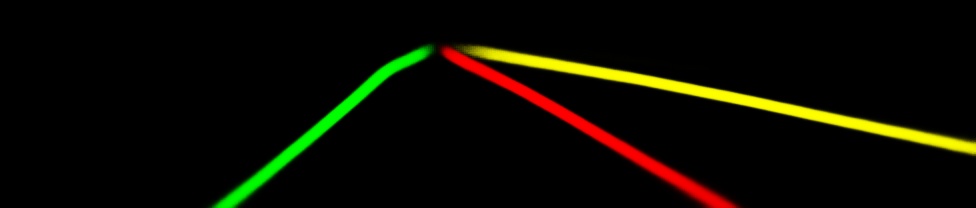

## Optical flow estimation

## Point cloud segmentation

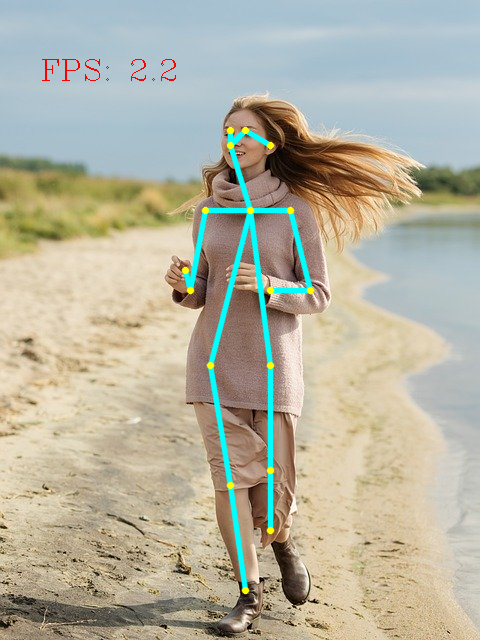

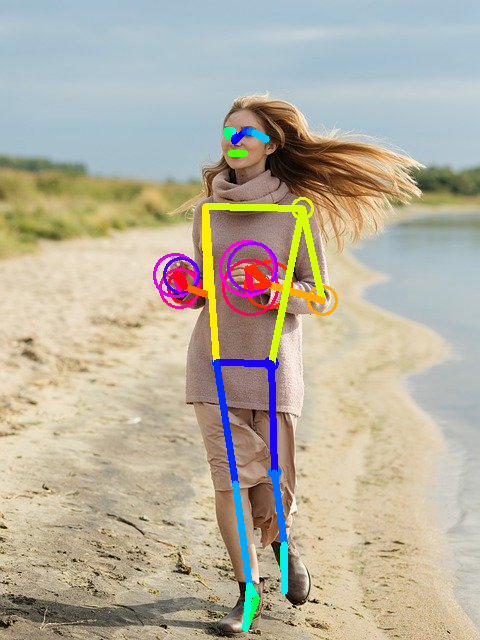

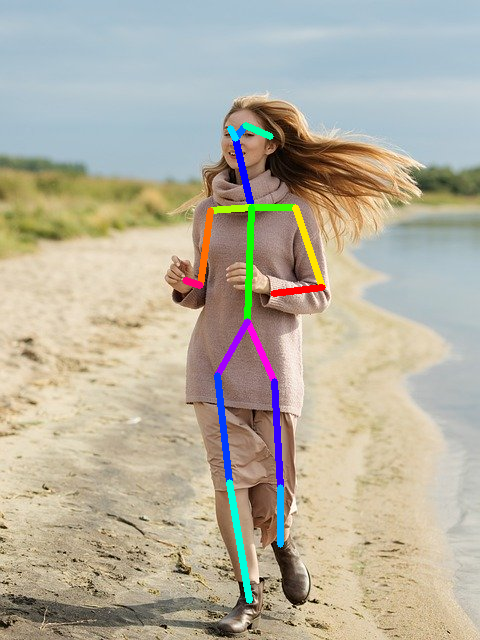

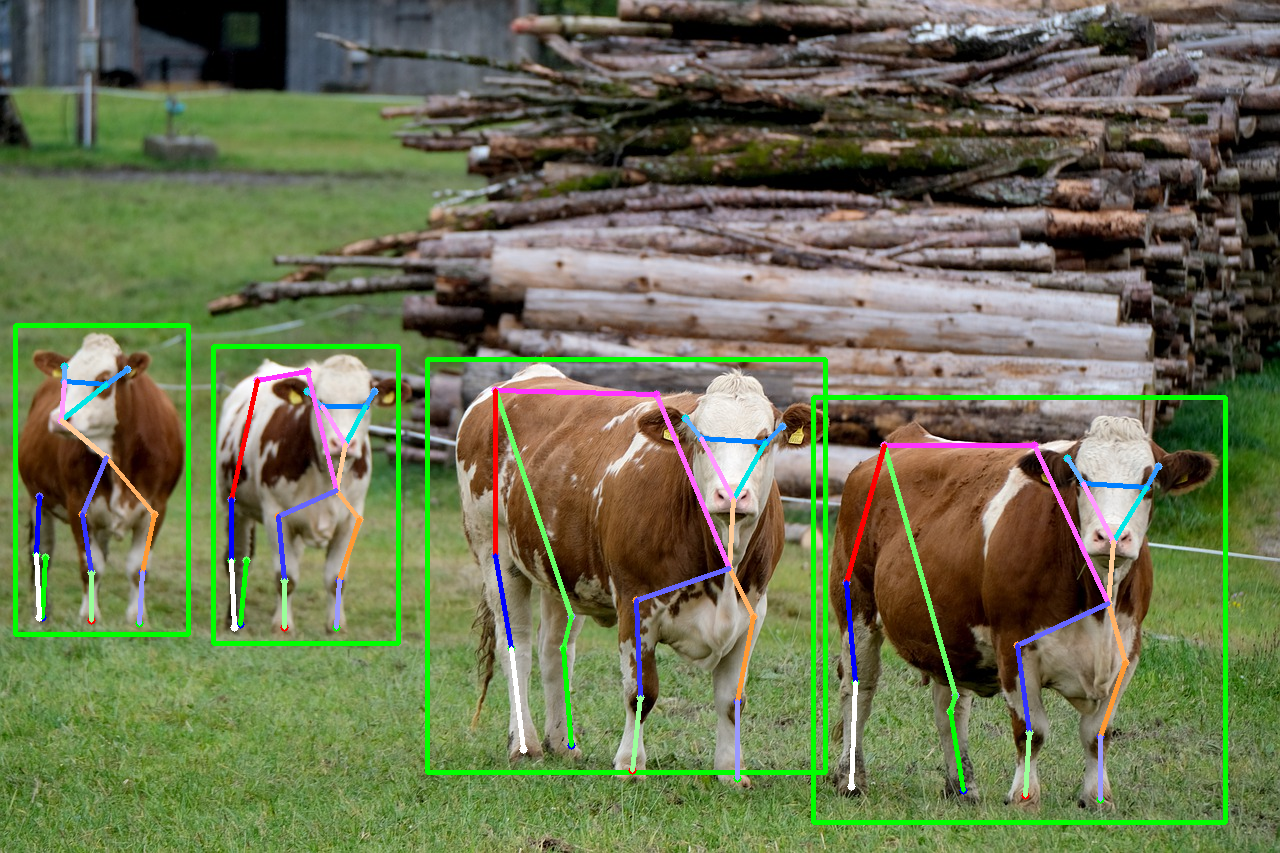

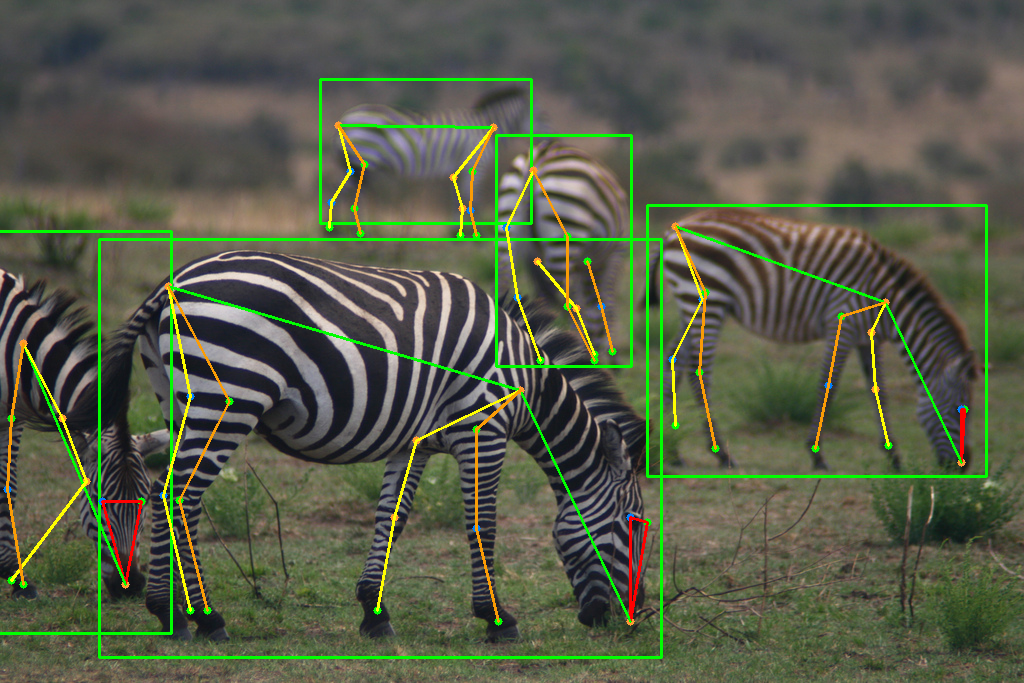

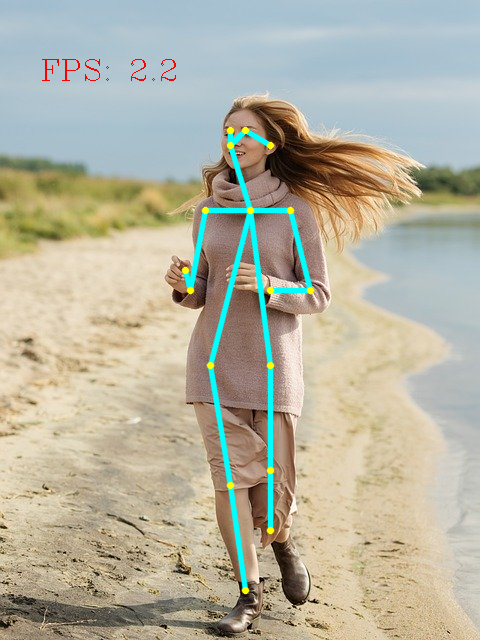

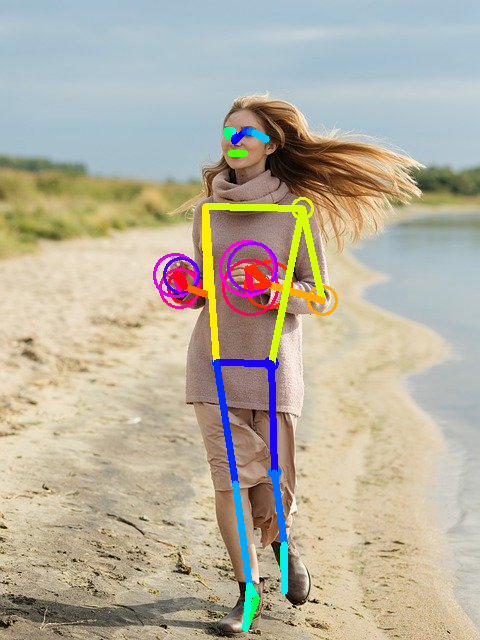

## Pose estimation

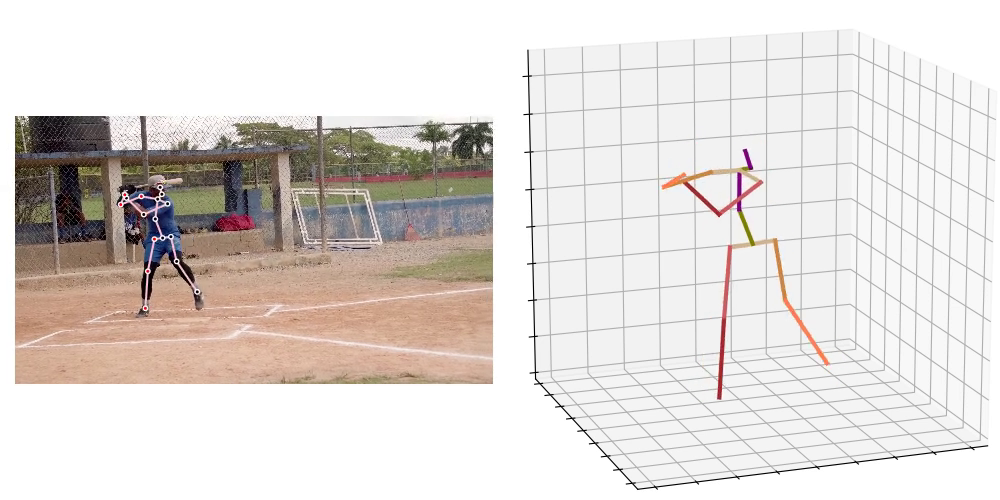

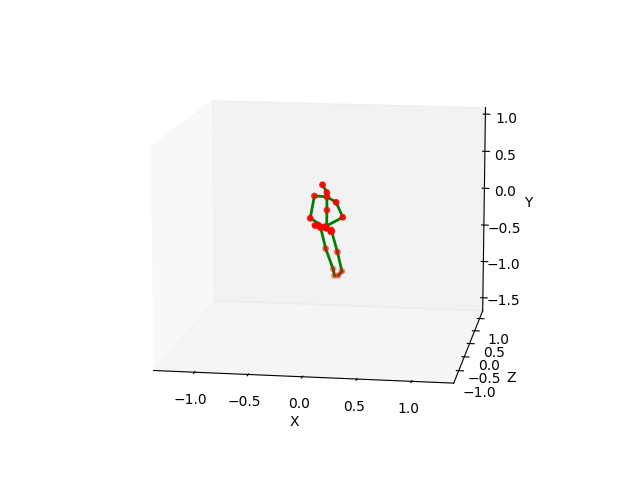

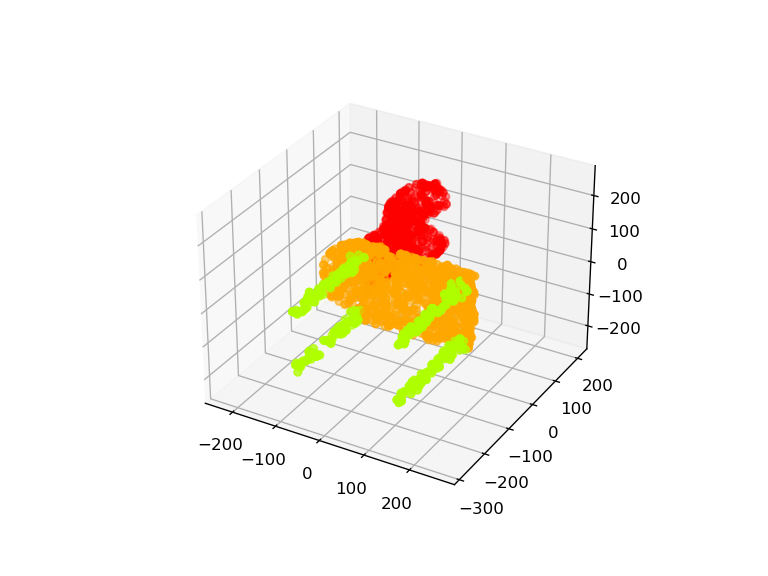

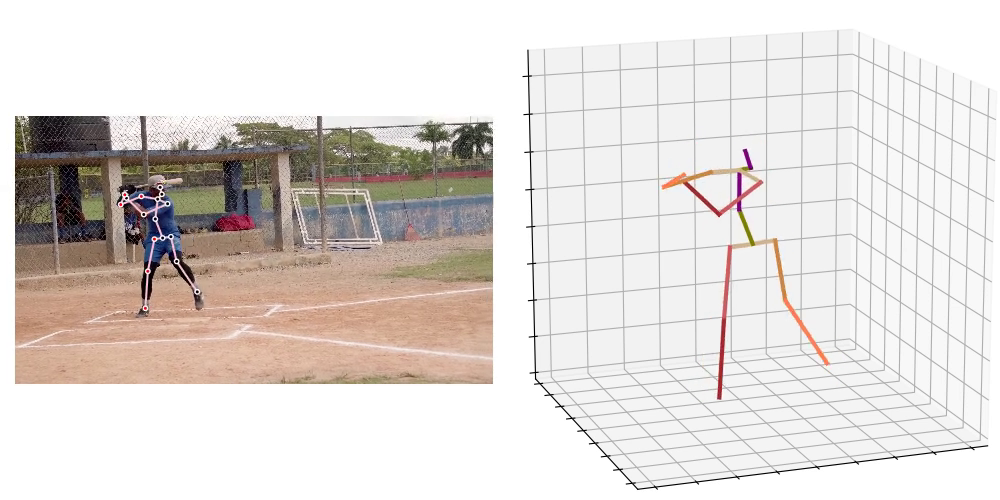

## Pose estimation 3D

| | Model | Reference | Exported From | Supported Ailia Version | Blog |
|:-----------|------------:|:------------:|:------------:|:------------:|:------------:|
| | lightweight-human-pose-estimation-3d | Real-time 3D multi-person pose estimation demo in PyTorch. The OpenVINO backend can be used for fast inference on CPU. | Pytorch | 1.2.1 and later | |
| | 3d-pose-baseline | A simple baseline for 3D human pose estimation in TensorFlow. Presented at ICCV 17. | TensorFlow | 1.2.3 and later | |
| | pose-hg-3d | Towards 3D Human Pose Estimation in the Wild: a Weakly-supervised Approach | Pytorch | 1.2.6 and later | |
| | blazepose-fullbody | MediaPipe | TensorFlow Lite | 1.2.5 and later | EN JP |
| | 3dmppe_posenet | PoseNet of "Camera Distance-aware Top-down Approach for 3D Multi-person Pose Estimation from a Single RGB Image" | Pytorch | 1.2.6 and later | |
| | gast | Graph Attention Spatio-temporal Convolutional Networks for 3D Human Pose Estimation in Video (GAST-Net) | Pytorch | 1.2.7 and later | EN JP |
| | mediapipe_pose_world_landmarks | MediaPipe Pose real-world 3D coordinates | TensorFlow Lite | 1.2.10 and later | |

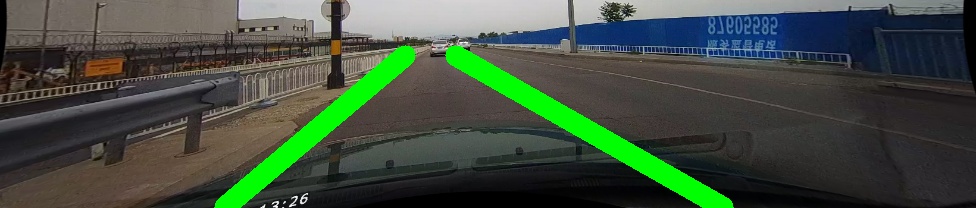

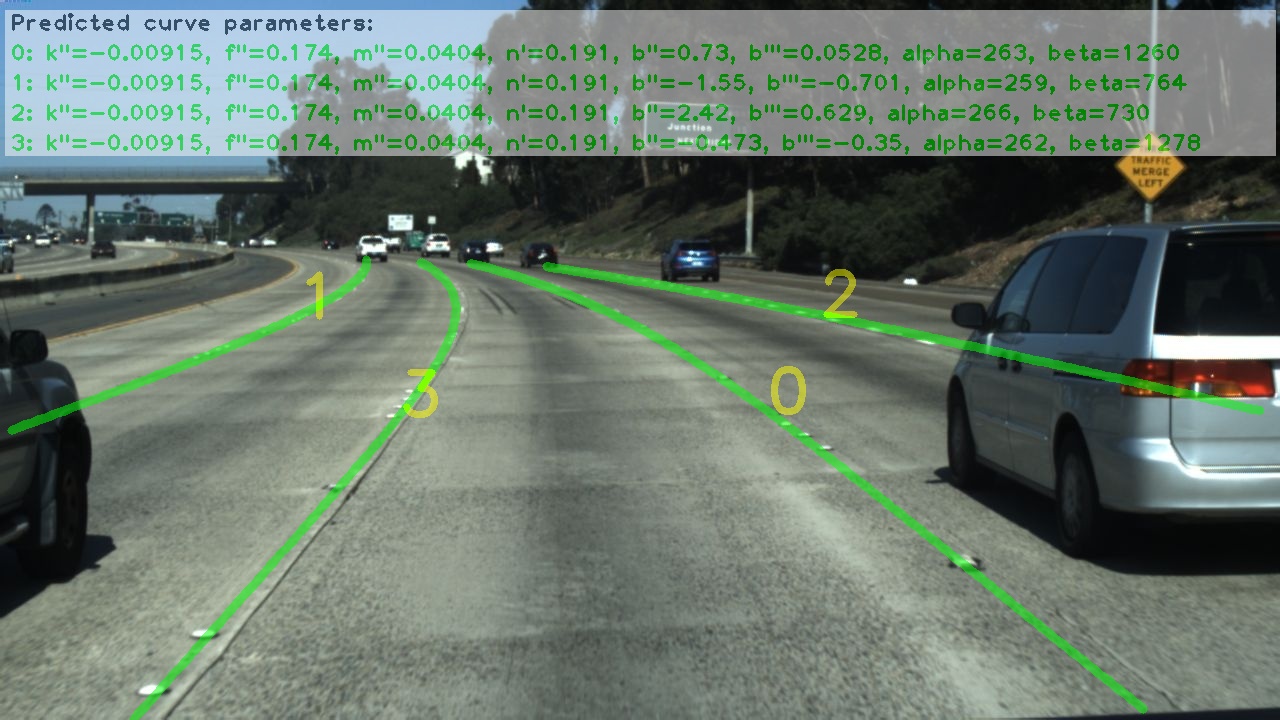

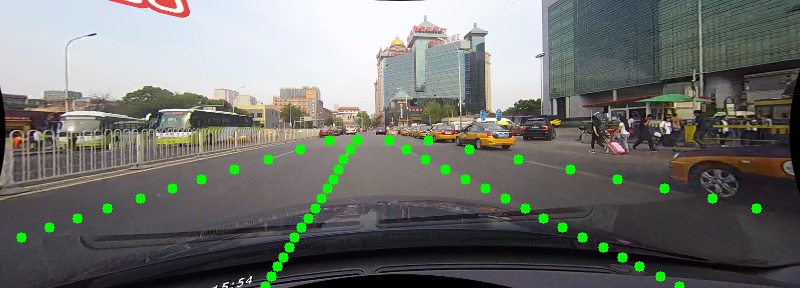

## Road detection

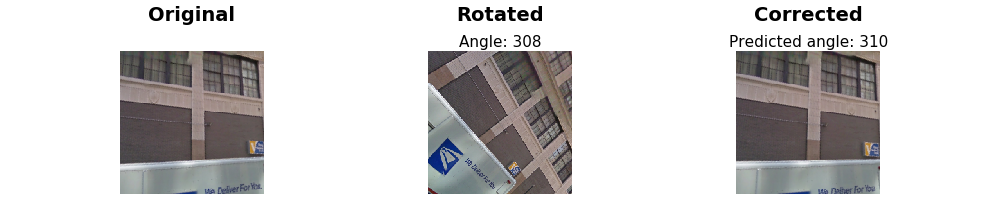

## Rotation prediction

## Style transfer

## Super resolution

## Text detection

## Text recognition

## Time-series forecasting

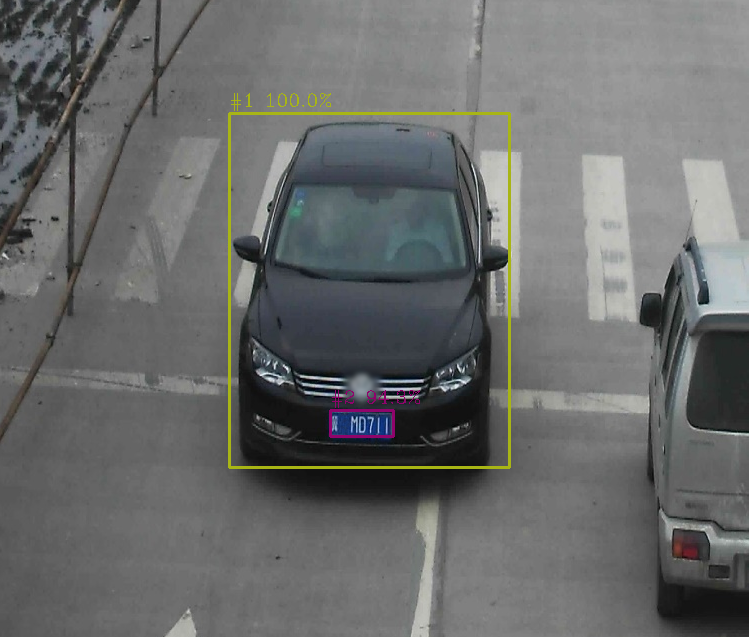

## Vehicle recognition

## Commercial model

## Other languages
