SymbolicAI

A Neuro-Symbolic Perspective on Large Language Models (LLMs)

Building applications with LLMs at the core using our Symbolic API facilitates the integration of classical and differentiable programming in Python.

Read the full paper here.

Read further documentation here.

Abstract

Conceptually, SymbolicAI is a framework that leverages machine learning – specifically LLMs – as its foundation, and composes operations based on task-specific prompting. We adopt a divide-and-conquer approach to break down a complex problem into smaller, more manageable problems. Consequently, each operation addresses a simpler task. By reassembling these operations, we can resolve the complex problem. Moreover, our design principles enable us to transition seamlessly between differentiable and classical programming, allowing us to harness the power of both paradigms.
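The following minimal sketch illustrates the divide-and-conquer idea in plain Python, without using SymbolicAI itself. The functions split_sentences, count_words, and total are hypothetical stand-ins for task-specific, prompt-based operations. The compose helper reassembles them so that the combined pipeline solves the larger task.

```python
from functools import reduce
from typing import Callable, List

# Each "operation" solves one small sub-task. In SymbolicAI these would be
# prompt-driven LLM calls; here they are plain Python stand-ins (hypothetical).
def split_sentences(text: str) -> List[str]:
    """Sub-task 1: break the input into sentences."""
    return [s.strip() for s in text.split(".") if s.strip()]

def count_words(sentences: List[str]) -> List[int]:
    """Sub-task 2: handle each sentence independently."""
    return [len(s.split()) for s in sentences]

def total(counts: List[int]) -> int:
    """Sub-task 3: combine the partial results."""
    return sum(counts)

def compose(*ops: Callable) -> Callable:
    """Chain operations left to right, feeding each output into the next."""
    return lambda x: reduce(lambda acc, op: op(acc), ops, x)

word_count = compose(split_sentences, count_words, total)
print(word_count("Divide the problem. Solve each part. Reassemble."))  # → 7
```

Replacing one stand-in with an LLM-backed operation would leave the composition logic unchanged. This separation is what lets simple operations be recombined into a solution for the complex problem.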

Tutorials

📖 Table of Contents

- SymbolicAI

- A Neuro-Symbolic Perspective on Large Language Models (LLMs)

- Abstract

- Tutorials

- 📖 Table of Contents

- 🔧 Get Started

- 🦖 Apps

- 🤷‍♂️ Why SymbolicAI?

- Tell me some more fun facts!

- How Does it Work?

- Operations

- Prompt Design

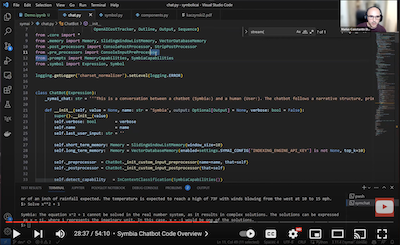

- 😑 Expressions

- ❌ Error Handling

- 🕷️ Interpretability, Testing & Debugging

- ▶️ Experiment with Our API

- 📈 Interface for Query and Response Inspection

- 🤖 Engines

- ⚡Limitations

- 🥠 Future Work

- Conclusion

- 👥 References, Related Work, and Credits

🔧 Get Started

➡️ Quick Install

pip install symbolicai

One can run our framework in two ways:

- using local engines (experimental) that run on your local machine (see the Local Neuro-Symbolic Engine section), or

- using engines powered by external APIs, e.g., OpenAI's API (see API Keys).

API Keys

Before the first run, define exports for the required API keys to enable the respective engines. This registers the keys internally for subsequent runs. By default, SymbolicAI currently uses OpenAI's neural engines, i.e., GPT-3 Davinci-003, DALL·E 2, and Embedding Ada-002, for neuro-symbolic computations, image generation, and embedding computation, respectively. However, these modules can easily be replaced with open-source alternatives, for example:

- OPT or Bloom for neuro-symbolic computations,

- Craiyon for image generation,

- and any BERT variants for semantic embedding computations.
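Swappable engines like these usually rely on callers programming against a common interface rather than a concrete provider. The following minimal sketch shows that idea. It assumes a hypothetical NeuroSymbolicEngine protocol with a single complete method, which is not SymbolicAI's actual engine API. EchoEngine and UpperEngine are toy backends standing in for, e.g., an OpenAI model and a local OPT or Bloom model.

```python
from typing import Protocol

class NeuroSymbolicEngine(Protocol):
    """Hypothetical interface: any backend that maps a prompt to text."""
    def complete(self, prompt: str) -> str: ...

class EchoEngine:
    """Toy backend standing in for one provider (hypothetical)."""
    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"

class UpperEngine:
    """A second toy backend, e.g. standing in for a local open-source model."""
    def complete(self, prompt: str) -> str:
        return prompt.upper()

def run(engine: NeuroSymbolicEngine, prompt: str) -> str:
    # The caller depends only on the interface, so backends can be swapped freely.
    return engine.complete(prompt)

for backend in (EchoEngine(), UpperEngine()):
    print(run(backend, "hello"))
```

The calling code in run stays the same whichever backend is passed in. Under this assumption, swapping engines reduces to supplying a different object.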

To set the OpenAI API key, use the command for your environment:

# Linux / MacOS

export OPENAI_API_KEY="<OPENAI_API_KEY>"

# Windows (PowerShell)

$Env:OPENAI_API_KEY="<OPENAI_API_KEY>"

# Jupyter Notebooks (important: do not use quotes)

%env OPENAI_API_KEY=<OPENAI_API_KEY>

To get started, import the library:

import symai as ai

Overall, the following engines are currently supported:

- Neuro-Symbolic Engine: OpenAI's LLMs (GPT-3, ChatGPT, and GPT-4 are supported), with llama.cpp as an experimental alternative for local models

- Embedding Engine: OpenAI's Embedding API

- [Optional] Symbolic Engine: WolframAlpha

- [Optional] Search Engine: SerpApi

- [Optional] OCR Engine: APILayer

- [Optional] SpeechToText Engine: OpenAI's Whisper

- [Optional] WebCrawler Engine: Selenium

- [Optional] Image Rendering Engine: DALL·E 2

- [Optional] Indexing Engine: Pinecone

- [Optional] CLIP Engine: 🤗 Hugging Face (experimental image and text embeddings)

[Optional] Installs

SymbolicAI uses multiple engines to process text, speech, and images, and it also includes search engine access to retrieve information from the web. To use all of them, you will also need to install the following dependencies or assign API keys to the respective engines.

If you want to use the WolframAlpha, Search, OCR, or Indexing engines, export the following API keys:

# Linux / MacOS

export SYMBOLIC_ENGINE_API_KEY="<WOLFRAMALPHA_API_KEY>"

export SEARCH_ENGINE_API_KEY="<SERP_API_KEY>"

export OCR_ENGINE_API_KEY="<APILAYER_API_KEY>"

export INDEXING_ENGINE_API_KEY="<PINECONE_API_KEY>"

# Windows (PowerShell)

$Env:SYMBOLIC_ENGINE_API_KEY="<WOLFRAMALPHA_API_KEY>"

$Env:SEARCH_ENGINE_API_KEY="<SERP_API_KEY>"

$Env:OCR_ENGINE_API_KEY="<APILAYER_API_KEY>"

$Env:INDEXING_ENGINE_API_KEY="<PINECONE_API_KEY>"
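Before importing symai, it can help to check which of these variables Python can actually see. The following is a small standard-library sketch. The variable names come from the export commands above, and missing_keys is a hypothetical helper that is not part of SymbolicAI.

```python
import os
from typing import Dict, List, Mapping, Optional

# Environment variable names taken from the export commands in this section.
ENGINE_KEYS: Dict[str, str] = {
    "OpenAI (neuro-symbolic, embeddings, images)": "OPENAI_API_KEY",
    "WolframAlpha (symbolic)": "SYMBOLIC_ENGINE_API_KEY",
    "SerpApi (search)": "SEARCH_ENGINE_API_KEY",
    "APILayer (OCR)": "OCR_ENGINE_API_KEY",
    "Pinecone (indexing)": "INDEXING_ENGINE_API_KEY",
}

def missing_keys(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the variable names that are unset or empty (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [var for var in ENGINE_KEYS.values() if not env.get(var)]

if __name__ == "__main__":
    for var in missing_keys():
        print(f"not set: {var}")
```

Only the OpenAI key is needed for the default setup. The other variables matter only if you plan to use the corresponding optional engines.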

To use the optional engines, install the respective extras:

pip install "symbolicai[wolframalpha]"

pip install "symbolicai[whisper]"

pip install "symbolicai[selenium]"

pip install "symbolicai[serpapi]"

pip install "symbolicai[pinecone]"

Or, install all optional dependencies at once:

pip install "symbolicai[all]"

[Note] Additionally, you need to install the respective codecs.

- SpeechToText Engine: ffmpeg for audio processing (based on OpenAI's Whisper)

# Linux

sudo apt update && sudo apt install ffmpeg

# MacOS

brew install ffmpeg

# Windows

choco install ffmpeg

- WebCrawler Engine: For selenium, we automatically install the driver with chromedriver-autoinstaller. Currently, only Chrome is supported as the default browser.
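Whisper calls the ffmpeg executable to decode audio, so ffmpeg must be on your PATH. The following is a quick standard-library check using shutil.which. find_tools is a hypothetical helper, not part of SymbolicAI.

```python
import shutil
from typing import Dict, Iterable, Optional

def find_tools(names: Iterable[str] = ("ffmpeg",)) -> Dict[str, Optional[str]]:
    """Map each executable name to its full path on PATH, or None if absent."""
    return {name: shutil.which(name) for name in names}

if __name__ == "__main__":
    for tool, path in find_tools().items():
        print(f"{tool}: {path or 'not found on PATH'}")
```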

Alternatively, you can place a symai.config.json file with all engine properties in your project path. This file replaces the environment variables. See the following configuration file as an example:

{
  "NEUROSYMBOLIC_ENGINE_API_KEY": "<OPENAI_API_KEY>",
  "NEUROSYMBOLIC_ENGINE_MODEL": "text-davinci-003",
  "SYMBOLIC_ENGINE_API_KEY": "<WOLFRAMALPHA_API_KEY>",
  "EMBEDDING_ENGINE_API_KEY": "<OPENAI_API_KEY>",
  "EMBEDDING_ENGINE_MODEL": "text-embedding-ada-002",
  "IMAGERENDERING_ENGINE_API_KEY": "<OPENAI_API_KEY>",
  "VISION_ENGINE_MODEL": "openai/clip-vit-base-patch32",
  "SEARCH_ENGINE_API_KEY": "<SERP_API_KEY>",
  "SEARCH_ENGINE_MODEL": "google",
  "OCR_ENGINE_API_KEY": "<APILAYER_API_KEY>",
  "SPEECH_TO_TEXT_ENGINE_MODEL": "base",
  "TEXT_TO_SPEECH_ENGINE_MODEL": "tts-1",
  "INDEXING_ENGINE_API_KEY": "<PINECONE_API_KEY>",
  "INDEXING_ENGINE_ENVIRONMENT": "us-west1-gcp",
  "COLLECTION_DB": "ExtensityAI",
  "COLLECTION_STORAGE": "SymbolicAI",
  "SUPPORT_COMMUNITY": false
}
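Because the file is JSON, booleans must be written in lowercase (false, not Python's False). One way to avoid such mistakes is to generate the file from Python with the standard json module. The following is a minimal sketch that shows only a subset of the keys, with placeholder values. write_config is a hypothetical helper.

```python
import json
from pathlib import Path

# A subset of the keys from the example above; values are placeholders.
config = {
    "NEUROSYMBOLIC_ENGINE_API_KEY": "<OPENAI_API_KEY>",
    "NEUROSYMBOLIC_ENGINE_MODEL": "text-davinci-003",
    "EMBEDDING_ENGINE_API_KEY": "<OPENAI_API_KEY>",
    "EMBEDDING_ENGINE_MODEL": "text-embedding-ada-002",
    "SUPPORT_COMMUNITY": False,  # json.dumps emits this as lowercase false
}

def write_config(path: Path, cfg: dict) -> None:
    """Serialize cfg as valid JSON to path (e.g. ./symai.config.json)."""
    path.write_text(json.dumps(cfg, indent=2))

# Preview the JSON text without touching the file system.
print(json.dumps(config, indent=2))
```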

[NOTE]: Our framework allows you to support us in training models for local usage by enabling the data collection feature. On application startup, we show the terms of service, and you can activate or disable this community feature. We do not share or sell your data to third parties and only use it for research purposes and to improve your user experience. To change this setting, you can either enable or disable community support when prompted by our setup wizard, or open the symai.config.json file located in the .symai folder of your home directory (i.e., ~/.symai/symai.config.json) and set the SUPPORT_COMMUNITY property to true/false there (or via the respective environment variable).

[NOTE]: By default, user warnings are enabled. To disable them, export SYMAI_WARNINGS=0 in your environment variables.
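Editing the setting by hand is straightforward. The following sketch does the same with the standard library, keeping every other key in the file. It assumes the file already exists at ~/.symai/symai.config.json, as described in the note. with_support_community and set_support_community are hypothetical helpers, not SymbolicAI functions.

```python
import json
from pathlib import Path
from typing import Optional

# Location named in the note above.
DEFAULT_PATH = Path.home() / ".symai" / "symai.config.json"

def with_support_community(config_text: str, enabled: bool) -> str:
    """Return the JSON text with SUPPORT_COMMUNITY set; other keys are kept."""
    cfg = json.loads(config_text)
    cfg["SUPPORT_COMMUNITY"] = enabled
    return json.dumps(cfg, indent=2)

def set_support_community(enabled: bool, path: Optional[Path] = None) -> None:
    """Rewrite an existing config file in place (raises FileNotFoundError if missing)."""
    path = path or DEFAULT_PATH
    path.write_text(with_support_community(path.read_text(), enabled))
```

The pure function does not touch the file system, so the JSON transformation can be checked on its own. The wrapper only handles reading and writing the file.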

🦖 Apps

We provide a set of useful tools that demonstrate how to interact with our framework and enable package management. You can access these apps by calling the sym+<shortcut-name-of-app> command in your terminal or PowerShell.

Shell Command Tool

The Shell Command Tool is a basic shell command support tool that translates natural language commands into shell commands. To start the Shell Command Tool, simply run:

symsh
