用于表格数据生成的GAN、TimeGAN、扩散模型和大型语言模型

生成对抗网络以其在生成逼真图像方面的成功而闻名。然而,它们也可以用于生成表格数据。这里将给你机会尝试其中的一些方法。

生成对抗网络以其在生成逼真图像方面的成功而闻名。然而,它们也可以用于生成表格数据。这里将给你机会尝试其中的一些方法。

- Arxiv文章:"用于不均匀分布的表格GAN"

- Medium文章:用于表格数据的GAN

如何使用库

- 安装:

pip install tabgan - 要通过采样然后通过对抗训练进行过滤来生成新的训练数据,调用

GANGenerator().generate_data_pipe:

from tabgan.sampler import OriginalGenerator, GANGenerator, ForestDiffusionGenerator, LLMGenerator

import pandas as pd

import numpy as np

# 随机输入数据

train = pd.DataFrame(np.random.randint(-10, 150, size=(150, 4)), columns=list("ABCD"))

target = pd.DataFrame(np.random.randint(0, 2, size=(150, 1)), columns=list("Y"))

test = pd.DataFrame(np.random.randint(0, 100, size=(100, 4)), columns=list("ABCD"))

# 生成数据

new_train1, new_target1 = OriginalGenerator().generate_data_pipe(train, target, test, )

new_train2, new_target2 = GANGenerator(gen_params={"batch_size": 500, "epochs": 10, "patience": 5 }).generate_data_pipe(train, target, test, )

new_train3, new_target3 = ForestDiffusionGenerator().generate_data_pipe(train, target, test, )

new_train4, new_target4 = LLMGenerator(gen_params={"batch_size": 32,

"epochs": 4, "llm": "distilgpt2", "max_length": 500}).generate_data_pipe(train, target, test, )

# 定义所有参数的示例

new_train4, new_target4 = GANGenerator(gen_x_times=1.1, cat_cols=None,

bot_filter_quantile=0.001, top_filter_quantile=0.999, is_post_process=True,

adversarial_model_params={

"metrics": "AUC", "max_depth": 2, "max_bin": 100,

"learning_rate": 0.02, "random_state": 42, "n_estimators": 100,

}, pregeneration_frac=2, only_generated_data=False,

gen_params = {"batch_size": 500, "patience": 25, "epochs" : 500,}).generate_data_pipe(train, target,

test, deep_copy=True, only_adversarial=False, use_adversarial=True)

所有采样器OriginalGenerator、ForestDiffusionGenerator、LLMGenerator和GANGenerator都具有相同的输入参数。

- GANGenerator基于CTGAN

- ForestDiffusionGenerator基于Forest Diffusion (表格扩散和流匹配)

- LLMGenerator基于语言模型是真实的表格数据生成器(GReaT框架)

- gen_x_times: float = 1.1 - 生成多少数据,由于后处理和对抗过滤,输出可能会更少

- cat_cols: list = None - 分类列

- bot_filter_quantile: float = 0.001 - 后处理过滤的底部分位数

- top_filter_quantile: float = 0.999 - 后处理过滤的顶部分位数

- is_post_process: bool = True - 是否执行后处理过滤,如果为false则忽略bot_filter_quantile和top_filter_quantile

- adversarial_model_params: 对抗过滤模型的字典参数,二元任务的默认值

- pregeneration_frac: float = 2 - 对于生成步骤,将生成gen_x_times * pregeneration_frac数量的数据。但在后处理中将返回原始数据的(1 + gen_x_times)%

- gen_params: GAN训练的字典参数

generate_data_pipe方法的参数:

- train_df: pd.DataFrame 具有单独目标的训练数据框

- target: pd.DataFrame 训练数据集的输入目标

- test_df: pd.DataFrame 测试数据框 - 新生成的训练数据框应接近它

- deep_copy: bool = True - 是否复制输入文件。如果不复制,输入数据框将被覆盖

- only_adversarial: bool = False - 只对训练数据框执行对抗过滤

- use_adversarial: bool = True - 是否执行对抗过滤

- only_generated_data: bool = False - 生成后只获取新生成的数据,不与输入训练数据框连接

- @return: -> Tuple[pd.DataFrame, pd.DataFrame] - 新生成的训练数据框和测试数据

因此,你可以使用这个库来提高你的数据集质量:

def fit_predict(clf, X_train, y_train, X_test, y_test):

clf.fit(X_train, y_train)

return sklearn.metrics.roc_auc_score(y_test, clf.predict_proba(X_test)[:, 1])

dataset = sklearn.datasets.load_breast_cancer()

clf = sklearn.ensemble.RandomForestClassifier(n_estimators=25, max_depth=6)

X_train, X_test, y_train, y_test = sklearn.model_selection.train_test_split(

pd.DataFrame(dataset.data), pd.DataFrame(dataset.target, columns=["target"]), test_size=0.33, random_state=42)

print("初始指标", fit_predict(clf, X_train, y_train, X_test, y_test))

new_train1, new_target1 = OriginalGenerator().generate_data_pipe(X_train, y_train, X_test, )

print("OriginalGenerator指标", fit_predict(clf, new_train1, new_target1, X_test, y_test))

new_train1, new_target1 = GANGenerator().generate_data_pipe(X_train, y_train, X_test, )

print("GANGenerator指标", fit_predict(clf, new_train1, new_target1, X_test, y_test))

时间序列GAN生成 TimeGAN

你可以轻松调整代码以生成多维时间序列数据。 基本上它从_date_中提取天、月和年。以下示例演示如何使用:

import pandas as pd

import numpy as np

from tabgan.utils import get_year_mnth_dt_from_date,make_two_digit,collect_dates

from tabgan.sampler import OriginalGenerator, GANGenerator

train_size = 100

train = pd.DataFrame(

np.random.randint(-10, 150, size=(train_size, 4)), columns=list("ABCD")

)

min_date = pd.to_datetime('2019-01-01')

max_date = pd.to_datetime('2021-12-31')

d = (max_date - min_date).days + 1

train['Date'] = min_date + pd.to_timedelta(pd.np.random.randint(d, size=train_size), unit='d')

train = get_year_mnth_dt_from_date(train, 'Date')

new_train, new_target = GANGenerator(gen_x_times=1.1, cat_cols=['year'], bot_filter_quantile=0.001,

top_filter_quantile=0.999,

is_post_process=True, pregeneration_frac=2, only_generated_data=False).

generate_data_pipe(train.drop('Date', axis=1), None,

train.drop('Date', axis=1)

)

new_train = collect_dates(new_train)

实验

数据集和实验设计

检查数据生成质量 只需使用内置函数

compare_dataframes(original_df, generated_df) # 返回0到1之间的值

运行实验

按照以下步骤运行实验:

- 克隆仓库。所有所需的数据集都存储在

./Research/data文件夹中 - 安装依赖

pip install -r requirements.txt - 运行所有实验

python ./Research/run_experiment.py。运行所有实验python run_experiment.py。你可以添加更多数据集,调整验证类型和分类编码器。 - 在控制台或

./Research/results/fit_predict_scores.txt中查看所有实验的指标

实验设计

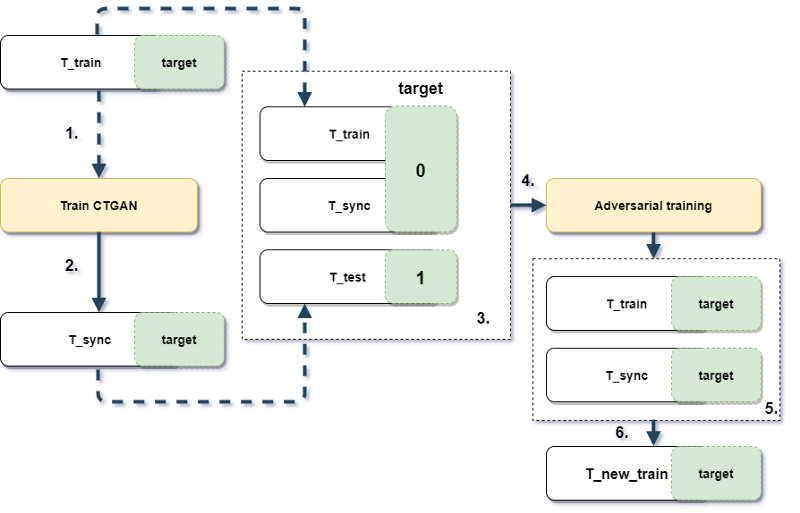

图1.1 实验设计和工作流程

结果

为确定最佳采样策略,对每个数据集的ROC AUC分数进行缩放(最小-最大缩放),然后在数据集间取平均值。

表1.2 不同数据集的采样结果,越高越好(100% - 每个数据集的最大ROC AUC)

| 数据集名称 | 无 | GAN | 原始采样 |

|---|---|---|---|

| credit | 0.997 | 0.998 | 0.997 |

| employee | 0.986 | 0.966 | 0.972 |

| mortgages | 0.984 | 0.964 | 0.988 |

| poverty_A | 0.937 | 0.950 | 0.933 |

| taxi | 0.966 | 0.938 | 0.987 |

| adult | 0.995 | 0.967 | 0.998 |

引用

如果您在科学出版物中使用GAN-for-tabular-data,我们将感谢您引用以下BibTex条目: arxiv出版物:

@misc{ashrapov2020tabular,

title={Tabular GANs for uneven distribution},

author={Insaf Ashrapov},

year={2020},

eprint={2010.00638},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

参考文献

[1] Lei Xu LIDS, Kalyan Veeramachaneni. Synthesizing Tabular Data using Generative Adversarial Networks (2018). arXiv: 1811.11264v1 [cs.LG]

[2] Alexia Jolicoeur-Martineau and Kilian Fatras and Tal Kachman. Generating and Imputing Tabular Data via Diffusion and Flow-based Gradient-Boosted Trees ((2023) https://github.com/SamsungSAILMontreal/ForestDiffusion [cs.LG]

[3] Lei Xu, Maria Skoularidou, Alfredo Cuesta-Infante, Kalyan Veeramachaneni. Modeling Tabular data using Conditional GAN. NeurIPS, (2019)

[4] Vadim Borisov and Kathrin Sessler and Tobias Leemann and Martin Pawelczyk and Gjergji Kasneci. Language Models are Realistic Tabular Data Generators. ICLR, (2023)

Github

Github 文档

文档 论文

论文