Scattertext 0.2.1

A tool for finding distinguishing terms in corpora and displaying them in an interactive HTML scatter plot. Points corresponding to terms are selectively labeled so that they don't overlap with other labels or points.

Cite as: Jason S. Kessler. Scattertext: a Browser-Based Tool for Visualizing how Corpora Differ. ACL System Demonstrations. 2017.

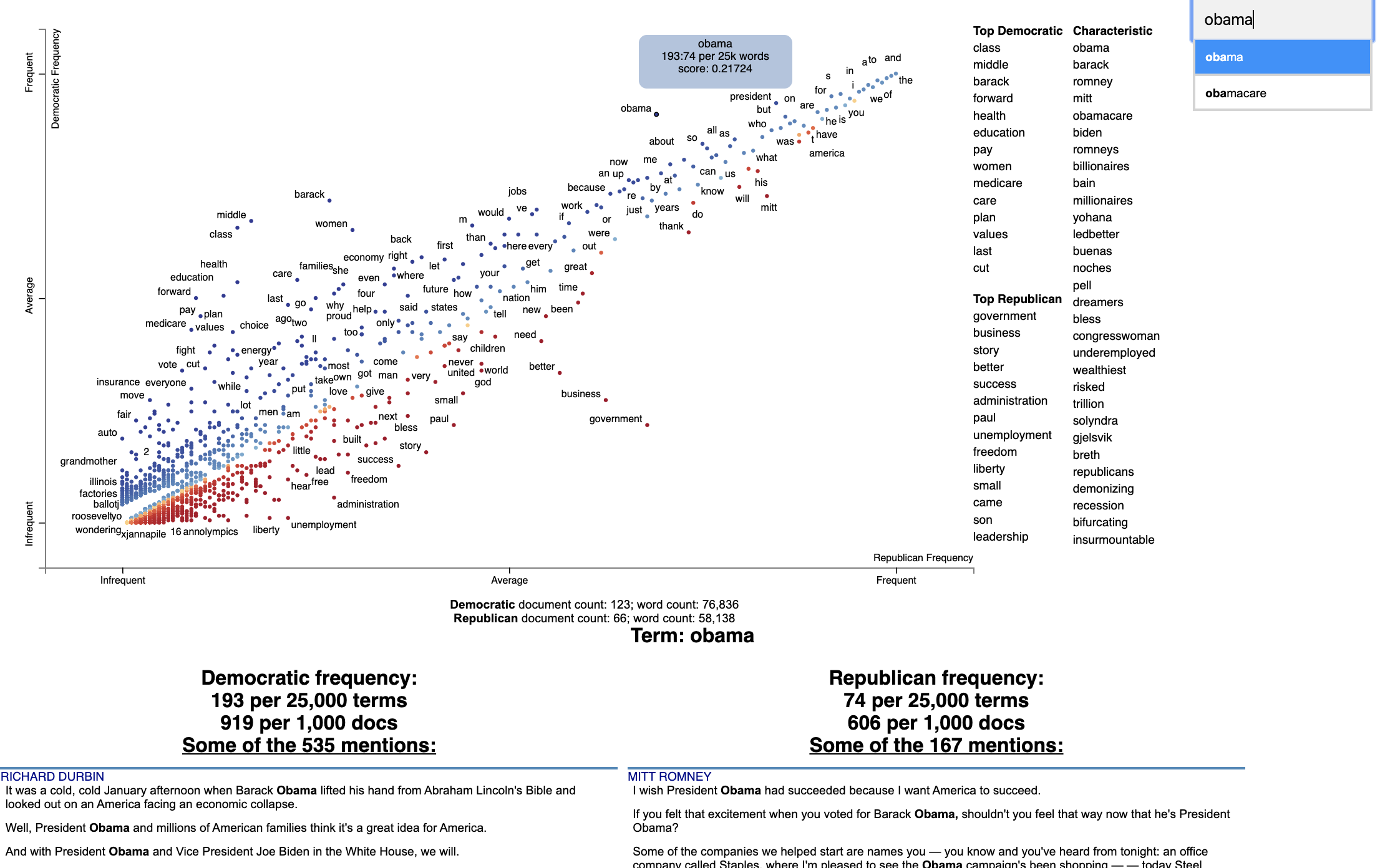

Below is an example of using Scattertext to create visualize terms used in 2012 American political conventions. The 2,000 most party-associated uni grams are displayed as points in the scatter plot. Their x- and y- axes are the dense ranks of their usage by Republican and Democratic speakers respectively.

import scattertext as st

df = st.SampleCorpora.ConventionData2012.get_data().assign(

parse=lambda df: df.text.apply(st.whitespace_nlp_with_sentences)

)

corpus = st.CorpusFromParsedDocuments(

df, category_col='party', parsed_col='parse'

).build().get_unigram_corpus().compact(st.AssociationCompactor(2000))

html = st.produce_scattertext_explorer(

corpus,

category='democrat',

category_name='Democratic',

not_category_name='Republican',

minimum_term_frequency=0,

pmi_threshold_coefficient=0,

width_in_pixels=1000,

metadata=corpus.get_df()['speaker'],

transform=st.Scalers.dense_rank,

include_gradient=True,

left_gradient_term='More Republican',

middle_gradient_term='Metric: Dense Rank Difference',

right_gradient_term='More Democratic',

)

open('./demo_compact.html', 'w').write(html)

The HTML file written would look like the image below. Click on it for the actual interactive visualization.

Citation

Jason S. Kessler. Scattertext: a Browser-Based Tool for Visualizing how Corpora Differ. ACL System Demonstrations. 2017. Link to paper: arxiv.org/abs/1703.00565

@article{kessler2017scattertext,

author = {Kessler, Jason S.},

title = {Scattertext: a Browser-Based Tool for Visualizing how Corpora Differ},

booktitle = {Proceedings of ACL-2017 System Demonstrations},

year = {2017},

address = {Vancouver, Canada},

publisher = {Association for Computational Linguistics},

}

Table of Contents

-

- Help! I don't know Python but I still want to use Scattertext

- Using Scattertext as a text analysis library: finding characteristic terms and their associations

- Visualizing term associations

- Visualizing phrase associations

- Adding color gradients to explain scores

- Visualizing Empath topics and categories

- Visualizing the Moral Foundations 2.0 Dictionary

- Ordering Terms by Corpus Characteristicness

- Document-Based Scatterplots

- Using Cohen's d or Hedge's g to visualize effect size

- Using Cliff's Delta to visualize effect size

- Using Bi-Normal Separation (BNS) to score terms

- Using correlations to explain classifiers

- Using Custom Background Word Frequencies

- Plotting word productivity

-

- Visualizing differences based on only term frequencies

- Visualizing query-based categorical differences

- Visualizing any kind of term score

- Custom term positions

- Emoji analysis

- Visualizing SentencePiece tokens

- Visualizing scikit-learn text classification weights

- Creating lexicalized semiotic squares

- Visualizing topic models

- Creating T-SNE-style word embedding projection plots

- Using SVD to visualize any kind of word embeddings

- Exporting plot to matplotlib

- Using the same scale for both axes

Installation

Install Python 3.11 or higher and run:

$ pip install scattertext

If you cannot (or don't want to) install spaCy, substitute nlp = spacy.load('en') lines with

nlp = scattertext.WhitespaceNLP.whitespace_nlp. Note, this is not compatible

with word_similarity_explorer, and the tokenization and sentence boundary detection

capabilities will be low-performance regular expressions. See demo_without_spacy.py

for an example.

It is recommended you install jieba, spacy, empath, astropy, flashtext, gensim and umap-learn in order to

take full advantage of Scattertext.

Scattertext should mostly work with Python 2.7, but it may not.

The HTML outputs look best in Chrome and Safari.

Style Guide

The name of this project is Scattertext. "Scattertext" is written as a single word

and should be capitalized. When used in Python, the package scattertext should be defined

to the name st, i.e., import scattertext as st.

Overview

This is a tool that's intended for visualizing what words and phrases are more characteristic of a category than others.

Consider the example at the top of the page.

Looking at this seem overwhelming. In fact, it's a relatively simple visualization of word use during the 2012 political convention. Each dot corresponds to a word or phrase mentioned by Republicans or Democrats during their conventions. The closer a dot is to the top of the plot, the more frequently it was used by Democrats. The further right a dot, the more that word or phrase was used by Republicans. Words frequently used by both parties, like "of" and "the" and even "Mitt" tend to occur in the upper-right-hand corner. Although very low frequency words have been hidden to preserve computing resources, a word that neither party used, like "giraffe" would be in the bottom-left-hand corner.

The interesting things happen close to the upper-left and lower-right corners. In the upper-left corner, words like "auto" (as in auto bailout) and "millionaires" are frequently used by Democrats but infrequently or never used by Republicans. Likewise, terms frequently used by Republicans and infrequently by Democrats occupy the bottom-right corner. These include "big government" and "olympics", referring to the Salt Lake City Olympics in which Gov. Romney was involved.

Terms are colored by their association. Those that are more associated with Democrats are blue, and those more associated with Republicans red.

Terms that are most characteristic of the both sets of documents are displayed on the far-right of the visualization.

The inspiration for this visualization came from Dataclysm (Rudder, 2014).

Scattertext is designed to help you build these graphs and efficiently label points on them.

The documentation (including this readme) is a work in progress. Please see the tutorial below as well as the PyData 2017 Tutorial.

Poking around the code and tests should give you a good idea of how things work.

The library covers some novel and effective term-importance formulas, including Scaled F-Score.

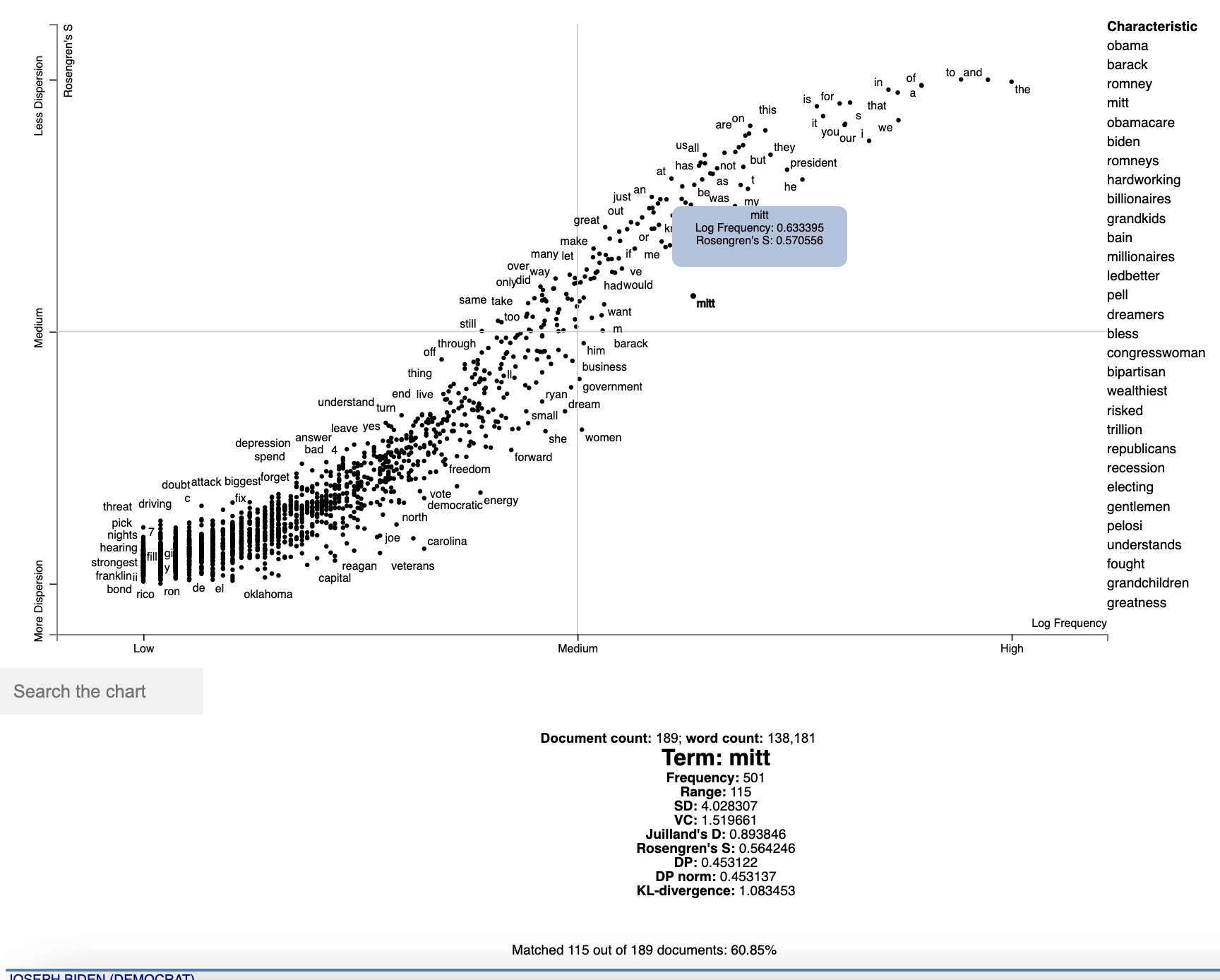

Customizing the Visualization and Plotting Dispersion

New in Scattertext 0.1.0, one can use a dataframe for term/metadata positions and other term-specific data. We can also use it to determine term-specific information which is shown after a term is clicked.

Note that it is possible to disable the use of document categories in Scattertext, as we shall see in this example.

This example covers plotting term dispersion against word frequency and identifying the terms which are most and least dispersed given their frequencies. Using the Rosengren's S dispersion measure (Gries 2021), terms tend to increase in their dispersion scores as they get more frequent. We'll see how we can both plot this effect and factor out the effect of frequency.

This, along with a number of other dispersion metrics presented in Gries (2021), are available and documented

in the Dispersion class, which we'll use later in the section.

Let's start by creating a Convention corpus, but we'll use the CorpusWithoutCategoriesFromParsedDocuments factory

to ensure that no categories are included in the corpus. If we try to find document categories, we'll see that

all documents have the category '_'.

import scattertext as st

df = st.SampleCorpora.ConventionData2012.get_data().assign(

parse=lambda df: df.text.apply(st.whitespace_nlp_with_sentences))

corpus = st.CorpusWithoutCategoriesFromParsedDocuments(

df, parsed_col='parse'

).build().get_unigram_corpus().remove_infrequent_words(minimum_term_count=6)

corpus.get_categories()

# Returns ['_']

Next, we'll create a dataframe for all terms we'll plot. We'll just start by creating a dataframe where we capture the frequency of each term and various dispersion metrics. These will be shown after a term is activated in the plot.

dispersion = st.Dispersion(corpus)

dispersion_df = dispersion.get_df()

dispersion_df.head(3)

Which returns

Frequency Range SD VC Juilland's D Rosengren's S DP DP norm KL-divergence

thank 363 134 3.108113 1.618274 0.707416 0.694898 0.391548 0.391560 0.748808

you 1630 177 12.383708 1.435902 0.888596 0.898805 0.233627 0.233635 0.263337

so 549 155 3.523380 1.212967 0.774299 0.822244 0.283151 0.283160 0.411750

These are discussed in detail in Gries 2021.

We'll use Rosengren's S to find the dispersion of each term. It's which a metric designed for corpus parts (convention speeches in our case) of varying length. Where n is the number of documents in the corpus, s_i is the percentage of tokens in the corpus found in document i, v_i is term count in document i, and f is the total number of tokens in the corpus of type term type.

Rosengren's

S: ^2}{f})

^2}{f})

In order to start plotting, we'll need to add coordinates for each term to the data frame.

To use the dataframe_scattertext function, you need, at a minimum a dataframe with 'X' and 'Y' columns.

The Xpos and Ypos columns indicate the positions of the original X and Y values on the scatterplot, and

need to be between 0 and 1. Functions in st.Scalers perform this scaling. Absent Xpos or Ypos,

st.Scalers.scale would be used.

Here is a sample of values:

st.Scalers.scale(vec)Rescales the vector to where the minimum value is 0 and the maximum is 1.st.Scalers.log_scale(vec)Rescales the lgo of the vectorst.Scalers.dense_ranke(vec)Rescales the dense rank of the vectorst.Scalers.scale_center_zero_abs(vec)Rescales a vector with both positive and negative values such that the 0 value in the original vector is plotted at 0.5, negative values are projected from [-argmax(abs(vec)), 0] to [0, 0.5] and positive values projected from [0, argmax(abs(vec))] to [0.5, 1].

dispersion_df = dispersion_df.assign(

X=lambda df: df.Frequency,

Xpos=lambda df: st.Scalers.log_scale(df.X),

Y=lambda df: df["Rosengren's S"],

Ypos=lambda df: st.Scalers.scale(df.Y),

)

Note that the Ypos column here is not necessary since Y would automatically be scaled.

Finally, since we are not distinguishing between categories, we can set ignore_categories=True.

We can now plot this graph using the dataframe_scattertext function:

html = st.dataframe_scattertext(

corpus,

plot_df=dispersion_df,

metadata=corpus.get_df()['speaker'] + ' (' + corpus.get_df()['party'].str.upper() + ')',

ignore_categories=True,

x_label='Log Frequency',

y_label="Rosengren's S",

y_axis_labels=['Less Dispersion', 'Medium', 'More Dispersion'],

)

Which yields (click for an interactive version):

Note that we can see various dispersion statistics under a term's name, in addition to the standard usage statistics. To

customize the statistics which are displayed, set the term_description_column=[...] parameter with a list of column

names to be displayed.

One issue in this dispersion chart, which tends to be common to dispersion metrics in general, is that dispersion and frequency tend to have a high correlation, but with a complex, non-linear curve. Depending on the metric, this correlation curve could be power, linear, sigmoidal, or typically, something else.

In order to factor out this correlation, we can predict the dispersion from frequency using a non-parametric regressor, and see which terms have the highest and lowest residuals with respect to their expected dispersions based on their frequencies.

In this case, we'll use a KNN regressor with 10 neighbors to predict Rosengren'S from term frequencies

(dispersion_df.X and .Y respectively), and compute the residual.

We'll the residual to color points, with a neutral color for residuals around 0 and other colors for positive and

negative values. We'll add a column in the data frame for point colors, and call it ColorScore. It is populated

with values between 0 and 1, with 0.5 as a netural color on the d3 interpolateWarm color scale. We use

st.Scalers.scale_center_zero_abs, discussed above, to make this transformation.

from sklearn.neighbors import KNeighborsRegressor

dispersion_df = dispersion_df.assign(

Expected=lambda df: KNeighborsRegressor(n_neighbors=10).fit(

df.X.values.reshape(-1, 1), df.Y

).predict(df.X.values.reshape(-1, 1)),

Residual=lambda df: df.Y - df.Expected,

ColorScore=lambda df: st.Scalers.scale_center_zero_abs(df.Residual)

)

Now we are ready to plot our colored dispersion chart. We assign the ColorScore column name to the

访问官网

访问官网 Github

Github 论文

论文