KinD++

This is a TensorFlow implementation of KinD++. (Paper: Beyond Brightening Low-light Images)

Zhang, Y. et al. Beyond Brightening Low-light Images. IJCV, 2021.

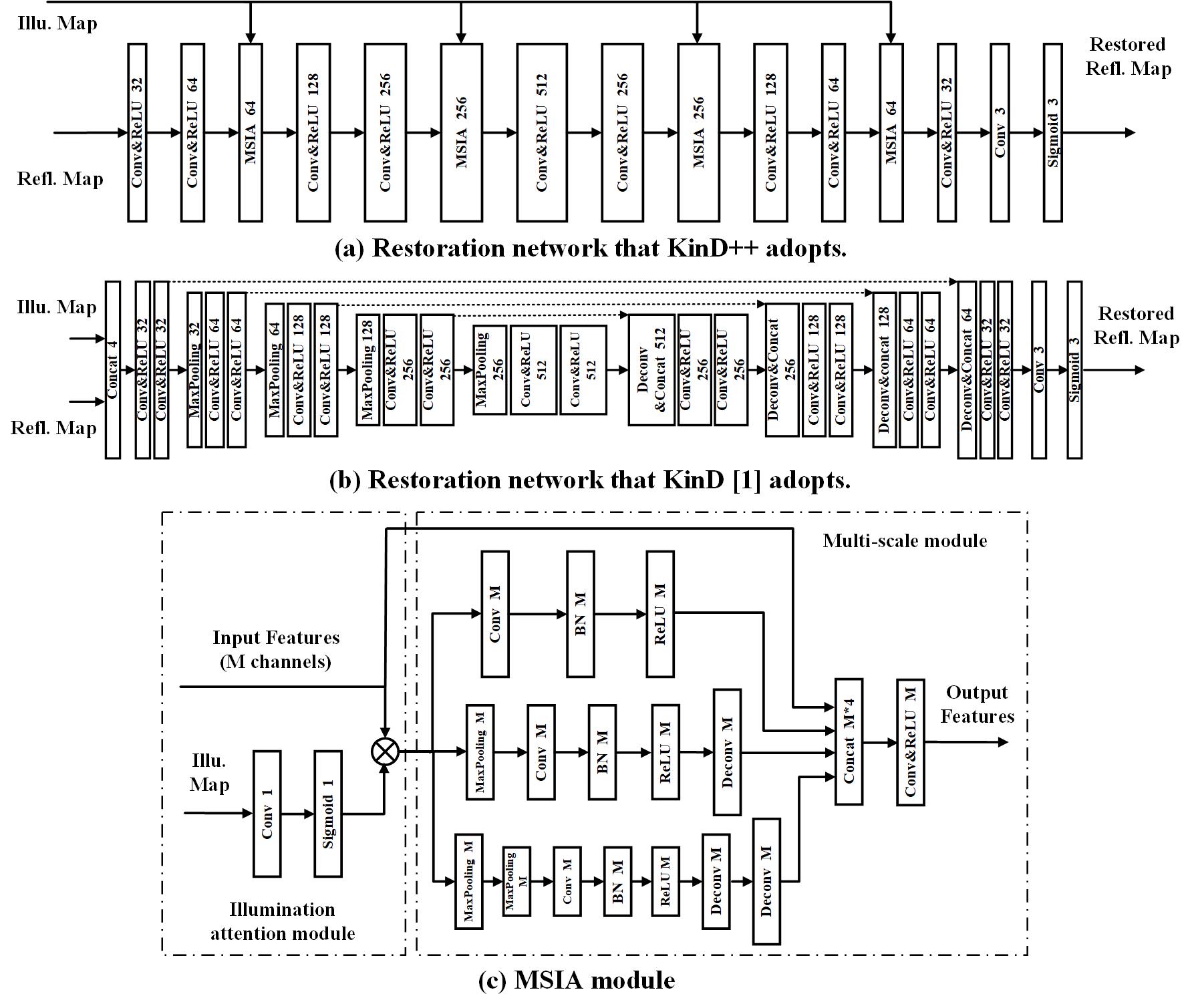

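We propose a novel multi-scale illumination attention module (MSIA), which alleviates the visual defects left by KinD, such as non-uniform spots and over-smoothing.

The KinD network was proposed in the following paper.

Kindling the Darkness: A Practical Low-light Image Enhancer. In ACM MM 2019. Yonghua Zhang, Jiawan Zhang, Xiaojie Guo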

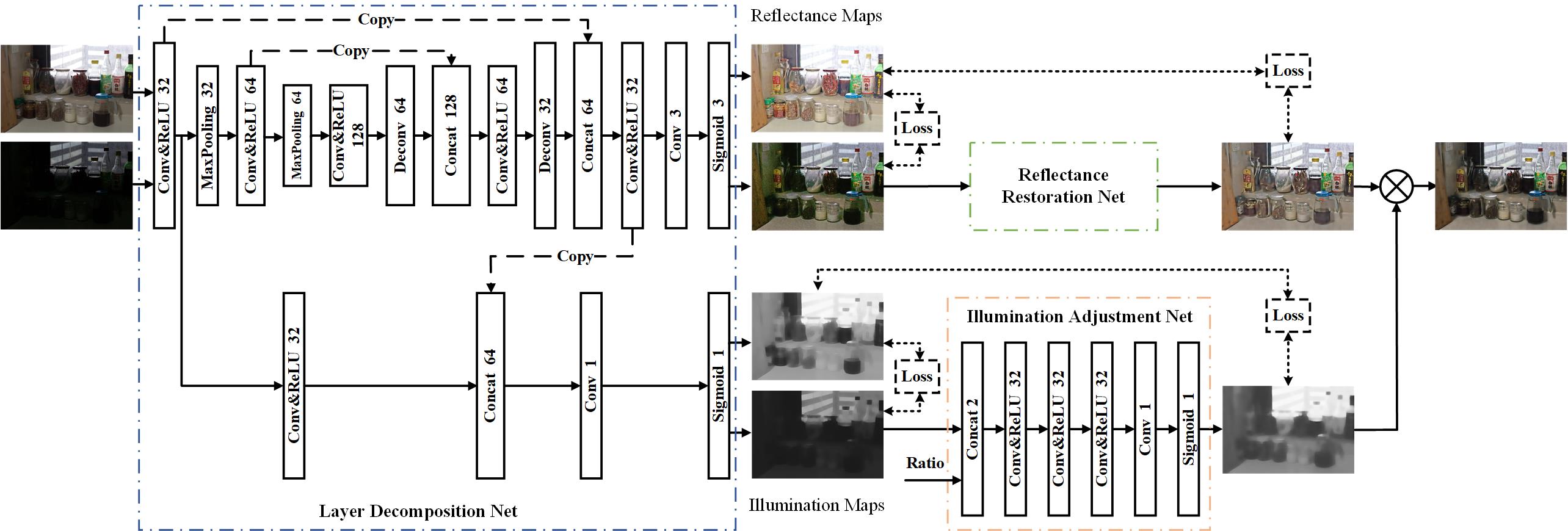

The network architecture of KinD++:

The reflectance restoration network and the MSIA module:

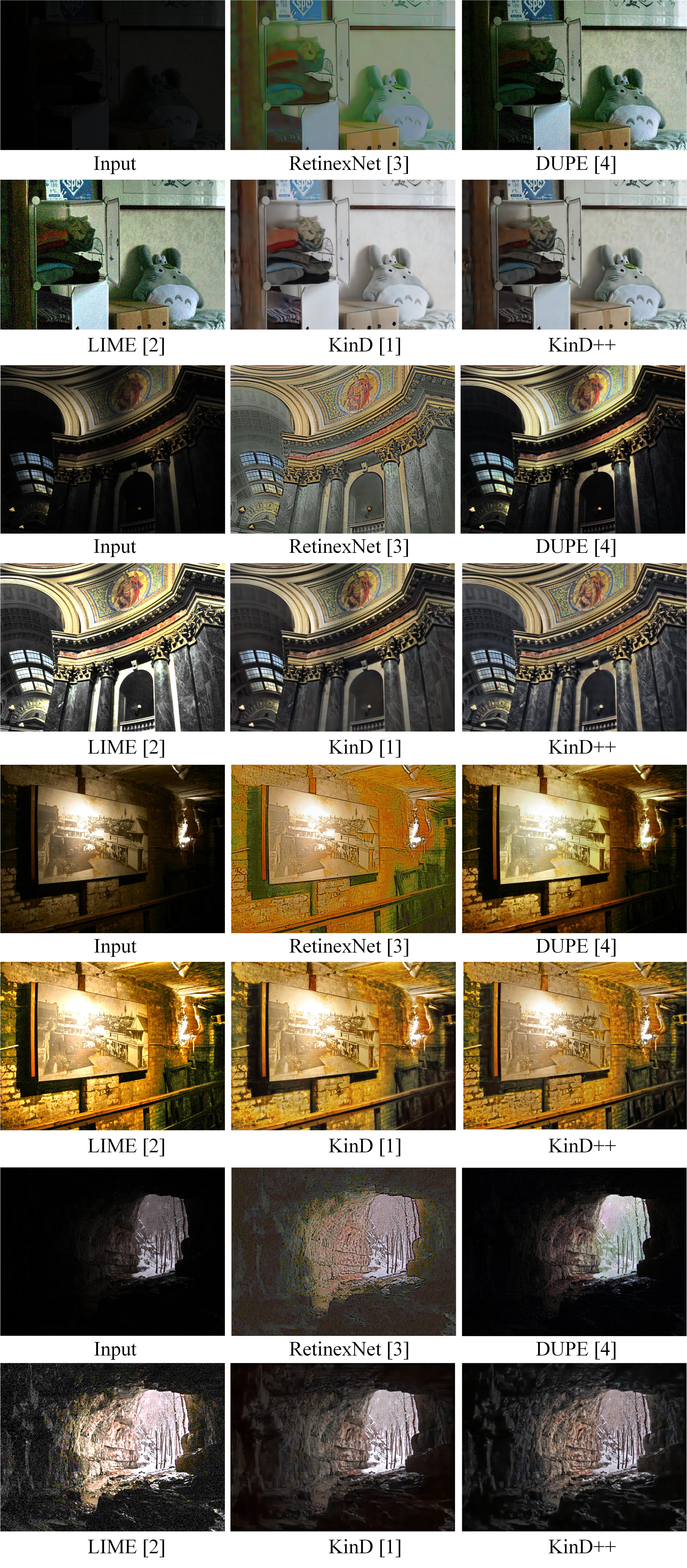

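Visual comparison with state-of-the-art low-light image enhancement methods.

We have modified the code to better support TensorFlow 2.0. You can now run it directly with TensorFlow 2.0.

Requirements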

- Python

- TensorFlow >= 2.0

- numpy, PIL

Test

Put your test images into the 'test_images' folder, download the pre-trained checkpoints from Google Drive or Baidu Pan, then run

python evaluate.py
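Before running the evaluation, it can help to confirm that the 'test_images' folder actually contains images. The following is a minimal sketch using only the Python standard library. The helper name `list_test_images` and the set of accepted extensions are assumptions made for illustration; they are not part of this repository, and `evaluate.py` may accept a different set of formats.

```python
from pathlib import Path

# Accepted extensions are an assumption; adjust to whatever evaluate.py reads.
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".bmp"}


def list_test_images(folder="test_images", exts=IMAGE_EXTS):
    """Return the sorted image paths found directly inside `folder`.

    Hypothetical helper: returns an empty list if the folder is missing.
    """
    root = Path(folder)
    if not root.is_dir():
        return []
    return sorted(
        p for p in root.iterdir()
        if p.is_file() and p.suffix.lower() in exts
    )


images = list_test_images()
print(f"found {len(images)} image(s) in test_images")
```

If the count is zero, check the folder name and location relative to the directory from which you run `evaluate.py`.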

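The test datasets (e.g. DICM, LIME, MEF and NPE) can be downloaded from Google Drive. Our enhancement results on these datasets can also be downloaded from Google Drive.

Training

The original LOL dataset can be downloaded from here. We rearranged the original LOL dataset and added several pairs of all-zero images and 260 pairs of synthetic images to improve the decomposition and restoration results. The training dataset can be downloaded from Google Drive. For training, just run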

python decomposition_net_train.py

python illumination_adjustment_net_train.py

python reflectance_restoration_net_train.py

You can also evaluate on the LOL dataset. Just run

python evaluate_LOLdataset.py
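The three training scripts above can be chained from a single Python driver. This is a minimal sketch, assuming the scripts are meant to be run in the order listed and from the repository root; the names `build_commands` and `run_all` are hypothetical helpers, not part of this repository. By default it only prints the commands.

```python
import subprocess
import sys

# Script names come from this README; treating the listed order as the
# required order is an assumption.
TRAIN_SCRIPTS = [
    "decomposition_net_train.py",
    "illumination_adjustment_net_train.py",
    "reflectance_restoration_net_train.py",
]


def build_commands(scripts=TRAIN_SCRIPTS, python=sys.executable):
    """Return one argv list per training script, preserving order."""
    return [[python, script] for script in scripts]


def run_all(commands, dry_run=True):
    """Print each command; when dry_run is False, also execute it.

    check=True makes subprocess.run raise on a non-zero exit code,
    so a failed stage stops the remaining stages from running.
    """
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


# Dry run: prints the three commands without starting any training.
run_all(build_commands())
```

Call `run_all(build_commands(), dry_run=False)` to actually launch the stages one after another.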

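Overview of low-light image enhancement methods

Traditional methods:

- Single-scale Retinex (SSR) [5]

- Multi-scale Retinex (MSR) [6]

- Naturalness preserved enhancement (NPE) [7]

- Fusion-based enhancing method (MEF) [8]

- LIME [2]

- SRIE [9]

- Dong [10]

- BIMEF [11]

The __code__ of the above methods can be found here.

- CRM [12] (code)

Deep learning methods: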

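NIQE code

The no-reference metric NIQE is used for quantitative comparison. The original code for computing NIQE is here. To improve robustness, we follow the author's code and retrain the model parameters after extending the training set with 100 high-resolution natural images from the PIRM dataset. Put the original 125 images and the additional 100 images (dir: PIRM_dataset\Validation\Original) into a single folder named 'data', then run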

[mu_prisparam cov_prisparam] = estimatemodelparam('data',96,96,0,0,0.75);

After retraining, the file 'modelparameters_new.mat' will be generated. We use this model to evaluate all results.

References
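Assembling the 'data' folder for NIQE retraining can be scripted. The sketch below copies every file from a list of source directories into one target folder and refuses to overwrite on a name clash. The helper name `collect_images` and the source directory names in the usage comment are hypothetical; only the folder name 'data' and the PIRM path come from this README.

```python
import shutil
from pathlib import Path


def collect_images(source_dirs, target_dir="data"):
    """Copy all files from each source directory into target_dir.

    Returns the number of files copied. Raises FileExistsError on a
    file name collision instead of silently overwriting.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = 0
    for src in source_dirs:
        for path in sorted(Path(src).iterdir()):
            if not path.is_file():
                continue  # skip subdirectories
            dest = target / path.name
            if dest.exists():
                raise FileExistsError(f"{dest} already exists")
            shutil.copy2(path, dest)
            copied += 1
    return copied


# Example (directory names are placeholders for your local copies):
# collect_images(["niqe_original_125", "PIRM_dataset/Validation/Original"])
```

With the 125 original images and the 100 PIRM images in place, the function should report 225 copied files.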

[1] Y. Zhang, J. Zhang, and X. Guo, "Kindling the darkness: A practical low-light image enhancer," in ACM MM, 2019, pp. 1632–1640.

[2] X. Guo, Y. Li, and H. Ling, "Lime: Low-light image enhancement via illumination map estimation," IEEE TIP, vol. 26, no. 2, pp. 982–993, 2017.

[3] C. Wei, W. Wang, W. Yang, and J. Liu, "Deep retinex decomposition for low-light enhancement," in BMVC, 2018.

[4] R. Wang, Q. Zhang, C.-W. Fu, X. Shen, W.-S. Zheng, and J. Jia, "Underexposed photo enhancement using deep illumination estimation," in CVPR, 2019, pp. 6849–6857.

[5] D. J. Jobson, Z. Rahman, and G. A. Woodell, "Properties and performance of a center/surround retinex," IEEE TIP, vol. 6, no. 3, pp. 451–462, 1997.

[6] D. J. Jobson, Z. Rahman, and G. A. Woodell, "A multiscale retinex for bridging the gap between color images and the human observation of scenes," IEEE TIP, vol. 6, no. 7, pp. 965–976, 1997.

[7] S. Wang, J. Zheng, H. Hu, and B. Li, "Naturalness preserved enhancement algorithm for non-uniform illumination images," IEEE TIP, vol. 22, no. 9, pp. 3538–3548, 2013.

[8] X. Fu, D. Zeng, H. Yue, Y. Liao, X. Ding, and J. Paisley, "A fusion-based enhancing method for weakly illuminated images," Signal Processing, vol. 129, pp. 82–96, 2016.

[9] X. Fu, D. Zeng, Y. Huang, X. Zhang, and X. Ding, "A weighted variational model for simultaneous reflectance and illumination estimation," in CVPR, 2016, pp. 2782–2790.

[10] X. Dong, Y. Pang, and J. Wen, "Fast efficient algorithm for enhancement of low lighting video," in ICME, 2011, pp. 1–6.

[11] Z. Ying, L. Ge, and W. Gao, "A bio-inspired multi-exposure fusion framework for low-light image enhancement," arXiv: 1711.00591, 2017.

[12] Z. Ying, L. Ge, Y. Ren, R. Wang, and W. Wang, "A new low-light image enhancement algorithm using camera response model," in ICCVW, 2018, pp. 3015–3022.

[13] W. Wang, W. Chen, W. Yang, and J. Liu, "Gladnet: Low-light enhancement network with global awareness," in FG, 2018.
