Official YOLOv7

Implementation of paper - YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

Web Demo

- Integrated into Huggingface Spaces 🤗 using Gradio. Try out the web demo.

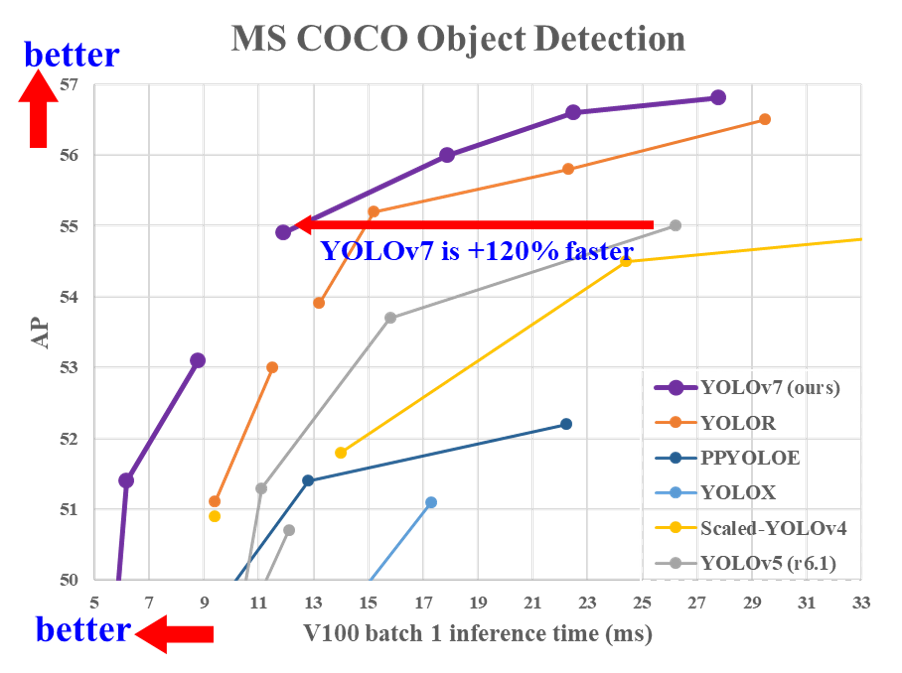

Performance

MS COCO

| Model | Test size | AP (test) | AP50 (test) | AP75 (test) | FPS (batch 1) | Avg. time (batch 32) |
|---|---|---|---|---|---|---|
| YOLOv7 | 640 | 51.4% | 69.7% | 55.9% | 161 fps | 2.8 ms |
| YOLOv7-X | 640 | 53.1% | 71.2% | 57.8% | 114 fps | 4.3 ms |
| YOLOv7-W6 | 1280 | 54.9% | 72.6% | 60.1% | 84 fps | 7.6 ms |
| YOLOv7-E6 | 1280 | 56.0% | 73.5% | 61.2% | 56 fps | 12.3 ms |
| YOLOv7-D6 | 1280 | 56.6% | 74.0% | 61.8% | 44 fps | 15.0 ms |
| YOLOv7-E6E | 1280 | 56.8% | 74.4% | 62.1% | 36 fps | 18.7 ms |
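To relate the two speed columns, the sketch below converts batch-1 FPS into per-image latency and the batch-32 average time into throughput. It assumes the batch-32 column is the average time per image; the helper names are illustrative, and the numbers are copied from the table.

```python
# Figures copied from the MS COCO table: (model, batch-1 fps, batch-32 avg. ms).
TABLE = [
    ("YOLOv7", 161, 2.8),
    ("YOLOv7-X", 114, 4.3),
    ("YOLOv7-W6", 84, 7.6),
    ("YOLOv7-E6", 56, 12.3),
    ("YOLOv7-D6", 44, 15.0),
    ("YOLOv7-E6E", 36, 18.7),
]

def fps_to_ms(fps):
    """Per-image latency in milliseconds implied by a frames-per-second figure."""
    return 1000.0 / fps

def ms_to_throughput(ms):
    """Images per second implied by an average time per image in milliseconds
    (assumes the batch-32 column is per image, not per batch)."""
    return 1000.0 / ms

for name, fps, avg_ms in TABLE:
    print(f"{name:11s} batch 1: {fps_to_ms(fps):5.1f} ms/img  "
          f"batch 32: {ms_to_throughput(avg_ms):6.1f} img/s")
```

Under that assumption, batching mainly buys throughput: YOLOv7 goes from roughly 6.2 ms per image at batch 1 to 2.8 ms per image on average at batch 32.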

Installation

Docker environment (recommended)


# create the docker container; you can increase the shared memory size if you have more memory

nvidia-docker run --name yolov7 -it -v your_coco_path/:/coco/ -v your_code_path/:/yolov7 --shm-size=64g nvcr.io/nvidia/pytorch:21.08-py3

# apt install required packages

apt update

apt install -y zip htop screen libgl1-mesa-glx

# pip install required packages

pip install seaborn thop

# go to the code folder

cd /yolov7

Testing

yolov7.pt yolov7x.pt yolov7-w6.pt yolov7-e6.pt yolov7-d6.pt yolov7-e6e.pt

python test.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.65 --device 0 --weights yolov7.pt --name yolov7_640_val

You will get the following results:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.69730

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.55521

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.35247

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.55937

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.66693

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.38453

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.63765

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.68772

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.53766

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.73549

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.83868

To measure accuracy, download the COCO annotations for pycocotools to ./coco/annotations/instances_val2017.json.
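When comparing runs, it can help to pull the printed summary into a dictionary. The following is a minimal sketch, assuming the summary lines look like the output above; `parse_coco_summary` is a hypothetical helper, not part of the repository.

```python
import re

# Matches summary lines such as:
# Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206
_LINE = re.compile(
    r"Average (Precision|Recall)\s+\((AP|AR)\)\s+@\[\s*IoU=([\d.:]+)\s*\|"
    r"\s*area=\s*(\w+)\s*\|\s*maxDets=\s*(\d+)\s*\]\s*=\s*([-\d.]+)"
)

def parse_coco_summary(text):
    """Return {(metric, iou, area, max_dets): value} for every summary line found."""
    results = {}
    for match in _LINE.finditer(text):
        _, metric, iou, area, max_dets, value = match.groups()
        results[(metric, iou, area, int(max_dets))] = float(value)
    return results

sample = """
Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206
Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.83868
"""
print(parse_coco_summary(sample))
```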

Training

Data preparation

bash scripts/get_coco.sh
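The labels use a YOLO-style text format. As a sketch, assuming the usual convention of one `.txt` file per image with rows `class x_center y_center width height` normalised to [0, 1], a COCO box `[x_min, y_min, width, height]` in pixels would convert as follows. The helper names are hypothetical, and `class_id` is assumed to already be a contiguous 0-based index (COCO category ids are not contiguous).

```python
def coco_to_yolo(bbox, img_w, img_h):
    """Convert a COCO box [x_min, y_min, width, height] in pixels to
    (x_center, y_center, width, height) normalised by the image size."""
    x, y, w, h = bbox
    return ((x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h)

def label_line(class_id, bbox, img_w, img_h):
    """Format one row of a YOLO-style label file (assumed format)."""
    xc, yc, w, h = coco_to_yolo(bbox, img_w, img_h)
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"

print(label_line(0, [100, 50, 200, 100], 640, 480))
```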

Single GPU training

# train p5 models

python train.py --workers 8 --device 0 --batch-size 32 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models

python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml
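The single-GPU commands use different batch sizes for p5 (32) and p6 (16) models. Assuming `train.py` follows the YOLOv5 convention of a nominal batch size of 64, gradients are accumulated over several batches before each optimizer step and weight decay is rescaled to match. The sketch below reproduces only that arithmetic; the constant and function names are assumptions, not the script's API.

```python
NOMINAL_BATCH_SIZE = 64  # assumption: YOLOv5-style nominal batch size

def accumulation_steps(total_batch_size, nbs=NOMINAL_BATCH_SIZE):
    """Batches whose gradients are summed before each optimizer step (at least 1)."""
    return max(round(nbs / total_batch_size), 1)

def scaled_weight_decay(weight_decay, total_batch_size, nbs=NOMINAL_BATCH_SIZE):
    """Weight decay rescaled by the effective batch size (batch * accumulation) / nbs."""
    steps = accumulation_steps(total_batch_size, nbs)
    return weight_decay * total_batch_size * steps / nbs

for batch in (32, 16, 128):
    steps = accumulation_steps(batch)
    print(f"batch {batch:3d}: accumulate {steps} -> effective batch {batch * steps}")
```

With these assumptions, both single-GPU commands reach an effective batch of 64, while the 128-image multi-GPU runs step the optimizer every batch.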

Multiple GPU training

# train p5 models

python -m torch.distributed.launch --nproc_per_node 4 --master_port 9527 train.py --workers 8 --device 0,1,2,3 --sync-bn --batch-size 128 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models

python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 train_aux.py --workers 8 --device 0,1,2,3,4,5,6,7 --sync-bn --batch-size 128 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml
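In both multi-GPU commands, `--batch-size` is assumed to be the total over all GPUs, split evenly across the processes started by `torch.distributed.launch` (one per GPU, set by `--nproc_per_node`). Under that assumption, the per-GPU batch works out as follows; `per_gpu_batch` is a hypothetical helper.

```python
def per_gpu_batch(total_batch_size, world_size):
    """Per-process batch size when --batch-size is the total over all GPUs."""
    if total_batch_size % world_size != 0:
        raise ValueError("--batch-size must be a multiple of the number of GPUs")
    return total_batch_size // world_size

print(per_gpu_batch(128, 4))  # p5 command: 4 processes
print(per_gpu_batch(128, 8))  # p6 command: 8 processes
```

This gives 32 images per GPU for p5 and 16 for p6, the same per-device batch as the single-GPU commands.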

Transfer learning

yolov7_training.pt yolov7x_training.pt yolov7-w6_training.pt yolov7-e6_training.pt yolov7-d6_training.pt yolov7-e6e_training.pt

Single GPU finetuning on a custom dataset

# finetune p5 models

python train.py --workers 8 --device 0 --batch-size 32 --data data/custom.yaml --img 640 640 --cfg cfg/training/yolov7-custom.yaml --weights 'yolov7_training.pt' --name yolov7-custom --hyp data/hyp.scratch.custom.yaml

# finetune p6 models

python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/custom.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6-custom.yaml --weights 'yolov7-w6_training.pt' --name yolov7-w6-custom --hyp data/hyp.scratch.custom.yaml
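Finetuning needs a dataset config such as `data/custom.yaml`. Below is a minimal sketch that renders one, assuming the same `train`/`val`/`nc`/`names` keys as `data/coco.yaml`; the paths and class names are hypothetical. The `nc` value presumably also has to match the class count in the model config (`cfg/training/yolov7-custom.yaml`).

```python
def custom_data_yaml(train, val, names):
    """Render a minimal dataset config in the assumed style of data/coco.yaml."""
    lines = [
        f"train: {train}",           # text file listing training images (hypothetical path)
        f"val: {val}",               # text file listing validation images (hypothetical path)
        f"nc: {len(names)}",         # number of classes, derived from names
        "names: [" + ", ".join(f"'{n}'" for n in names) + "]",
    ]
    return "\n".join(lines) + "\n"

print(custom_data_yaml("./custom/train.txt", "./custom/val.txt", ["cat", "dog"]))
```

Deriving `nc` from `names` avoids the two drifting apart when classes are added.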

Re-parameterization

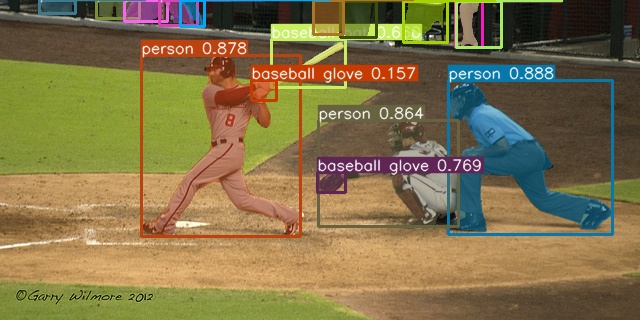
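Re-parameterization merges parallel training-time branches into a single convolution for inference. Because convolution is linear, a 3×3 branch, a 1×1 branch and an identity branch can be summed into one 3×3 kernel by zero-padding the 1×1 weight and the identity to 3×3. The sketch below checks this on a single channel in plain Python; it illustrates the general RepVGG-style idea only, omits batch-norm folding, and is not the repository's implementation.

```python
def conv2d(x, k, pad):
    """Single-channel 2-D cross-correlation with zero padding and stride 1."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    out_h, out_w = h + 2 * pad - kh + 1, w + 2 * pad - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            for a in range(kh):
                for b in range(kw):
                    r, c = i + a - pad, j + b - pad
                    if 0 <= r < h and 0 <= c < w:
                        out[i][j] += x[r][c] * k[a][b]
    return out

def embed_center(value):
    """A 3x3 kernel that is zero except for `value` at the centre."""
    k = [[0.0] * 3 for _ in range(3)]
    k[1][1] = value
    return k

def multi_branch(x, k3, k1):
    """Training-time form: 3x3 conv + 1x1 conv + identity, summed."""
    y3 = conv2d(x, k3, pad=1)
    y1 = conv2d(x, k1, pad=0)
    return [[y3[i][j] + y1[i][j] + x[i][j] for j in range(len(x[0]))]
            for i in range(len(x))]

def merge_kernels(k3, k1):
    """Inference-time form: one 3x3 kernel equal to the sum of the three branches."""
    k1_padded, identity = embed_center(k1[0][0]), embed_center(1.0)
    return [[k3[a][b] + k1_padded[a][b] + identity[a][b] for b in range(3)]
            for a in range(3)]

x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.1, 0.2, 0.0], [0.0, 0.5, -0.3], [0.4, 0.0, 0.1]]
k1 = [[2.0]]
train_out = multi_branch(x, k3, k1)                   # three branches
infer_out = conv2d(x, merge_kernels(k3, k1), pad=1)   # one merged conv
```

`train_out` and `infer_out` agree up to floating-point rounding, which is why the merged model can be used at inference without changing its outputs.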

Inference

On video:

python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source yourvideo.mp4

On image:

python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source inference/images/horses.jpg
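`--conf 0.25` discards low-confidence boxes before non-maximum suppression (NMS). The following is a generic, class-agnostic greedy NMS sketch in plain Python, not the code `detect.py` actually runs; `iou_thres=0.45` is an illustrative value.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def filter_and_nms(detections, conf_thres=0.25, iou_thres=0.45):
    """detections: list of (box, score). Drop scores <= conf_thres, then keep
    boxes in descending score order unless they overlap a kept box too much."""
    candidates = sorted((d for d in detections if d[1] > conf_thres),
                        key=lambda d: d[1], reverse=True)
    kept = []
    for box, score in candidates:
        if all(iou(box, other) <= iou_thres for other, _ in kept):
            kept.append((box, score))
    return kept

dets = [((0, 0, 10, 10), 0.9), ((1, 1, 10, 10), 0.8),
        ((20, 20, 30, 30), 0.7), ((0, 0, 5, 5), 0.1)]
print(filter_and_nms(dets))
```

Here the 0.8 box overlaps the 0.9 box with IoU 0.81 and is suppressed, and the 0.1 box falls below the confidence threshold.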

Export

Pytorch to CoreML (and inference on MacOS/iOS)

Pytorch to ONNX with NMS (and inference)

python export.py --weights yolov7-tiny.pt --grid --end2end --simplify \
        --topk-all 100 --iou-thres 0.65 --conf-thres 0.35 --img-size 640 640 --max-wh 640
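A common trick for class-aware NMS, and presumably what `--max-wh 640` configures here, is to shift every box by `class_id * max_wh` so that boxes of different classes can never overlap; a single class-agnostic NMS pass then behaves per class. A minimal sketch under that assumption (`offset_boxes` is a hypothetical helper):

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def offset_boxes(boxes, class_ids, max_wh):
    """Shift each box by class_id * max_wh on both axes. If all coordinates lie
    in [0, max_wh], boxes of different classes end up disjoint."""
    return [tuple(v + c * max_wh for v in box) for box, c in zip(boxes, class_ids)]

a, b = (0, 0, 100, 100), (10, 10, 110, 110)
print(iou(a, b))                                    # overlapping boxes
print(iou(*offset_boxes([a, b], [0, 1], 640)))      # different classes: no overlap
```

Boxes of the same class receive the same shift, so their IoU, and therefore the NMS decision between them, is unchanged.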

Pytorch to TensorRT with NMS (and inference)

wget https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-tiny.pt

python export.py --weights ./yolov7-tiny.pt --grid --end2end --simplify --topk-all 100 --iou-thres 0.65 --conf-thres 0.35 --img-size 640 640

git clone https://github.com/Linaom1214/tensorrt-python.git

python ./tensorrt-python/export.py -o yolov7-tiny.onnx -e yolov7-tiny-nms.trt -p fp16
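The exported engine takes a fixed 640×640 input (`--img-size 640 640`). Assuming letterbox-style preprocessing (resize with the aspect ratio preserved, then pad evenly on both sides), the scale and padding, and the mapping of detected boxes back to the original image, can be sketched as follows; the function names are hypothetical.

```python
def letterbox_params(src_w, src_h, dst_w=640, dst_h=640):
    """Scale factor and per-side padding that fit a src image into dst."""
    r = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * r), round(src_h * r)
    pad_x, pad_y = (dst_w - new_w) / 2, (dst_h - new_h) / 2
    return r, pad_x, pad_y

def unletterbox_box(box, r, pad_x, pad_y):
    """Map an (x1, y1, x2, y2) box from network-input pixels to original pixels."""
    x1, y1, x2, y2 = box
    return ((x1 - pad_x) / r, (y1 - pad_y) / r, (x2 - pad_x) / r, (y2 - pad_y) / r)

r, pad_x, pad_y = letterbox_params(1280, 720)   # e.g. a 720p video frame
print(r, pad_x, pad_y)
print(unletterbox_box((100, 240, 200, 340), r, pad_x, pad_y))
```

For a 1280×720 frame the image is halved to 640×360 and padded by 140 pixels above and below, so detections must have that offset removed before rescaling.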

Pytorch to TensorRT another way

wget https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-tiny.pt

python export.py --weights yolov7-tiny.pt --grid --include-nms

git clone https://github.com/Linaom1214/tensorrt-python.git

python ./tensorrt-python/export.py -o yolov7-tiny.onnx -e yolov7-tiny-nms.trt -p fp16

# or use trtexec to convert the ONNX model to a TensorRT engine

/usr/src/tensorrt/bin/trtexec --onnx=yolov7-tiny.onnx --saveEngine=yolov7-tiny-nms.trt --fp16

Tested with: Python 3.7.13, Pytorch 1.12.0+cu113

Pose estimation

See keypoint.ipynb.

Instance segmentation (with NTU)

See instance.ipynb.

Instance segmentation

YOLOv7 for instance segmentation (YOLOR + YOLOv5 + YOLACT)

| Model | Test size | AP (box) | AP50 (box) | AP75 (box) | AP (mask) | AP50 (mask) | AP75 (mask) |
|---|---|---|---|---|---|---|---|
| YOLOv7-seg | 640 | 51.4% | 69.4% | 55.8% | 41.5% | 65.5% | 43.7% |
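AP (box) and AP (mask) differ in how a prediction is matched to ground truth: box AP uses box IoU, while mask AP, as in the COCO evaluation, uses the overlap of pixel masks. A minimal mask-IoU sketch in plain Python, with masks as nested 0/1 lists (`mask_iou` is a hypothetical helper):

```python
def mask_iou(mask_a, mask_b):
    """IoU of two binary masks of equal shape, given as lists of 0/1 rows."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += 1 if a and b else 0   # pixel in both masks
            union += 1 if a or b else 0    # pixel in either mask
    return inter / union if union else 0.0

a = [[1, 1, 0], [1, 1, 0], [0, 0, 0]]
b = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
print(mask_iou(a, b))
```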

Anchor-free detection head

YOLOv7 with decoupled TAL head (YOLOR + YOLOv5 + YOLOv6)

| Model | Test size | AP (val) | AP50 (val) | AP75 (val) |
|---|---|---|---|---|
| YOLOv7-u6 | 640 | 52.6% | 69.7% | 57.3% |
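TAL (task-aligned learning, from TOOD) ranks candidate anchors for each ground-truth object by combining the classification score s and the IoU u as t = s^α · u^β. The sketch below assumes TOOD's defaults α = 1 and β = 6; the values used by this head may differ, and the helper names are hypothetical.

```python
def alignment_metric(score, iou, alpha=1.0, beta=6.0):
    """TOOD-style task-alignment metric t = score**alpha * iou**beta (assumed defaults)."""
    return (score ** alpha) * (iou ** beta)

def top_k_aligned(candidates, k):
    """candidates: list of (anchor_id, cls_score, iou_with_gt) for one ground truth.
    Return the k anchor ids with the largest alignment metric."""
    ranked = sorted(candidates, key=lambda c: alignment_metric(c[1], c[2]), reverse=True)
    return [anchor_id for anchor_id, _, _ in ranked[:k]]

candidates = [("a", 0.9, 0.5), ("b", 0.5, 0.9), ("c", 0.8, 0.8)]
print(top_k_aligned(candidates, 2))
```

With β much larger than α, localization quality dominates: anchor "a" has the highest score but the lowest IoU and is not selected.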

Citation

@inproceedings{wang2023yolov7,

title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors},

author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2023}

}

@article{wang2023designing,

title={Designing Network Design Strategies Through Gradient Path Analysis},

author={Wang, Chien-Yao and Liao, Hong-Yuan Mark and Yeh, I-Hau},

journal={Journal of Information Science and Engineering},

year={2023}

}

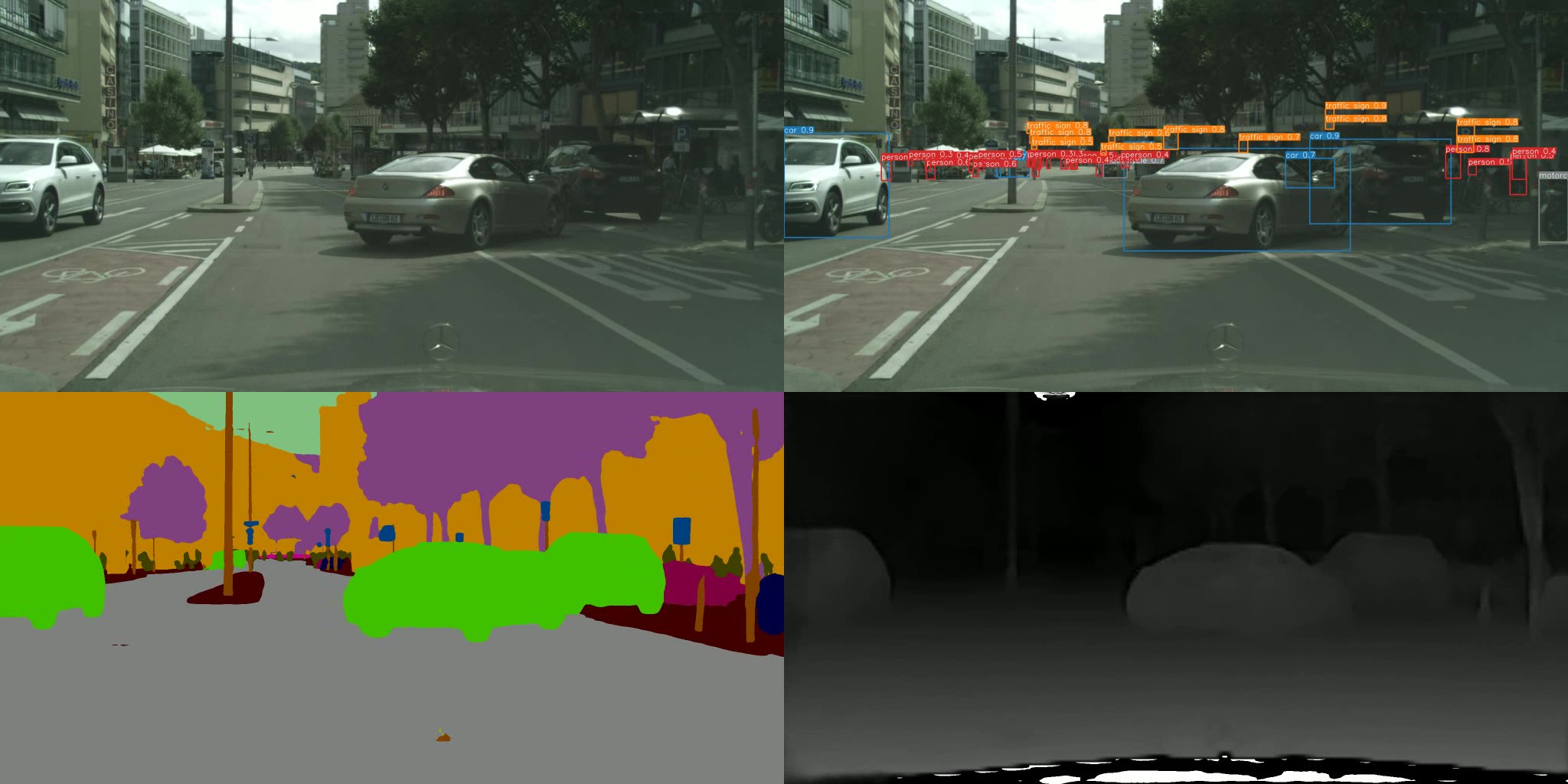

Teaser

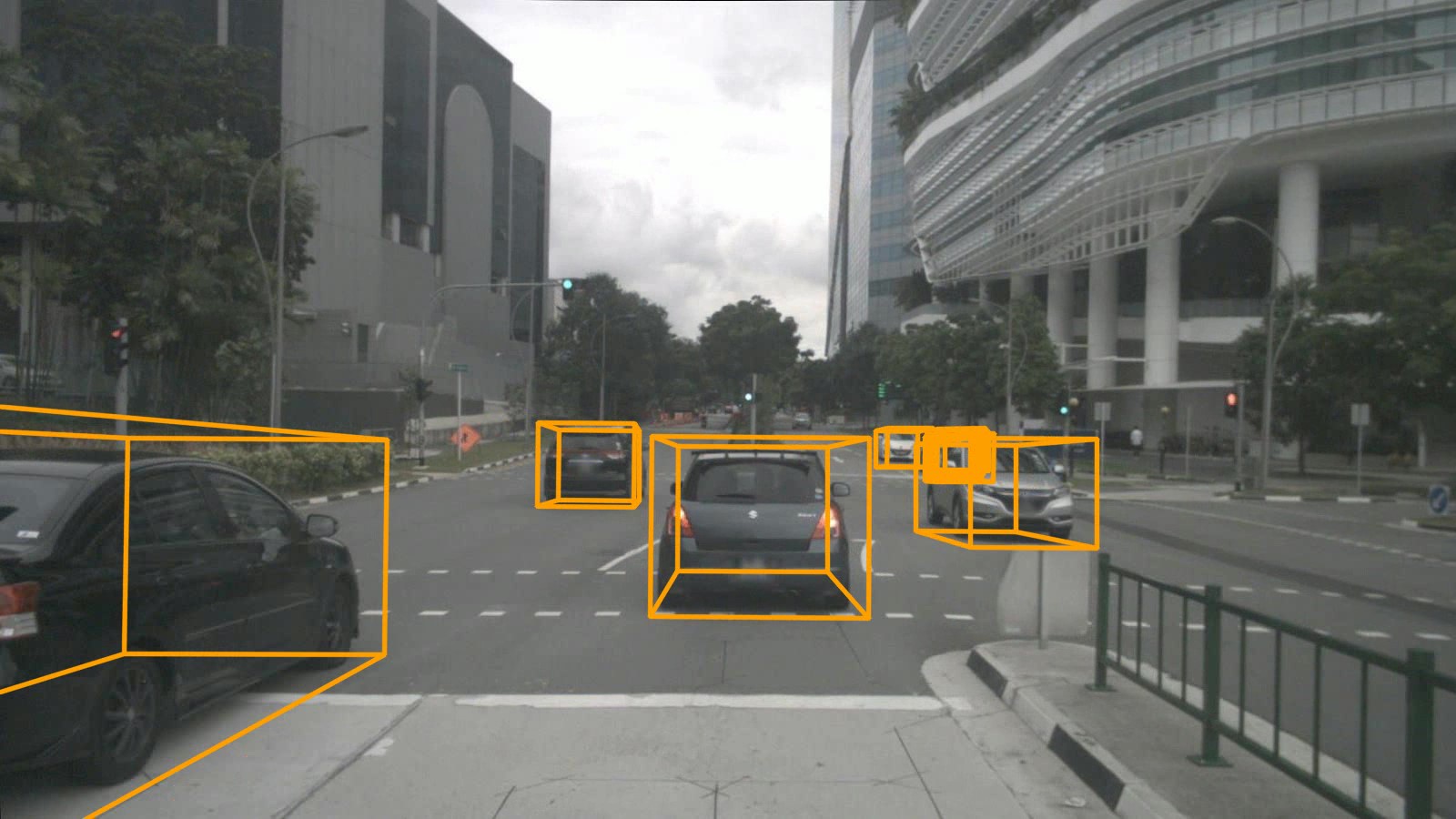

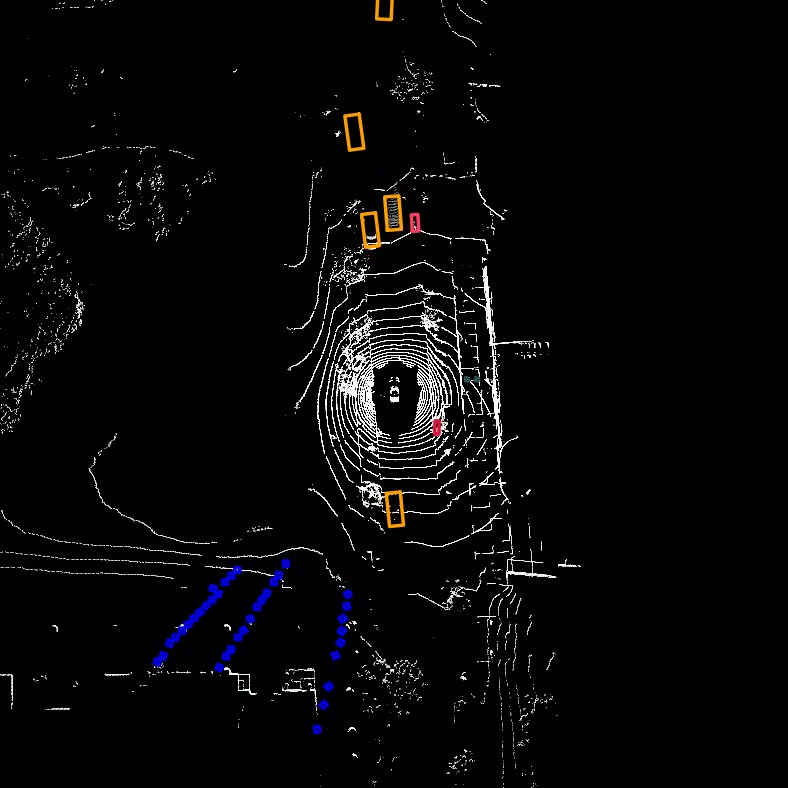

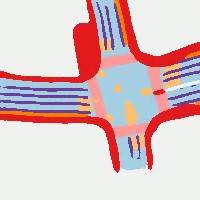

YOLOv7-semantic & YOLOv7-panoptic & YOLOv7-caption

YOLOv7-semantic & YOLOv7-detection & YOLOv7-depth (with NTUT)

YOLOv7-3d-detection & YOLOv7-lidar & YOLOv7-road (with NTUT)

Acknowledgements


- https://github.com/AlexeyAB/darknet

- https://github.com/WongKinYiu/yolor

- https://github.com/WongKinYiu/PyTorch_YOLOv4

- https://github.com/WongKinYiu/ScaledYOLOv4

- https://github.com/Megvii-BaseDetection/YOLOX

- https://github.com/ultralytics/yolov3

- https://github.com/ultralytics/yolov5

- https://github.com/DingXiaoH/RepVGG

- https://github.com/JUGGHM/OREPA_CVPR2022

- https://github.com/TexasInstruments/edgeai-yolov5/tree/yolo-pose
