# BS-RoFormer

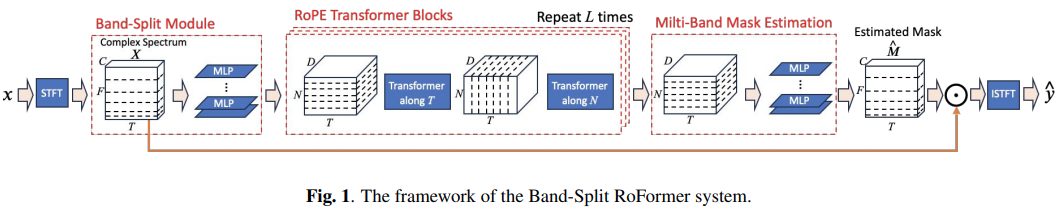

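Implementation of Band Split Roformer, a state-of-the-art attention network for music source separation from ByteDance AI Labs. It beat the previous first-place entry by a large margin. The technique applies axial attention across frequency (hence multi-band) and across time. The authors also report experiments showing that rotary positional encoding gave a large improvement over learned absolute positions.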
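The axial attention described above can be pictured with a small, dependency-free sketch. It is an illustration only, not the code in this repository: the helper names are hypothetical, queries, keys and values are the raw inputs (no learned projections, no rotary embeddings), and the data is a toy grid. The point is that attention runs along one axis at a time, so a grid of `T` frames by `F` bands needs about `T*F*(T+F)` attention scores instead of `(T*F)**2` for full attention over every cell.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(seq):
    # Scaled dot-product self-attention over a list of feature vectors.
    # Assumption: q = k = v = input, i.e. no learned projections.
    dim = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in seq]
        weights = softmax(scores)
        out.append([sum(w * v[d] for w, v in zip(weights, seq)) for d in range(dim)])
    return out

def attend_over_time(grid):
    # grid[t][f] is the feature vector for time frame t and frequency band f.
    # Each band is treated as its own sequence over time.
    n_time, n_freq = len(grid), len(grid[0])
    per_band = [self_attention([grid[t][f] for t in range(n_time)]) for f in range(n_freq)]
    return [[per_band[f][t] for f in range(n_freq)] for t in range(n_time)]

def attend_over_freq(grid):
    # Each time frame is treated as its own sequence over bands.
    return [self_attention(frame) for frame in grid]

def axial_attention(grid):
    # One axial layer: time attention followed by frequency attention.
    return attend_over_freq(attend_over_time(grid))

# Toy input: 3 time frames, 2 bands, feature dimension 2.
grid = [[[float(t), float(f)] for f in range(2)] for t in range(3)]
out = axial_attention(grid)
```

After the time pass alone, a band only sees its own past and future; information crosses bands only in the frequency pass, which is why the two passes are stacked.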

It also supports stereo training and outputting multiple stems.

Update 2: Used for a Katy Perry remix!

Update 3: Kimberley Jensen has open-sourced a MelBand Roformer trained on vocals!

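## Acknowledgements

- StabilityAI and 🤗 Huggingface for the generous sponsorship, as well as my other sponsors, for affording me the independence to open source artificial intelligence.
- Roee and Fabian-Robert for sharing their audio expertise and fixing the audio hyperparameters.
- @chenht2010 and Roman for working out the default band splitting hyperparameters.
- Max Prod for reporting a major bug in Mel-Band Roformer with stereo training.
- Christopher for fixing an issue with multiple stems in Mel-Band Roformer.
- Iver Jordal for identifying that the default STFT window function was incorrect.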

## Install

```bash
$ pip install BS-RoFormer
```

## Usage

```python
import torch
from bs_roformer import BSRoformer

model = BSRoformer(
    dim = 512,
    depth = 12,
    time_transformer_depth = 1,
    freq_transformer_depth = 1
)

# raw audio, shape (batch, samples)
x = torch.randn(2, 352800)
target = torch.randn(2, 352800)

# passing a target returns the training loss
loss = model(x, target = target)
loss.backward()

# after much training

out = model(x)
```

To use the Mel-Band Roformer proposed in a recent follow-up paper, simply import `MelBandRoformer` instead.

```python
import torch
from bs_roformer import MelBandRoformer

model = MelBandRoformer(
    dim = 32,
    depth = 1,
    time_transformer_depth = 1,
    freq_transformer_depth = 1
)

# raw audio, shape (batch, samples)
x = torch.randn(2, 352800)
target = torch.randn(2, 352800)

# passing a target returns the training loss
loss = model(x, target = target)
loss.backward()

# after much training

out = model(x)
```
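For readers unfamiliar with the term, the "Mel-Band" variant groups frequencies on the mel scale rather than with hand-picked band widths. The sketch below only illustrates what mel spacing means, using the common HTK-style formula; the function names, the 44100 Hz sample rate and the choice of 8 bands are assumptions for the example, and how the library actually builds its bands is not shown here.

```python
import math

def hz_to_mel(hz):
    # HTK-style mel formula (assumed here; other variants exist).
    return 2595.0 * math.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    # Inverse of hz_to_mel.
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_band_edges(num_bands, sample_rate):
    # Edges are equally spaced in mel between 0 Hz and the Nyquist frequency,
    # then mapped back to Hz.
    top = hz_to_mel(sample_rate / 2)
    return [mel_to_hz(top * i / num_bands) for i in range(num_bands + 1)]

# Hypothetical settings, chosen only for illustration.
edges = mel_band_edges(num_bands=8, sample_rate=44100)
widths = [hi - lo for lo, hi in zip(edges, edges[1:])]
```

Because the mapping back to Hz grows exponentially, `widths` increases from band to band: low frequencies get narrow bands and high frequencies get wide ones.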

## Todo

- [x] add the multi-scale STFT loss
- [x] determine what `n_fft` should be
- [x] review the band split and mask estimation modules
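Two items in the list above are linked: the band split has to cover every frequency bin of an STFT frame, and the number of bins is fixed by `n_fft`. The following is a minimal sketch of that relationship, not the module in this repository. The helper names and the toy band widths are hypothetical, and the learned per-band projection that would follow the split is omitted.

```python
def num_freq_bins(n_fft):
    # A one-sided STFT of real audio has n_fft // 2 + 1 frequency bins.
    return n_fft // 2 + 1

def split_into_bands(frame, freqs_per_band):
    # frame: values for one STFT frame, one entry per frequency bin.
    # freqs_per_band: width of each band in bins, low to high frequency.
    if sum(freqs_per_band) != len(frame):
        raise ValueError(
            f"band widths sum to {sum(freqs_per_band)}, "
            f"but the frame has {len(frame)} bins"
        )
    bands, start = [], 0
    for width in freqs_per_band:
        bands.append(frame[start:start + width])
        start += width
    return bands

# Toy example: n_fft = 16 gives 9 bins; narrow bands at low frequencies,
# wider bands higher up (hypothetical widths, chosen for illustration).
n_fft = 16
frame = list(range(num_freq_bins(n_fft)))
bands = split_into_bands(frame, (1, 1, 2, 2, 3))
```

Changing `n_fft` therefore requires revisiting the band widths, since a mismatch between the two leaves bins uncovered or asks for bins that do not exist.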

## Citations

```bibtex
@inproceedings{Lu2023MusicSS,
    title   = {Music Source Separation with Band-Split RoPE Transformer},
    author  = {Wei-Tsung Lu and Ju-Chiang Wang and Qiuqiang Kong and Yun-Ning Hung},
    year    = {2023},
    url     = {https://api.semanticscholar.org/CorpusID:261556702}
}

@inproceedings{Wang2023MelBandRF,
    title   = {Mel-Band RoFormer for Music Source Separation},
    author  = {Ju-Chiang Wang and Wei-Tsung Lu and Minz Won},
    year    = {2023},
    url     = {https://api.semanticscholar.org/CorpusID:263608675}
}

@misc{ho2019axial,
    title   = {Axial Attention in Multidimensional Transformers},
    author  = {Jonathan Ho and Nal Kalchbrenner and Dirk Weissenborn and Tim Salimans},
    year    = {2019},
    archivePrefix = {arXiv}
}

@misc{su2021roformer,
    title   = {RoFormer: Enhanced Transformer with Rotary Position Embedding},
    author  = {Jianlin Su and Yu Lu and Shengfeng Pan and Bo Wen and Yunfeng Liu},
    year    = {2021},
    eprint  = {2104.09864},
    archivePrefix = {arXiv},
    primaryClass = {cs.CL}
}

@inproceedings{dao2022flashattention,
    title   = {Flash{A}ttention: Fast and Memory-Efficient Exact Attention with {IO}-Awareness},
    author  = {Dao, Tri and Fu, Daniel Y. and Ermon, Stefano and Rudra, Atri and R{\'e}, Christopher},
    booktitle = {Advances in Neural Information Processing Systems},
    year    = {2022}
}

@article{Bondarenko2023QuantizableTR,
    title   = {Quantizable Transformers: Removing Outliers by Helping Attention Heads Do Nothing},
    author  = {Yelysei Bondarenko and Markus Nagel and Tijmen Blankevoort},
    journal = {ArXiv},
    year    = {2023},
    volume  = {abs/2306.12929},
    url     = {https://api.semanticscholar.org/CorpusID:259224568}
}

@inproceedings{ElNouby2021XCiTCI,
    title   = {XCiT: Cross-Covariance Image Transformers},
    author  = {Alaaeldin El-Nouby and Hugo Touvron and Mathilde Caron and Piotr Bojanowski and Matthijs Douze and Armand Joulin and Ivan Laptev and Natalia Neverova and Gabriel Synnaeve and Jakob Verbeek and Herv{\'e} J{\'e}gou},
    booktitle = {Neural Information Processing Systems},
    year    = {2021},
    url     = {https://api.semanticscholar.org/CorpusID:235458262}
}
```
